By Rob Farber, contributing writer

ExaWorks is an Exascale Computing Project (ECP)–funded project that provides access to hardened and tested workflow components through a software development kit (SDK). Developers use this SDK and associated APIs to build and deploy production-grade, exascale-capable workflows on US Department of Energy (DOE) and other computers. The prestigious Gordon Bell Prize competition highlighted the success of the ExaWorks SDK when the winner and two of three finalists in the 2020 Association for Computing Machinery (ACM) Gordon Bell Special Prize for High Performance Computing–Based COVID-19 Research competition leveraged ExaWorks technologies.[1],[2]

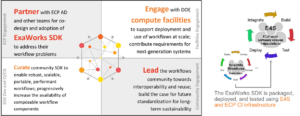

The ExaWorks SDK consists of a set of workflow management components that feature clean API designs, thereby enabling them to interoperate through common software interfaces. The project is working with the open-source community, application developers, large computing facilities, and high-performance computing (HPC) platform vendors to create a sustainable, cross-platform SDK. In particular, the project works with the Extreme-Scale Scientific Software Stack (E4S), which provides from-source builds, containers, and robust testing of a broad collection of HPC software packages (Figure 1).[3],[4],[5] The project also provides comprehensive SDK documentation along with user-facing examples and tutorials to facilitate adoption of workflow technologies by developers.[6]

Figure 1. ExaWorks ensures exascale readiness and availability. The ExaWorks SDK is packaged, deployed, and tested using the E4S and ECP continuous integration (CI) infrastructure. (Source)

The need for the ExaWorks SDK stems from the observation that, despite the historical prevalence of workflows in HPC, no single tool has demonstrated that it can meet the needs of all HPC projects. Many ECP projects, for example, created their own workflow solutions. As explained in “ExaWorks: Workflows for Exascale,” these solutions were created through bespoke processes that assembled multiple software components into complex multistage workflows and resulted in many tightly integrated, but unfortunately stove-piped, software solutions. An explosion in the number of per-project independent solutions is making development and support of workflows increasingly unwieldy, expensive, and unsustainable. Furthermore, the complexity of these workflows is expected to increase because of the need to run on multiple heterogeneous computing platforms and exploit all levels of parallelism across multiple disparate distributed computing environments. In a DOE Python Exchange video recorded on May 31, 2023, Rafael Ferreira da Silva (an ExaWorks co-PI) noted that the challenge is reflected in users’ comments that these powerful systems require “a developer to be ‘shipped’ with the workflow system.”

Based on these observations coupled with extensive research, the ExaWorks project created an SDK that addresses the need of applications teams to implement custom workflows utilizing robust and interoperable workflow technologies.

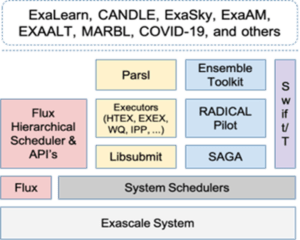

The SDK brings together four seed workflow technologies, specifically Flux, Parsl, RADICAL, and Swift/T. The ExaWorks SDK is working toward increasing composability of these tools, meaning that users can select and bring together these components in combination to construct domain-specific workflows.[7] This composability was key for the RADICAL Cybertools submission to the 2020 ACM Gordon Bell Special Prize competition in which RADICAL Cybertools leveraged Flux for increased performance. Many other applications such as ExaLearn, CANDLE, ExaSky, ExaAM, EXAALT, and MARBL utilize the ExaWorks software stack and APIs to implement their workflows (Figure 2).

Detailed information about Flux, Parsl, RADICAL, and Swift/T components is provided at ExaWorks.org.

Workflows Cannot Be an Afterthought

Thinking beyond current and legacy HPC workflows, the ExaWorks project created a general infrastructure designed to support a diverse range of workflows as a first-order requirement, as opposed to an afterthought. The beauty of this approach lies in the generality of workflows that can be created with ExaWorks for all major computing environments, including DOE supercomputers, cloud environments, and academic HPC clusters. The ExaWorks SDK enables the creation of HPC, AI, and industry workflows in all these environments. This generality is realized by providing developers access to hardened and tested components that they can use for the development and maintenance of an interoperable ecosystem of workflow systems and components.

The specific challenges for workflow management systems (WMSs) that ExaWorks is addressing are as follows:

- Workflow community: The workflow community is relatively fragmented with minimal communication between key stakeholders (e.g., workflow systems, applications, and facilities), little sharing of common components, and a lack of standard interfaces for interoperability.

- Portability and robustness: Most WMSs are tested on a handful of systems, and the frequency with which system hardware and software change makes it impossible to guarantee that a workflow will work on even the same system in the future.

- Scalability and performance: Many modern workflows feature huge ensembles of short-running jobs, which can create millions or even billions of tasks that must be rapidly scheduled and executed.

- Fault tolerance: The enormous number of computing elements and workflow tasks increases the likelihood of encountering faults within a workflow both at the system level and from the millions of concurrent tasks.

- Coordination and communication: Workflows depend on coordination between the workflow and the tasks within the workflow, thereby requiring efficient exchange of data following various communication patterns.

- Scheduling: Workflows must manage the efficient execution of diverse tasks (e.g., in runtime duration, resource requirements, single node or multinode) with complex interdependencies on increasingly heterogeneous resources.
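
The scheduling and fault-tolerance challenges above can be made concrete with a toy example. The following is a minimal sketch, not taken from any ExaWorks component: the function `run_workflow`, the task names, and the retry policy are all hypothetical, and a real WMS would dispatch tasks to a resource manager rather than call them in-process. It orders tasks by their dependencies using Python's standard `graphlib` module and retries a task that fails transiently.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, deps, max_retries=2):
    """Run zero-argument callables in dependency order, retrying failures.

    tasks: dict mapping task name -> callable (hypothetical task bodies)
    deps:  dict mapping task name -> set of task names that must finish first
    Returns (results, attempts), both keyed by task name.
    """
    graph = {name: deps.get(name, set()) for name in tasks}
    results, attempts = {}, {}
    # static_order() yields each task only after all of its predecessors.
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(1, max_retries + 2):
            attempts[name] = attempt
            try:
                results[name] = tasks[name]()
                break
            except Exception:
                # Give up once the retry budget is exhausted.
                if attempt == max_retries + 1:
                    raise
    return results, attempts


# Hypothetical three-stage campaign; "simulate" fails once, then succeeds.
calls = {"simulate": 0}


def preprocess():
    return "mesh"


def simulate():
    calls["simulate"] += 1
    if calls["simulate"] == 1:
        raise RuntimeError("transient node failure")
    return "fields"


def visualize():
    return "plot"


tasks = {"preprocess": preprocess, "simulate": simulate, "visualize": visualize}
deps = {"simulate": {"preprocess"}, "visualize": {"simulate"}}
results, attempts = run_workflow(tasks, deps)
print(results)
print(attempts)
```

Even this small sketch shows why production workflows are hard: the retry budget, the handling of partial results, and the ordering logic all have to keep working when the three tasks become millions.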

Workflow Communities: Sustainability through a Community Model

ExaWorks is an ambitious project designed to achieve generality and sustainability through a community-based software model. The community model has been successfully applied to several general and sustainable ECP projects, including core numerical libraries such as xSDK, PETSc, SUNDIALS, and Hypre.

Dan Laney, PI of the ExaWorks project and project leader of the Workflow Project in the Weapon Simulation and Computing Computational Physics program at Lawrence Livermore National Laboratory, noted, “A central goal of the ExaWorks project is to create a community that will extend beyond the ECP that will nucleate around shared workflow technologies. We want to enable teams throughout the world to develop their workflows based on a shared, community-driven set of open-source technologies. This will allow them to focus on the specifics of their domain while leveraging the hard work of the workflow community to build scalable, robust workflow capabilities.”

Laney observed that sustainability requires a significant focus on community building. The SDK is part of the value proposition for joining this open-source community effort, which gives small teams access to CI across many sites, a portal for documentation and tutorials, and opportunities for outreach. The commonality of the SDK also makes it easier to work toward common APIs and interoperability and facilitates communication and collaboration across workflow developers. Along with community feedback, the team also examines benchmarks and verification and validation as part of the CI effort. Building out the test suite and CI is a major part of the effort to assure, as best as possible, portability, interoperability, and performance.[8]

“Shovel Ready” for Exascale

According to Laney, “ExaWorks brought together a set of tools already being leveraged by several ECP applications teams. Since then, our job has been to build an open-source SDK that provides enhanced integrated testing at multiple sites, easy-to-follow tutorials and getting started guides, and an active community exploring interoperability across workflow components.”

Laney pointed out that, to date, ExaWorks has successfully brought together stakeholder communities at a series of summits that represented HPC facilities, application developers, and 27 workflow systems. Based on the success of the ECP-funded work, the project has received seed money for the period after ECP funding ends. The resulting proposal, led by Ferreira da Silva, is for a Center for Sustaining Workflows and Applications Services (SWAS).

The SWAS Proposal

If funded, the new center will focus on advancing and sustaining workflows and application services development with entrusted validation and verification capabilities (via a community-endorsed sustainability model) so that these systems and application services can provide the functionality and robustness required by DOE science users. Ferreira da Silva emphasized that “workflows in this context represent the broad set of software and services users need to configure, orchestrate, and operate modern analysis, modeling, and simulation campaigns.”

Specifically, the proposed center seeks engagement with the following communities:

- Workflow systems

- Data management frameworks

- Visualization frameworks

- AI/ML tools (used in modern workflows)

- Application services

According to the proposal, the center will bring together academia, national laboratories, and industry to create a sustainable software ecosystem supporting the myriad software and services used in workflows and the workflow orchestration software itself. SWAS will grow, support, and sustain the ecosystem spanning the full range of analysis, simulation, experiment, and ML workflows. SWAS will ensure that researchers can rely on robust, portable, scalable, secure, and interoperable workflow software and application services.

Figure 3. Clockwise from top left: Dan Laney, Kyle Chard, Shantenu Jha, and Rafael Ferreira da Silva.

Scalability, Fault Tolerance, and Coordinating Communications

Laney summarized the ExaWorks efforts in the vast areas of scalability, fault tolerance, and coordinating communications in terms of a common set of tasks that workflows must often perform: “We want to free teams from the burden of the ‘easy’ stuff so they can focus on the challenges such as exception handling (a research problem), portability, and monitoring and debugging of workflows. We need to make all these capabilities scale to millions of tasks and simulation campaigns that can run for weeks or months as well as online workflows connected to experimental facilities. This is a challenging problem, at least as difficult as building tools to debug massively parallel applications. One run is easy. Production runs are challenging because they can fail in various ways resulting in partial data, incomplete results, et cetera. We need to make this manageable by humans at scale when machines run thousands to tens of thousands of these runs over long periods of time and on many different machine and data center installations.”

Laney continued, “This includes pre- and postprocessing of the data produced by the production workflow. Our team likes to think in terms of invocations of a workflow and how it encompasses all issues of the applications (Message Passing Interface, I/O, GPU usage, and more) coupled with the added complexity of managing the entire workflow including I/O and visualization.”

Laney used the analogy of transitioning HPC from vector computing to distributed computing: “I think it is reasonable to think of workflows today as being at the same state of development as the tools and libraries that were being developed in the early days of the transition to distributed parallel computing. We need to mature our workflow tools and frameworks and hopefully converge to a smaller set of high-performance solutions that workflow developers can leverage to build custom workflows. This implies some level of standardization and consistency in the tool and frameworks—hence ExaWorks.”

Scheduling

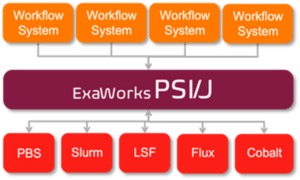

The paper “ExaWorks: Workflows for Exascale” presents the initial set of ExaWorks technologies and highlights Portable Submission Interface for Jobs (PSI/J), the first component envisioned as a common API for interacting with job schedulers in a unified way (Figure 4). Almost all portable workflow systems implement a layer similar to PSI/J to abstract different schedulers (e.g., Slurm, LSF). PSI/J is both a language-agnostic specification and a Python reference implementation of a set of interfaces that allow the specification and management of jobs. PSI/J supports common HPC schedulers such as Slurm, LSF, Cobalt, Flux, and the Portable Batch System (PBS).
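
To illustrate what such an abstraction layer does, here is a minimal sketch using only the Python standard library. It is not the PSI/J API: the `PortableJob` class, the translator functions, and `build_submit_command` are hypothetical names introduced for this example. The command-line options shown (`sbatch --job-name/--time/--wrap`, `bsub -J/-W`, `qsub -N/-l walltime`) are common ones, but sites differ, so they should be checked against local scheduler documentation before use.

```python
import shlex
from dataclasses import dataclass, field


@dataclass
class PortableJob:
    """Scheduler-agnostic job description (hypothetical; not a PSI/J class)."""
    name: str
    executable: str
    arguments: list = field(default_factory=list)
    walltime_minutes: int = 10


def _hhmmss(minutes):
    """Format a duration in minutes as HH:MM:SS."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}:00"


def slurm_command(job):
    # sbatch accepts a bare number of minutes for --time.
    wrapped = shlex.join([job.executable, *job.arguments])
    return ["sbatch", f"--job-name={job.name}",
            f"--time={job.walltime_minutes}", f"--wrap={wrapped}"]


def lsf_command(job):
    # bsub -W takes the run limit in minutes.
    return ["bsub", "-J", job.name, "-W", str(job.walltime_minutes),
            job.executable, *job.arguments]


def pbs_command(job):
    return ["qsub", "-N", job.name,
            "-l", f"walltime={_hhmmss(job.walltime_minutes)}",
            "--", job.executable, *job.arguments]


# One translator per scheduler; the caller never sees scheduler syntax.
TRANSLATORS = {"slurm": slurm_command, "lsf": lsf_command, "pbs": pbs_command}


def build_submit_command(job, scheduler):
    """Translate one portable job description into a submit command."""
    try:
        return TRANSLATORS[scheduler](job)
    except KeyError:
        raise ValueError(f"unsupported scheduler: {scheduler!r}") from None


job = PortableJob(name="demo", executable="/bin/date",
                  arguments=["-u"], walltime_minutes=90)
for scheduler in TRANSLATORS:
    print(scheduler, build_submit_command(job, scheduler))
```

The design point is that the job description is written once and the per-scheduler knowledge lives in a single replaceable layer, which is the role the article describes for PSI/J.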

According to the paper, encouraging adoption of common APIs in the user community while advocating for workflow requirements to enter directly into the procurement processes at facilities will lay the foundation for workflow developers to build, maintain, and support their workflow technologies in partnership with the facilities and private sector.

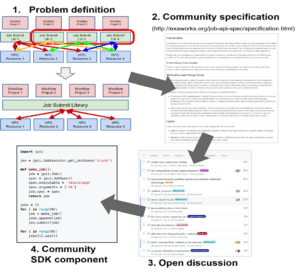

In terms of community effort, the initial focus has been on implementing the PSI/J Python library, but the team noted that implementations in other languages are encouraged. This focus is based on surveys of the user community including interviews and co-design meetings geared to creating a lightweight user-space API for job submission. This four-step process was made concrete by PSI/J and the open ExaWorks job specification language (Figure 5).

PSI/J

The PSI/J Python-language project was designed via a community effort on GitHub. The project provides a reference implementation written in relatively straightforward Python.

PSI/J represents an initial effort toward the successful adoption of common APIs in the workflow community in that PSI/J is scoped to achieve both adoption by bespoke workflow developers (i.e., small teams of domain scientists) and inclusion in workflow tools (starting with the ExaWorks SDK) that will be tested widely across many facilities and cloud providers. According to the authors, the focused scope of PSI/J may allow for it to be included as a requirement in future procurements with the possibility of it becoming a standard API provided for both users and workflow system developers.

In the May 31, 2023, DOE Python Exchange panel discussion, “Workflows Community Initiative & PSI/J Python Reference Implementation,” Ferreira da Silva and panelists discussed the latest developments in the Python and PSI/J workflow communities and where the ExaWorks tools fit in academic and scientific research. Ferreira da Silva framed this discussion in terms of the transition from traditional to modern workflow management along with the recent explosion of workflow technologies.

Other panelists discussed their research, including Jan Janssen (in the Theoretical Division at Los Alamos National Laboratory), who created a custom workflow, and Dr. Tanny Chavez, who discussed PSI/J and the Advanced Light Source at Lawrence Berkeley National Laboratory.

The Workflows Community Initiative

Ferreira da Silva explained the genesis of the Workflows Community Initiative (WCI). “In early 2022,” he recalled, “we, along with a group of international workflows researchers, launched the WCI. This is a volunteer initiative created to bring the workflow community together (users, developers, researchers, and facilities); to provide community resources and capabilities to enable scientists; help workflow system developers to discover software products, related efforts, events, technical reports, et cetera; and engage in community-wide efforts to tackle workflow grand challenges.” The WCI is also a topic of discussion in the May 31, 2023, DOE Python Exchange video.

The WCI has quickly grown into a thriving community with 170 members, 29 workflow systems cataloged, an active jobs board, and a regular workflow newsletter. In addition to general resources, WCI also offers working groups and regular Workflows Community Summits that address workflow challenges and solutions (the 2022 edition of the summit had more than 100 international participants from 18 countries). The main outcomes of these summits are summary technical reports of the discussions and, more importantly, a community road map for workflow research and development. The initiative has extended its presence to major supercomputing conferences, including the IEEE/ACM Supercomputing Conference, where a birds-of-a-feather session drew 85 attendees.

The WCI report is provided on Zenodo and the Workflows Community Summits page.

DOE CI Infrastructure and Status

Users can access a dashboard that reports the status of PSI/J according to performance metrics determined by the CI infrastructure running across multiple DOE sites. These sites currently include Oak Ridge National Laboratory, the National Energy Research Scientific Computing Center, Lawrence Livermore National Laboratory, and Argonne National Laboratory.

Information reported by such dashboards can save much time and funding because people can check whether the tools they need are functioning acceptably. The E4S project also provides such a dashboard for numerous tools that run inside the E4S CI framework. In this way, users can rely on data, not opinion, to determine which are the most portable workflow tools.
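
As a rough illustration of how dashboard data can replace opinion, the sketch below reduces a list of CI test records to a per-tool portability score. Everything in it is hypothetical: the record layout, the `portability_summary` function, and the placeholder site and tool names are made up for this example and do not reflect the actual PSI/J or E4S dashboards or any real test results.

```python
from collections import defaultdict


def portability_summary(records):
    """Return {tool: fraction of sites where every recorded test passed}.

    records: iterable of (site, tool, passed) tuples (hypothetical layout).
    """
    by_tool = defaultdict(lambda: defaultdict(list))
    for site, tool, passed in records:
        by_tool[tool][site].append(passed)
    return {
        tool: sum(all(outcomes) for outcomes in sites.values()) / len(sites)
        for tool, sites in by_tool.items()
    }


# Made-up sample records with placeholder names, for illustration only.
records = [
    ("site-a", "tool-x", True),
    ("site-a", "tool-x", True),
    ("site-b", "tool-x", True),
    ("site-a", "tool-y", True),
    ("site-b", "tool-y", False),
]
summary = portability_summary(records)
print(summary)
```

A score of 1.0 means the tool passed every recorded test at every site; lower values point to the sites worth investigating first.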

Summary

Through cooperation rather than competition, the community-based ExaWorks software development model is advancing the state of the art in workflow management. The community-based WCI was created to address grand challenges in workflow management, and the ExaWorks project is a concrete instantiation of this community effort to provide a core API. This API and its software components are based on a community effort that will extend beyond the ECP and will nucleate around shared workflow technologies.

The complexity and diversity of today’s on-premises and cloud computing environments require centralizing on a core API that can portably support these computing environments while delivering the performance and scalability needed to run on DOE leadership-class supercomputers. Testing via CI ensures that the ExaWorks software runs correctly and performs well on supported DOE systems. It also reflects the ability of the software to support users, developers, researchers, and facilities at other commercial, academic, and cloud data centers.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national laboratories and commercial organizations.

[1] https://www.osti.gov/servlets/purl/1863883

[2] https://www.exascaleproject.org/workflow-technologies-impact-sc20-gordon-bell-covid-19-award-winner-and-two-of-the-three-finalists/

[3] https://www.exascaleproject.org/event/e4s_at_nersc_aug2022/

[4] https://www.exascaleproject.org/extreme-scale-scientific-software-stack-e4s-releases-version-22-02/

[5] https://www.exascaleproject.org/revisiting-the-e4s-software-ecosystem-upon-release-of-version-22-02/

[6] https://www.osti.gov/servlets/purl/1863883

[7] https://www.exascaleproject.org/workflow-technologies-impact-sc20-gordon-bell-covid-19-award-winner-and-two-of-the-three-finalists/

[8] https://www.exascaleproject.org/continuous-integration-the-path-to-the-future-for-hpc/