By Rob Farber, contributing writer

The Exascale Atomistics for Accuracy, Length, and Time (EXAALT) project is an application development effort within the Exascale Computing Project’s (ECP) chemistry and materials portfolio. Its goal is to give scientists the ability to model the dynamics of atomic systems over longer periods of time than current standard methods allow, even when those methods run at scale on large leadership-class supercomputers.

Molecular dynamics (MD) simulations essentially create a sequential series of snapshots of a dynamical system of atoms, starting from a given initial state. These snapshots are then combined into what is essentially a 3D video that scientists can view to study the dynamics of the atoms and molecules in the simulated system. Danny Perez, a technical staff member at Los Alamos National Laboratory (LANL), notes, “With standard methods you will face a limitation. You can simulate larger systems but not long timescales. The technology is pushing us to bigger systems, but we are all bumping our heads against the communications wall, what keeps us from simulating over longer times.” He continues, “We need to fundamentally rethink our methods and rewrite our codes, [or] else we can expect diminishing scientific returns from each new generation of supercomputers.” The solution implemented by the EXAALT team is to parallelize over time instead of over space, as is usually done.
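The communications wall Perez describes can be illustrated with a deliberately simple cost model. The sketch below is an assumption for illustration, not an EXAALT or LAMMPS performance model: each timestep costs per-atom compute that is split across ranks, plus a fixed communication cost (`t_comm`) that adding ranks cannot remove. The function names and all parameter values are hypothetical.

```python
# Toy strong-scaling model with hypothetical parameters; not an EXAALT or LAMMPS model.


def step_time(n_atoms: int, n_ranks: int, t_per_atom: float, t_comm: float) -> float:
    """Wall-clock seconds per MD timestep under spatial decomposition.

    Compute work is divided evenly across ranks, but every step also pays a
    fixed communication/synchronization cost that adding ranks cannot remove.
    """
    return n_atoms / n_ranks * t_per_atom + t_comm


def steps_per_second(n_atoms: int, n_ranks: int,
                     t_per_atom: float = 1e-6, t_comm: float = 1e-4) -> float:
    """Timesteps per second of wall-clock time, i.e. how fast simulated time advances."""
    return 1.0 / step_time(n_atoms, n_ranks, t_per_atom, t_comm)


if __name__ == "__main__":
    n_atoms = 100_000
    for ranks in (1, 10, 100, 1_000, 10_000, 100_000):
        rate = steps_per_second(n_atoms, ranks)
        print(f"{ranks:>7} ranks: {rate:10.1f} steps/s")
    # The rate saturates just below 1 / t_comm = 10,000 steps/s, however many ranks are used.
```

In this model, doubling both the atom count and the rank count leaves the step rate unchanged, while adding ranks to a fixed-size system eventually stops helping: larger systems, but not longer timescales.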

Software Improvements Increase Performance by 22x

Parallelizing atomic simulations over time means the software can fully use all the processing elements in a modern parallel computer, even when simulating a small system on a leadership-class exascale supercomputer. It does so by generating many short trajectory segments in parallel according to a special protocol. A Parallel Trajectory Splicing algorithm[i],[ii] then splices these segments together into a coherent movie for scientists to study. Segments that cannot be used immediately are stored for possible use if the system revisits the same configuration later, thus minimizing wasted effort.
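The bookkeeping behind splicing can be sketched with a toy, serial simulation. This is a minimal illustration under stated assumptions, not ParSplice itself: the "system" hops between integer states, a hypothetical `generate_segment` stands in for a short MD run, every worker naively starts from the current end state, and segments are spliced only when their start state matches the end of the trajectory so far. Unused segments stay in a database keyed by start state.

```python
import random
from collections import defaultdict

# Toy model (assumption): the system hops between integer "states" (think:
# basins of the energy landscape). A segment is a short trajectory that starts
# in one state and ends in the same or a neighboring state.


def generate_segment(start: int, rng: random.Random, p_stay: float) -> tuple[int, int]:
    """Hypothetical stand-in for one short MD run; returns (start_state, end_state)."""
    if rng.random() < p_stay:
        return start, start
    return start, start + rng.choice((-1, 1))


def splice(current: int, database: dict) -> list[int]:
    """Consume stored segments whose start state matches the trajectory's end state."""
    path = []
    while database[current]:
        end = database[current].pop()
        path.append(end)
        current = end
    return path


def run(n_workers: int, n_rounds: int, p_stay: float, seed: int = 0):
    rng = random.Random(seed)
    database = defaultdict(list)  # start state -> end states of unused segments
    trajectory = [0]              # states at segment boundaries of the spliced movie
    generated = 0
    for _ in range(n_rounds):
        current = trajectory[-1]
        # Naive scheduling (assumption): every worker starts from the current end
        # state. The actual ParSplice scheduler is more sophisticated.
        for _ in range(n_workers):
            start, end = generate_segment(current, rng, p_stay)
            database[start].append(end)
            generated += 1
        # Splice what fits; leftovers stay stored in case the state is revisited.
        trajectory.extend(splice(current, database))
    return trajectory, generated, database


traj, generated, db = run(n_workers=8, n_rounds=100, p_stay=0.95)
print(f"spliced {len(traj) - 1} of {generated} segments; "
      f"{sum(map(len, db.values()))} stored for later")
```

Every generated segment is either spliced into the trajectory or kept in the database, and because a segment is only appended when its start state matches the current end state, the spliced trajectory remains a consistent sequence of states.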

Because of the way they conventionally use parallel computers, current MD codes are limited to relatively short timescales, regardless of whether they are simulating a small or large number of atoms. This limits scientists to roughly 100 ns of simulated atomic movement per day of computer runtime (100 ns/day). Using Parallel Trajectory Splicing, Perez and the EXAALT team at LANL, Sandia National Laboratories, and the University of Tennessee, Knoxville, report achieving much faster simulation rates of 0.5 ms/h, or approximately 12 ms/day of generated video, for a simple system of atoms. The team’s goal is to deliver a capability to run simulations:

- On timescales from picoseconds to milliseconds,

- At length scales from hundreds to trillions of atoms, and

- With accuracy from empirical to machine learning (ML) to quantum methods.
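The throughput figures above can be checked with a few lines of arithmetic. The rates (100 ns/day and 0.5 ms/h) are taken from the article, and 1 ms is the upper end of the target timescale range; the helper name `runtime_days` is ours. Keep in mind that the 0.5 ms/h rate was reported for a simple system, so the comparison is illustrative rather than general.

```python
# Figures from the article; unit helpers and names are ours.
NS = 1e-9  # seconds
MS = 1e-3  # seconds

standard_rate = 100 * NS       # simulated seconds per day of runtime (100 ns/day)
exaalt_rate = 0.5 * MS * 24    # 0.5 ms per hour -> simulated seconds per day


def runtime_days(simulated_seconds: float, rate_per_day: float) -> float:
    """Days of wall-clock time needed to generate `simulated_seconds` of trajectory."""
    return simulated_seconds / rate_per_day


print(f"EXAALT rate: {exaalt_rate / MS:.1f} ms/day")
print(f"Ratio to 100 ns/day: {exaalt_rate / standard_rate:,.0f}x")
print(f"1 ms at 100 ns/day: {runtime_days(1 * MS, standard_rate):,.0f} days")
print(f"1 ms at 12 ms/day:  {runtime_days(1 * MS, exaalt_rate) * 24:.0f} hours")
```

This prints 12.0 ms/day, a 120,000x ratio, and 10,000 days (roughly 27 years) versus about 2 hours to reach 1 ms of simulated time.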

The team reports an overall 288× increase in simulation rate compared to the baseline software release running on previous-generation hardware. Software improvements alone provided a 22× increase in performance; running the improved software on the newer hardware of the Summit supercomputer produced the overall 288× gain. This highlights the potential benefits of running EXAALT on future exascale systems.
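The two reported numbers also imply how much the hardware contributed. The article does not state this figure; the sketch below assumes the software and hardware gains compose multiplicatively, which is an approximation.

```python
software_speedup = 22.0   # software improvements alone (from the article)
overall_speedup = 288.0   # improved software on Summit vs. baseline (from the article)

# Assumption: the software and hardware gains compose multiplicatively.
implied_hardware_speedup = overall_speedup / software_speedup
print(f"Implied hardware contribution: {implied_hardware_speedup:.1f}x")
```

Under that assumption, the move to newer hardware accounts for roughly a 13.1x factor.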

Perez points out that this improvement resulted from a comprehensive and multidisciplinary team effort that:

- Took a fresh look at the problem, inspired by how modern machine learning libraries train neural networks, and identified a novel factorization that reduced the total number of operations at the expense of additional storage.

- Implemented a so-called proxy app (a simplified version of the production code that implements only the most computationally intensive operations). This enabled fast prototyping of a range of different implementations and the evaluation of different programming models.

- Reimplemented the most promising candidates in the production code and aggressively optimized them, especially for the type of hardware expected in upcoming exascale computers. This was possible because the team is composed of domain experts who are very familiar with the theoretical formulation of the problem, computer scientists who are fluent in different programming paradigms, and engineers who intimately understand the details of the hardware on which the code is executed. Credit is also due to collaborations within ECP (most notably with the CoPA co-design center), with the DOE computing facilities (mainly the NERSC computing center), and with vendors (e.g., NVIDIA).
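The article does not spell out the EXAALT factorization, so the sketch below is only a generic, hypothetical illustration of the same kind of trade described in the first bullet: an algebraic rewrite that cuts the number of expensive evaluations while storing intermediate values. `per_atom_term` is a made-up stand-in for a costly per-atom quantity.

```python
import math
import random


def per_atom_term(x: float) -> float:
    """Hypothetical stand-in for an expensive per-atom quantity."""
    return math.exp(-x) * math.sin(3.0 * x)


def pair_sum_recompute(xs: list[float]) -> tuple[float, int]:
    """Sum g(x_i) * g(x_j) over i != j, recomputing g inside the pair loop.

    2 * N * (N - 1) evaluations of g, but no extra storage.
    """
    total, evals = 0.0, 0
    for i, xi in enumerate(xs):
        for j, xj in enumerate(xs):
            if i != j:
                total += per_atom_term(xi) * per_atom_term(xj)
                evals += 2
    return total, evals


def pair_sum_factorized(xs: list[float]) -> tuple[float, int]:
    """Same quantity after factorizing: sum_{i != j} g_i g_j = (sum g)^2 - sum g^2.

    Only N evaluations of g, at the cost of storing the N intermediate values.
    """
    g = [per_atom_term(x) for x in xs]   # extra O(N) storage
    s = sum(g)
    return s * s - sum(v * v for v in g), len(xs)


rng = random.Random(1)
xs = [rng.uniform(0.0, 2.0) for _ in range(50)]
slow, slow_evals = pair_sum_recompute(xs)
fast, fast_evals = pair_sum_factorized(xs)
print(f"recompute: {slow_evals} evals, factorized: {fast_evals} evals, "
      f"difference {abs(slow - fast):.2e}")
```

Both functions compute the same sum up to rounding; the factorized version needs 50 evaluations instead of 4,900 for 50 atoms, paid for with a stored list of intermediate values.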

Perez notes that simulation throughput ultimately depends on the specific point in the accuracy, length, and time (ALT) space where the simulation is performed. The Parallel Trajectory Splicing algorithm used in the new code exploits the fact that the atoms in many simulations spend much of their time wiggling and jiggling around the same configuration, exhibiting the same behavior for long periods except when rapid “jumps” to new configurations occur. ParSplice takes advantage of this tendency to deliver impressive speedups. The caveat is that once atoms start behaving in new ways, such as when the temperature of the modeled system reaches the melting point, EXAALT’s performance advantage shrinks until, in the limit, it performs no better than a standard MD simulation. Once the atoms again start exhibiting the same behavior over time, the EXAALT software can again exploit this to deliver increased performance. For many atomic systems, EXAALT delivers the performance scientists need to study the longer-term dynamics of the atomic system of interest.
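Why the speedup depends on how often the system changes can be made concrete with a simplified model. Assume (this is not the ParSplice scheduler) that all `n_workers` workers start a segment from the current state and that each segment independently stays in that state with probability `p_stay`. All the "stay" segments chain together, and at most one "escape" segment can follow them, so the expected number of segments spliced per round, a proxy for speedup, is `n_workers * p_stay + 1 - p_stay ** n_workers`. The function names are hypothetical.

```python
import random


def expected_spliced(n_workers: int, p_stay: float) -> float:
    """Expected segments spliced per round under naive speculation (assumption).

    Every worker starts from the current state. Segments that stay in that state
    all chain together; at most one segment that escapes can be appended after
    them, because after it the trajectory sits in a new state.
    """
    return n_workers * p_stay + 1.0 - p_stay ** n_workers


def simulated_spliced(n_workers: int, p_stay: float, n_rounds: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_rounds):
        stays = sum(rng.random() < p_stay for _ in range(n_workers))
        escaped = stays < n_workers
        total += stays + (1 if escaped else 0)
    return total / n_rounds


for p in (0.999, 0.9, 0.5, 0.0):
    print(f"p_stay={p:<5}  expected={expected_spliced(100, p):6.1f}  "
          f"simulated={simulated_spliced(100, p, 2_000):6.1f}")
```

With 100 workers, a system that rarely leaves its state (`p_stay` near 1) approaches a 100x speedup, while a system that changes state in every segment (`p_stay = 0`, as near melting) falls back to 1x, the rate of a standard MD simulation.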

The Workflow

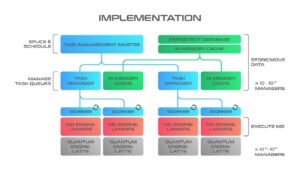

The EXAALT workflow combines three state-of-the-art codes into one unified tool that can use exascale platforms efficiently across all three dimensions of the ALT simulation space: the Large-Scale Atomic/Molecular Massively Parallel Simulator (LAMMPS), the Los Alamos Transferable Tight-Binding for Energetics (LATTE) code, and ParSplice. Figure 1 illustrates the EXAALT implementation.

Figure 1. EXAALT implementation. Note that the ParSplice code base is open source and can be freely downloaded. (Source: Danny Perez.)

The first layer, for task and data management (shown in blue and green in the figure), enables the creation of MD tasks, their management through task queues, and the storage of results in distributed databases. This layer is used to implement various replica-based accelerated MD techniques and to enable other complex MD workflows. The second layer is a powerful MD engine based on the LAMMPS code (shown in orange in the figure) that offers a uniform interface through which various physical models can be accessed. The third layer provides a wide range of physical models from which to derive accurate interatomic and/or molecular forces (shown in gray in the figure). In addition to the many empirical potentials implemented in LAMMPS, EXAALT also provides high-performance implementations of quantum MD at the density functional tight-binding level (through the LATTE code), of spectral neighbor analysis potentials, and of a set of high-accuracy machine-learned potentials.[iii]
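The three-layer design can be sketched in miniature. Everything below is hypothetical and deliberately tiny (one particle in 1D, a serial loop instead of distributed queues and databases); it mirrors the separation of concerns in Figure 1 and is not the ParSplice, LAMMPS, or LATTE API. The point is that the task layer and the MD engine never need to know which physical model supplies the forces.

```python
from collections import deque
from dataclasses import dataclass
from typing import Protocol

# Hypothetical names throughout; mirrors the three layers in Figure 1, not the
# actual ParSplice, LAMMPS, or LATTE APIs. One particle in 1D keeps it short.


class Potential(Protocol):
    """Physical-model layer: any model that returns (energy, force) at position x."""

    def energy_force(self, x: float) -> tuple[float, float]: ...


@dataclass
class Harmonic:
    k: float = 1.0

    def energy_force(self, x: float) -> tuple[float, float]:
        return 0.5 * self.k * x * x, -self.k * x


@dataclass
class Quartic:
    a: float = 0.25

    def energy_force(self, x: float) -> tuple[float, float]:
        return self.a * x**4, -4.0 * self.a * x**3


def md_engine(pot: Potential, x: float, v: float, dt: float,
              n_steps: int, mass: float = 1.0) -> tuple[float, float]:
    """MD-engine layer: velocity Verlet that talks to any model via one interface."""
    _, f = pot.energy_force(x)
    for _ in range(n_steps):
        v += 0.5 * dt * f / mass
        x += dt * v
        _, f = pot.energy_force(x)
        v += 0.5 * dt * f / mass
    return x, v


@dataclass
class Task:
    task_id: int
    x0: float
    v0: float
    n_steps: int


def run_tasks(tasks: list[Task], pot: Potential, dt: float = 0.01) -> dict[int, tuple[float, float]]:
    """Task/data-management layer: drain a task queue, store results in a 'database'."""
    queue = deque(tasks)
    database: dict[int, tuple[float, float]] = {}
    while queue:
        task = queue.popleft()
        database[task.task_id] = md_engine(pot, task.x0, task.v0, dt, task.n_steps)
    return database


tasks = [Task(task_id=i, x0=1.0, v0=0.0, n_steps=100 * (i + 1)) for i in range(3)]
for model in (Harmonic(), Quartic()):
    results = run_tasks(tasks, model)
    print(type(model).__name__, {tid: round(x, 3) for tid, (x, _) in results.items()})
```

Swapping `Harmonic` for `Quartic` changes only the model object passed in; the queue, engine, and result storage are untouched, which is the benefit of a uniform interface between layers.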

Scientific Application

Like a standard MD software package, EXAALT can generate unbiased trajectories from the initial state. Scientists use EXAALT to build virtual experiments for examining system behavior over very long timescales. This includes simulating long-term behavior and generating data that data scientists can use to train machine learning models of more accurate atomic potentials.

Understanding Long-Term Behavior

Nuclear fusion is a promising source of abundant carbon-free energy. However, harnessing fusion energy poses considerable engineering challenges. For example, the materials facing the plasma in a reactor are exposed to extreme conditions that can affect their performance and durability. To design commercially viable fusion power plants, engineers need to understand how these materials will evolve over years of operation.

Scientists use EXAALT to understand long-term behavior such as the development of the nanostructured “fuzz” that forms on the surface of tungsten parts in the so-called divertor of fusion reactors. These tendrils, which are on the order of tens of nanometers in diameter, form at very high temperatures (1,000–2,200 K) and can lead to premature materials failure or quench the fusion reaction by cooling and destabilizing the plasma. Scientists use EXAALT to perform large, time-extended MD simulations to gain insight into this problem,[iv],[v] with the goal of identifying mitigating solutions or new materials that would be more tolerant of these extreme conditions.

Identifying Limitations and Creating More Accurate Atomic Potentials

EXAALT is also an excellent data-generation package for training accurate ML models of atomic potentials.

The EXAALT team uses ML to create better models of atomic potentials. Perez notes, “One of our goals is to eliminate the need for humans to tediously create potentials by hand.” He points out that it can take humans many months to create a simple atomic potential model with tens of free parameters, and he observes that such a lengthy effort negates the time-to-solution benefits of running a scientific effort on an exascale supercomputer. Validation is also lengthy, and human-generated models are typically fit to only a relatively small number of quantum calculations or experiments.

Instead, scientists are planning to use EXAALT to automatically generate huge, complex datasets that can be used to train an artificial neural network (ANN) relatively quickly. Perez notes that such an ANN might contain 100,000 free parameters to represent the real atomic potential more accurately. Setting such a large number of free parameters appropriately for such a complex problem requires many training examples. Verification is also key to trusting the ANN model. Because EXAALT is fast, data scientists will be able to use it to create large, representative computer-generated datasets with which to both accurately train and extensively verify the model.
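The generate, train, and verify loop described above can be shown at toy scale. This sketch swaps the 100,000-parameter ANN for a model with just two free parameters that is linear in those parameters, and it swaps expensive reference calculations for an analytic 12-6 Lennard-Jones pair energy; both substitutions are assumptions made to keep the example short. What carries over is the workflow: generate labeled data, fit on one part, and verify on data the fit never saw.

```python
import math
import random


def reference_energy(r: float) -> float:
    """Stand-in for an expensive reference calculation (assumption: 12-6 Lennard-Jones)."""
    return 4.0 * (r**-12 - r**-6)


def features(r: float) -> tuple[float, float]:
    """Model form E(r) = a * r^-12 + b * r^-6; a and b are the free parameters."""
    return r**-12, r**-6


def fit(samples: list[tuple[float, float]]) -> tuple[float, float]:
    """Least-squares fit of (a, b) by solving the 2x2 normal equations."""
    s11 = s12 = s22 = y1 = y2 = 0.0
    for r, e in samples:
        f1, f2 = features(r)
        s11 += f1 * f1
        s12 += f1 * f2
        s22 += f2 * f2
        y1 += f1 * e
        y2 += f2 * e
    det = s11 * s22 - s12 * s12
    return (y1 * s22 - y2 * s12) / det, (s11 * y2 - s12 * y1) / det


def rmse(params: tuple[float, float], samples: list[tuple[float, float]]) -> float:
    """Root-mean-square energy error of the fitted model on a set of samples."""
    a, b = params
    sq = 0.0
    for r, e in samples:
        f1, f2 = features(r)
        sq += (a * f1 + b * f2 - e) ** 2
    return math.sqrt(sq / len(samples))


# "Generate" a dataset, then hold part of it back for verification.
rng = random.Random(42)
data = [(r, reference_energy(r)) for r in (rng.uniform(0.9, 2.5) for _ in range(500))]
train, held_out = data[:400], data[400:]

params = fit(train)
print(f"fitted a={params[0]:.6f}, b={params[1]:.6f}, held-out RMSE={rmse(params, held_out):.2e}")
```

Here the fit recovers a = 4 and b = -4 and the held-out error is essentially zero because the model form matches the reference exactly. A real ML potential has no such guarantee, which is why large, independently generated verification sets matter.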

Summary

The EXAALT team is working to combine software advances with exascale supercomputing into a tool that the scientific community can use to address fundamental questions in materials science, such as designing new materials for fusion reactors that may one day help meet the world’s energy needs.

Rob Farber is a global technology consultant and author with an extensive background in HPC and machine learning technology development that he applies at national labs and commercial organizations.

[i] D. Perez, E. D. Cubuk, A. Waterland, E. Kaxiras, and A. F. Voter, “Long-Time Dynamics through Parallel Trajectory Splicing,” Journal of Chemical Theory and Computation 12, no. 1 (2016): 18–28. https://pubs.acs.org/doi/abs/10.1021/acs.jctc.5b00916.

[ii] C. Le Bris, T. Lelievre, M. Luskin, and D. Perez, “A Mathematical Formalization of the Parallel Replica Dynamics,” Monte Carlo Methods App. 18, no. 2 (2012): 119–146. https://doi.org/10.1515/mcma-2012-0003.

[iii] “EXAALT,” Exascale Computing Project, https://www.exascaleproject.org/research-project/exaalt/.

[iv] D. Perez, L. Sandoval, S. Blondel, B. D. Wirth, B. P. Uberuaga, and A. F. Voter, “The Mobility of Small Vacancy/Helium Complexes in Tungsten and Its Impact on Retention in Fusion-Relevant Conditions,” Scientific Reports 7 (2017): 2552. https://www.nature.com/articles/s41598-017-02428-2.

[v] “EXAALT,” Exascale Computing Project, https://www.exascaleproject.org/research-project/exaalt/.