Harnessing the Power of Exascale Software for Faster and More Accurate Warnings of Dangerous Weather Conditions

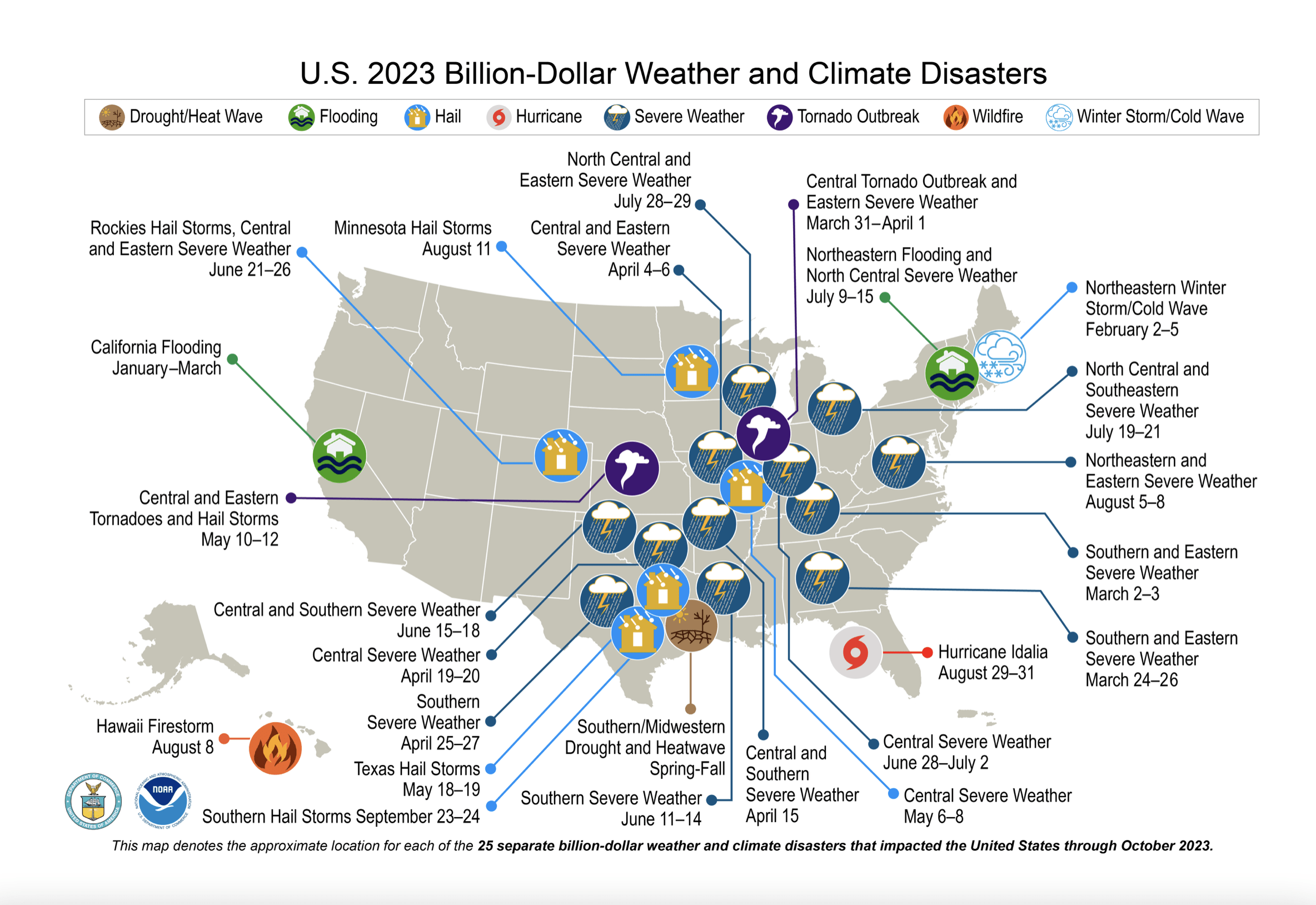

According to a recent report by NOAA, the US has confirmed a total of 23 separate billion-dollar weather and climate disasters in 2023 alone—the most events on record during a calendar year. Since 1980, the overall total cost of these billion-dollar weather disasters (including CPI adjustments to 2023) is $2.615 trillion. These numbers tell only part of the story as the single costliest type of natural catastrophe for insurers in 2023 wasn’t hurricanes or earthquakes or volcanic eruptions—it was thunderstorms.

Figure 1. Billion-dollar weather and climate disasters in the U.S. in 2023. (Source: NOAA)

Organizations such as NOAA use computers to help forecast such severe weather events as a public service to help mitigate damages, but the task is devilishly tricky. Scientists and meteorologists use massive computer programs called numerical weather forecast models to help determine whether conditions will be favorable for the development of a severe weather event—such as a thunderstorm, tornado, or hurricane. These computer models are designed to calculate what the atmosphere will do at certain points over a large area, from the earth’s surface to the top of the atmosphere.

Earth scientists use both climate and weather forecast models to gain insight as they seek to create and validate higher-resolution models that can more accurately reflect the weather we all experience. Climate and forecast models differ spatially and according to timescale, and the data used to validate them also differs:

- Climate models aim to reflect the behavior of previous weather patterns over long timescales by using historical data. These models are then run forward to project climate trends, El Niño and La Niña climate patterns, and more.

- Weather forecast models do something similar. They model and are validated against weather phenomena over a shorter time frame and over a localized (albeit large) region of the earth’s surface (and associated atmospheric volume).

Both types of models provide critical information for earth scientists and decision-makers. Daily computer-generated forecasts are used by meteorologists to warn of high-probability severe weather events and help us prepare accordingly, from planning outdoor events and making alternate travel plans to organizing emergency teams in advance of natural disasters. These forecasts are also affected by long-term climate trends, but the assimilation of volumes of historical data requires computers with massive data-handling capabilities. Complex climate models serve several purposes, including projecting trends in severe weather events over the long term and over very large geographical regions, and are used by decision-makers to formulate energy policy, set insurance rates, and more.

Faster Computers Matter

The good news is that both climate and weather forecast models are getting better as earth scientists work to incorporate more data sources and model large areas of the earth system of interest at higher resolutions. Modeling thunderstorms and tornadoes, for example, requires extremely high-resolution computer atmospheric models.

Weather forecast models particularly must run quickly enough to produce results in a timely fashion. Leslie Hart, who is a senior high-performance computing (HPC) software engineer at NOAA’s Office of the Chief Information Officer, noted, “Think in terms of a tornado forming where minutes count when issuing a warning. Even a few minutes of additional warning is invaluable.” Accuracy is extremely important because severe weather warnings disrupt people’s lives, and a failure to issue a warning may cost people their lives.

These models all rely on data, and lots of it. Data is gathered from weather balloons, satellites, aircraft, ships, temperature profilers, and surface weather stations and is used as input to the computer models. NOAA is also increasingly using historical data, which is very important in validating the models. Validation seeks to answer a question fundamental to any computer modeling effort: how do we know that the model reflects what is going on in the real world? (See “Severe Weather 101” by NOAA for more information.)
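As a rough illustration of what validation involves, the sketch below compares a set of forecast values against observations using two common summary statistics: bias (mean error) and root-mean-square error. The station temperatures are made up for the example, and the function names are illustrative; this is not NOAA's verification code.

```python
import math

def bias(forecast, observed):
    """Mean error: positive means the forecast runs too high on average."""
    return sum(f - o for f, o in zip(forecast, observed)) / len(observed)

def rmse(forecast, observed):
    """Root-mean-square error: the typical size of a forecast miss."""
    squared = sum((f - o) ** 2 for f, o in zip(forecast, observed))
    return math.sqrt(squared / len(observed))

# Hypothetical temperatures (degrees C) at five stations; not real data.
forecast = [21.0, 18.5, 25.0, 30.0, 15.5]
observed = [20.0, 19.5, 24.0, 28.0, 15.5]

print(f"bias = {bias(forecast, observed):+.2f} C")
print(f"rmse = {rmse(forecast, observed):.2f} C")
```

A model that scores well on checks like these across many historical cases earns more trust when it is run forward on new data.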

More data means better results from the models, but only if computers can handle it. According to Jeff Durachta, who is the engineering lead for software development at NOAA’s Geophysical Fluid Dynamics Laboratory (GFDL), faster computers matter because they enable greater data assimilation into the models. Hart explained, “The benefit of increased data means that we have been able to improve the accuracy of our models, but we need faster, more capable machines to run these data-rich models. Otherwise, unacceptable run times might result or worse: it might not be possible to run the model at all due to system memory and storage limitations. The benefit of faster, more capable machines means that we can look to resolve clouds and model thunderstorms more accurately, and more.”
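A back-of-the-envelope sketch can show why resolution drives both memory and run time. It assumes a uniform global grid, 100 vertical levels, 10 stored variables, and a rule of thumb that compute cost grows with the cube of the refinement factor (two horizontal dimensions plus a time step that must shrink along with the grid spacing). All of these values are assumptions chosen for illustration, not the configuration of any NOAA model.

```python
EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of the earth

def grid_cells(spacing_km, levels=100):
    """Approximate cell count for a uniform global grid (levels is assumed)."""
    columns = EARTH_SURFACE_KM2 / spacing_km ** 2
    return columns * levels

def memory_terabytes(spacing_km, variables=10, bytes_per_value=8):
    """Memory needed to hold the stored fields once, in terabytes (1e12 bytes)."""
    return grid_cells(spacing_km) * variables * bytes_per_value / 1e12

def relative_cost(spacing_km, baseline_km=25.0):
    """Compute cost relative to the baseline, assuming cost ~ (1/spacing)**3."""
    return (baseline_km / spacing_km) ** 3

for dx in (25.0, 12.5, 3.0, 1.0):
    print(f"{dx:5.1f} km: {grid_cells(dx):.2e} cells, "
          f"{memory_terabytes(dx):8.3f} TB, {relative_cost(dx):8.0f}x cost")
```

Under these assumptions, halving the grid spacing multiplies the work by eight, and moving from a 25 km grid to a 1 km grid multiplies it by more than 15,000.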

Software Is the Key

Software is the key ingredient that allows applications such as weather models to run on faster, more capable computer hardware. NOAA’s Software Engineering for Novel Architectures (SENA) team is working to ensure that the software used for earth system modeling can run on computers that are fast enough to meet the needs of meteorologists and climate scientists for years to come. This effort includes planning so that the software used to build even more accurate future forecast and climate models can run on the latest, fastest computer hardware—even when that hardware does not yet exist.

To anticipate these future needs, Durachta and his team along with other SENA participants have been using software from the US Department of Energy’s (DOE’s) Exascale Computing Project (ECP).

The ECP has spent the past 7 years building a freely available, easily deployable set of software components that can work together to robustly support scientific and mission-critical applications that run on everything from laptops to the world’s fastest exascale supercomputers. The ECP software (sometimes referred to as a software stack or software ecosystem) can even run in the cloud. As Durachta observed, “the ECP software presents an array of possibilities and examples that have proven effective. The accelerated abilities afforded by ECP software give access to faster machines, improve our infrastructure, and give scientists tools that could significantly improve the accuracy of our models by supporting new science.”

Currently, the fastest computers in the world are exascale supercomputers. These systems, when enabled by exascale-capable software, can perform a million trillion (10^18) calculations per second. The June 2023 TOP500 list shows that Oak Ridge National Laboratory’s Frontier, which is the first operational DOE exascale system, is currently the fastest system in the world. As of this writing, Frontier remains the only true exascale machine on the list. The TOP500 project has published an updated ranking of the world’s fastest supercomputers twice a year since 1993.
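To put "a million trillion" in perspective, the short sketch below computes how long one fixed workload would take at different machine speeds. The workload size and the laptop rate are assumed values picked for illustration, and the calculation treats every machine as running at its full rate, which real applications rarely achieve.

```python
EXA = 10 ** 18     # a million (10**6) times a trillion (10**12) operations per second
PETA = 10 ** 15    # the previous generation of leadership-class systems
LAPTOP = 10 ** 11  # assumed rate for a laptop; illustrative only

def runtime_seconds(total_operations, ops_per_second):
    """Ideal run time, assuming the machine sustains its full rate."""
    return total_operations / ops_per_second

# Hypothetical workload: what an exascale system could do in one hour.
WORKLOAD = 3.6e21

for name, rate in (("exascale", EXA), ("petascale", PETA), ("laptop", LAPTOP)):
    hours = runtime_seconds(WORKLOAD, rate) / 3600
    print(f"{name:>9}: {hours:,.0f} hours")
```

With these numbers, a job that takes about an hour at exascale would take roughly six weeks on a petascale system and more than a thousand years on the assumed laptop.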

Isidora Jankov, who is the Scientific Computing Branch lead at NOAA’s Global Systems Laboratory (GSL), sees an extraordinary future enabled by the latest generation of leadership-class supercomputers. “With exascale supercomputing,” she observed, “we can think and plan beyond what earth scientists are currently considering. One can even envision a future where an exascale-capable software infrastructure can leverage the capability of leadership-class supercomputers to execute numerical models at resolutions that will allow for explicit solving and understanding of physical processes that we have not anticipated before (e.g., explicitly resolving atmospheric turbulence over larger areas).” Such models promise to provide unprecedented insight into atmospheric phenomena.

Plan for the Fastest but Ensure Users Can Run the Models Anywhere

Durachta further explained the NOAA software vision: “There has been an ever-increasing emphasis on forecasting and mitigation. We [NOAA] are just beginning to increase the level of detail in our computer models. Exascale-capable software and acceleration are the gateway to resolving near-term weather and long-term climate with increasing detail. For us, the ECP has provided a catalyst for change at many levels.”

Hart expanded on this vision: “ECP computational successes have brought attention to and made exascale computing real for our organization. We are now being asked if we can start using exascale machines, which we can do with sufficient resources and scientific focus. It’s not just a computer science problem though; we need to include the earth system scientists. Think in terms of modeling the earth as a system. All that complexity can affect the weather.”

Melding Software and Science

Durachta cautioned that faster hardware by itself does not solve problems: “Our focus is on the ‘revolutionization’ of an HPC software ecosystem for earth system modeling. The key to harnessing faster machines, including systems that can deliver exascale levels of performance, lies in the melding of software and science. We at NOAA are on the on-ramp to faster computing, which can advance the state of the art in weather and climate forecasting and mitigation. Earth system scientists are taking notice. Innovative science is happening to harness faster machines including the introduction of machine learning and other computational approaches.”

A Shared Fate

Durachta praised the ECP “shared fate” approach when he noted, “The reality is the ECP has built the software on-ramp to running on many very capable systems up to and including machines that are exascale capable.”

Also referred to as “better together,” the ECP approach reflects both the realization and practical harnessing of the aspirations of numerous community software development projects. This ECP vision seeks to build an ecosystem of interoperable software components for the benefit of everyone. Applications such as climate and weather models can then use these components to robustly realize the performance capabilities of the many hardware pieces that go into the latest and fastest computers.

Why This Matters

Rusty Benson, who is the deputy lead for software development at NOAA’s GFDL, encapsulated much of the thought behind the ECP vision and the software planning for NOAA: “The ability to easily deploy our models [using what are called E4S containers] truly makes our models easy to run, portable, and available for everyone to use—even in the cloud. This is valuable and makes NOAA earth system modeling technology available to the worldwide community of research organizations. Such community availability provides the on-ramp to faster computers but also to open research. Open research provides the necessary condition for the melding of earth science and software to create the next generation of climate and forecast models.”

One example is the Unified Forecast System, a NOAA-initiated community model that has been made available via the Earth Prediction Innovation Center (EPIC).

Figure 2. Jeff Durachta (left), Isidora Jankov (center left), Rusty Benson (center right), Leslie Hart (right)

Summary

Weather simulations exemplify how computer models aid decision-makers in mitigating property damage, societal disruptions, and loss of life. For NOAA, these computer models assist in identifying problematic climate trends and helping to mitigate dangerous weather events. Faster computers provide scientists and engineers with the platforms they need to create and run ever more accurate versions of these massive computer programs.

Organizations such as NOAA recognize the value of the ECP software components. By using various Extreme-Scale Scientific Software Stack (E4S) features including training and by deploying the E4S software via the E4S common deployment capability, NOAA acts as a proof-point for others to capitalize on the extensive design efforts by the ECP teams. These teams have worked diligently to provide the software components that act as the “on ramps” to the faster computers that are the freeways to our computational future.

Even more fundamental are the recognition and adoption of the ECP “shared fate” vision at an organizational level. The success of the 7-year, $1.8 billion ECP stems from this “shared fate” vision about software technology.

Adoption of a shared fate vision is necessary because of the rapid rate of change in computer hardware and the advent of new technologies such as AI. The rapidity of technical innovation, although highly beneficial, has made it impossible to sustain a patchwork legacy of ad hoc approaches to software development and interoperability. These ad hoc approaches have proven wasteful because individual groups duplicate resources to develop similar yet incompatible software solutions to the same problems (i.e., they “reinvent the wheel”). Furthermore, it is not practical to provide every software project access to the latest hardware for software development, nor is it practical to provide developers with the continued and frequent access they need for continuous integration, which is a process that is essential to preserving software interoperability and robustness.

For More Information

See the following for more information about the ECP software packages currently being used by NOAA. Most of the ECP software has undergone extensive work by ECP experts to use the GPU hardware accelerators used by many computers to achieve high numerical processing rates.

- ECP website: This is the main site of information about ECP software and technology.

- E4S: This is the delivery vehicle for the hardened and robust ECP software components—even for on-demand runs on many cloud platforms. E4S is an extensive project that includes testing, a dashboard, a documentation portal, and a strategy group.

- Spack: NOAA uses Spack, a package manager for HPC software, to build its simulation software. Spack is also used to build all ECP software components.

- AMReX: AMReX is a performance-portable computational framework for block-structured adaptive mesh refinement applications. This computational framework efficiently uses accelerators such as GPUs. Jankov noted, “We have a research effort with AMReX to explore its capabilities. This has been a great collaboration! We also had a 2-day tutorial on best software practices, which has proven to be very helpful.”

- HDF5: NOAA uses NetCDF (Network Common Data Form) for storage operations. NetCDF is built using the Hierarchical Data Format version 5 (HDF5), which is an open-source file format designed to support large, complex, heterogeneous data such as the data produced by weather simulations. The HDF5 library delivers efficient parallel I/O operations to speed data access.

- ExaWorks: The ExaWorks software development kit (SDK) provides access to a collection of hardened and tested workflow technologies. This SDK is important because the modern computational workflows used by NOAA scientists manage huge amounts of data and computation and, if care is not taken, can take a long time to run. ExaWorks helps computer scientists exploit all levels of parallelism across multiple disparate distributed HPC computing environments to achieve fast run times. Many award-winning HPC projects utilize the ExaWorks SDK.

- Kokkos: Kokkos is a parallel runtime that helps many HPC applications achieve high performance on many hardware platforms. Work performed with ECP funding helps the Kokkos Programming Model leverage parallelism, asynchronous computations, and advanced hardware capabilities to enable developers to write fully performance-portable code for exascale-era and HPC platforms.

- LLVM: The NOAA software team is investigating integrating directly into the LLVM software ecosystem. The LLVM project is a collection of modular and reusable compiler and toolchain technologies. This is a huge community development effort that enjoys tremendous support. The ECP collaborates with the LLVM developers and has provided funding to the LLVM project.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Rob Farber is a global technology consultant and author with an extensive background in HPC and machine learning technology.
