Exploring the Universe and Nature’s Physical Processes with Unprecedented Power

By John Spizzirri

Argonne National Laboratory

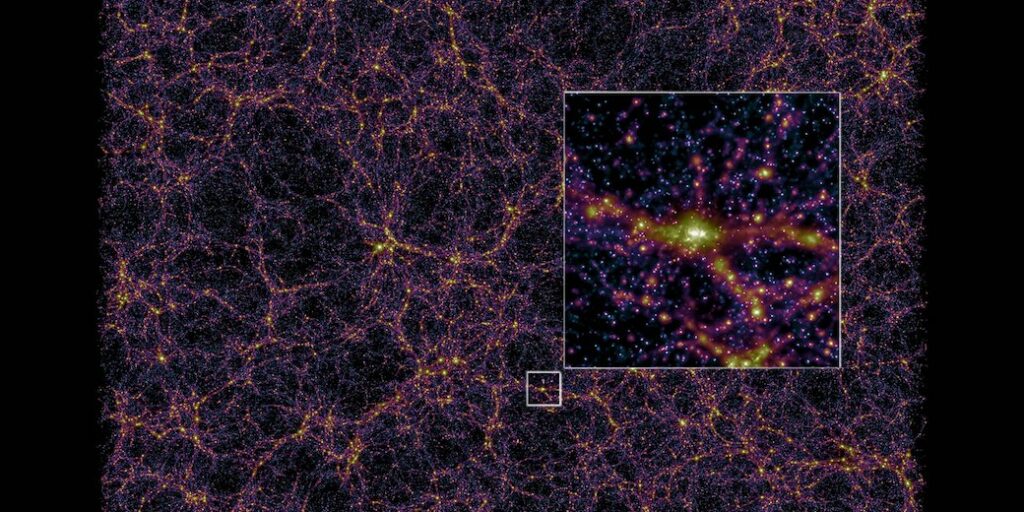

Visualization of results from a cosmological hydrodynamical simulation run with the CRK-HACC code. The figure shows the present-day gas distribution, with the density (brightness) and temperature (color) integrated along a slab of one-tenth the thickness of one side of the simulation cube. The inset highlights the details and the environment of a cluster of galaxies; clusters are the most massive bound objects in the universe, dominated by dark matter and containing very hot gas and hundreds to thousands of galaxies. The simulation was run on the Summit system at Oak Ridge National Laboratory and the ray tracing for the visualization was performed on the Polaris system at Argonne National Laboratory. Credit: Michael Buehlmann and the HACC team, Argonne National Laboratory.

By the turn of the seventeenth century, naked-eye observations, mathematics, and a rudimentary understanding of physics had produced enough data to sketch out a basic survey of our nighttime sky. But a change was coming that may very well have set the stage for modern cosmology, the study of the composition and evolution of the universe.

“The leap from naked-eye observation to instrument-aided vision would be one of the great advances in the history of the planet,” wrote former Librarian of Congress Daniel J. Boorstin, in his 1983 book, The Discoverers.

It was the Italian scientist and artist Galileo Galilei who, in 1609 and 1610, would become one of the first to turn a telescope toward the heavens.

Having redesigned the newly emerged spyglass, Galileo used his telescope to describe, in vivid detail, the landscape of the moon and provide his audience with a preview of the multitude of stars that were then beyond our ken.

But it was the discovery of four new “stars” attending the planet Jupiter that excited Galileo most. He called them the Medicean stars in honor of his benefactor, Cosimo II de’ Medici; we would later come to know them as moons of Jupiter. (As of 2023, astronomers count 95.)

In his pamphlet Sidereus Nuncius (Starry Messenger), he summoned “all astronomers to apply themselves to examine and determine their [the four moons’] periodic times,” but he urged that they not approach such an inquiry without “a very accurate telescope … ”

In the intervening 400 years, Galileo’s successors took heed and great pains to develop exacting optical instruments, and each new advancement led to greater strides in our understanding of the heavens and the laws that govern them.

“Today, progress in modern cosmology is driven largely by advances in our ability to deeply survey the sky in a number of observational windows across the spectrum, from radio waves to x-rays,” explains Salman Habib, a physicist and cosmologist at the US Department of Energy’s (DOE’s) Argonne National Laboratory.

“The progress consists of advances in the cosmological model itself, as well as the use of cosmological tools as probes of fundamental physics.”

Yet there is so much more to discover and explain. Doing so requires even more powerful observational capabilities and a complement of concurrent technologies, with computing chief among them, to breathe life into those observations.

Habib, an Argonne Distinguished Fellow and director of the lab’s Computational Science Division, works at the frontier between humankind’s largest and most complex optical instruments and the new era of exascale supercomputing.

With the power to perform more than a billion-billion calculations per second, these new exascale supercomputers will play a pivotal role in creating highly accurate computer simulations of the universe and the synthetic sky maps derived from them. Observations captured by sources such as the Vera C. Rubin Observatory’s Legacy Survey of Space and Time (LSST) in Chile and other sky surveys will help fill the gaps in these maps, which will ultimately provide a dynamic platform for studying new physics.

For example, three areas of primary interest in cosmology today are dark matter and its role in structure formation throughout the universe; dark energy, a mysterious ingredient thought to be responsible for the accelerated expansion of the universe; and the masses of neutrinos, particles that remain enigmatic within the Standard Model of particle physics.

“One big problem in conducting this type of research is that one cannot do experiments with the universe,” notes Habib, perhaps in a nod to Einstein. “It is not possible to run multiple universes and adjust for various parameters to see what happens. The best solution is to build realistic models in supercomputers and then investigate various scenarios by running our own virtual universes.”

Habib and his colleagues are doing exactly that through several concurrent projects, including ExaSky, which is funded and supported by DOE’s Exascale Computing Project (ECP). As a collaboration between multiple national laboratories, universities, and industry, ECP’s efforts support scientists whose complex research requires the unprecedented power and scale of these exascale-class supercomputers.

Within the ECP’s Application Development portfolio, 24 projects are on their way to a finish line slated for the end of 2023. Once deployed at scale, ECP’s projects will have impacts on a whole host of domains, including national, financial, and energy security; health care; and, of course, scientific discovery.

Since its inception, ECP has supported ExaSky in its effort to advance two complementary software codes that can accurately represent the vast range of parameters inherent in complex cosmological systems, from star-birthing nebulae to supernovae.

The codes predict the formation of cosmic structures on very large scales by tracking how billions of galaxies form and arrange themselves into a complex structure known as the cosmic web. In doing so, the codes account for the structure and physics of individual objects, such as a galaxy and its stellar constituents, along with all of their long-range gravitational interactions with other bodies.

Since he began his work with ECP, Habib has seen “tremendous progress” in the development of the ExaSky codes.

The HACC (Hardware/Hybrid Accelerated Cosmology Code) and Nyx codes are significantly updated versions of codes used for earlier simulated sky surveys, which were piloted by Argonne and Lawrence Berkeley National Laboratories on less powerful computers (although those machines were leading-edge for their time).

Each code uses a different approach to solve for a wide swath of physics, from N-body interactions, which govern gravitational dynamics, to gas physics and related astrophysical processes (e.g., star formation, supernova explosions, and feedback from gas heated as it falls into supermassive black holes).
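To make the N-body idea concrete, here is a deliberately naive Python sketch. It is an illustration under stated assumptions, not how HACC or Nyx actually work: it sums the gravitational pull of every particle on every other particle (an O(N²) direct summation), softens the force at very small separations, and advances the system with a kick-drift-kick leapfrog integrator. The units (G = 1), the softening length, and the function names are hypothetical choices for this example; production cosmology codes rely on much faster mesh- and tree-based solvers to handle billions of particles.

```python
import math

G = 1.0  # gravitational constant in toy "code units" (an assumption for this sketch)


def accelerations(pos, mass, softening=1e-2):
    """Direct-summation gravitational acceleration on every particle (O(N^2)).

    The softening length keeps the force finite when two particles pass
    very close to each other.
    """
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dx = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = dx[0] ** 2 + dx[1] ** 2 + dx[2] ** 2 + softening ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * mass[j] * dx[k] * inv_r3
    return acc


def leapfrog_step(pos, vel, mass, dt):
    """Advance positions and velocities by one kick-drift-kick leapfrog step."""
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
            pos[i][k] += dt * vel[i][k]        # drift
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return pos, vel


# Usage: two equal masses on a circular orbit should stay about one unit apart.
pos = [[-0.5, 0.0, 0.0], [0.5, 0.0, 0.0]]
v = math.sqrt(0.5)  # circular speed for G = m = 1 and separation 1
vel = [[0.0, -v, 0.0], [0.0, v, 0.0]]
mass = [1.0, 1.0]
for _ in range(1000):
    pos, vel = leapfrog_step(pos, vel, mass, dt=1e-3)
print(f"separation after t = 1: {math.dist(pos[0], pos[1]):.4f}")
```

Leapfrog is a common choice for gravitational problems because it is time-reversible and keeps long-term energy errors bounded, which matters when a simulation runs for many thousands of steps.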

Salman Habib is the director of Argonne National Laboratory’s Computational Science Division and an Argonne Distinguished Fellow. He holds a joint position in Argonne’s Physical Sciences and Engineering Directorate and has joint appointments at the University of Chicago and Northwestern University. Habib’s interests cover a broad span of research, ranging from quantum field theory and quantum information to the formation and evolution of cosmological structures.

“The idea is that the similar physical models in both codes should provide similar results at many different scales, even though the two codes utilize different computational approaches,” says Habib. “This agreement helps validate our understanding and treatment of the physical processes that are occurring in nature.”

Much of the ECP work has involved modifying the codes to run on various types and combinations of processors, from GPUs, the workhorses of newer high-performance computers, to more traditional CPUs.

This portability also eases adaptation to next-generation processors, and it has driven the development of new mathematical solvers for natural phenomena and of subgrid models that account for physical processes that cannot be directly simulated.

Subgrid models enable researchers to include small-scale phenomena that the simulations cannot resolve, such as active galactic nuclei, in which the compact central core of a galaxy emits immense amounts of radiation that does not originate from stars. The likely source of this radiation is a supermassive black hole at the center of the galaxy.

Although these black holes have millions to billions of times the mass of the sun, the processes that form them play out on scales too small for the current generation of cosmology codes to simulate directly.
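As an illustration of what a subgrid model can look like (the article does not say which prescription the ExaSky codes use, so treat this as a generic textbook example rather than their method), many galaxy-formation simulations estimate a black hole’s growth from gas properties that the grid does resolve. A common choice is a Bondi–Hoyle–Lyttleton accretion rate multiplied by a boost factor α and capped at the Eddington limit, with a fraction of the accreted rest-mass energy returned to the surrounding gas as feedback:

$$
\dot{M}_{\mathrm{BH}} = \min\left( \frac{4\pi\,\alpha\,G^{2} M_{\mathrm{BH}}^{2}\,\rho}{\left(c_s^{2} + v^{2}\right)^{3/2}},\; \frac{4\pi G M_{\mathrm{BH}}\, m_p}{\epsilon_r\, \sigma_T\, c} \right),
\qquad
\dot{E}_{\mathrm{feedback}} = \epsilon_f\, \epsilon_r\, \dot{M}_{\mathrm{BH}}\, c^{2}
$$

Here M_BH is the black hole mass, ρ and c_s are the density and sound speed of the nearby gas, v is the black hole’s velocity relative to that gas, G is the gravitational constant, m_p is the proton mass, σ_T is the Thomson cross-section, c is the speed of light, ε_r is the radiative efficiency (often taken as about 0.1), and ε_f is the fraction of the radiated energy assumed to couple to the gas. Every quantity on the right-hand side is something a simulation can measure at its own resolution, which is what lets the unresolved physics be folded in.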

Although these high-tech upgrades move us toward a better understanding of the universe and its many galactic neighborhoods and stellar nurseries, they do so with the stipulation that we are modeling the universe, not observing it. Computationally, these models, driven by dozens to hundreds of specific technical parameters, confront the intrepid researcher with a mind-boggling crush of ones and zeros.

And all of those ones and zeros, which constitute potentially many petabytes of data from a single simulation run, must be analyzed.

Exascale machines, such as Oak Ridge National Laboratory’s Frontier and Argonne’s upcoming Aurora, will enable complex scientific analyses far beyond anything we are capable of at the moment.

Work on ExaSky includes a whole new set of analysis tools that allow for both in situ analyses (i.e., analyzing the new data while the model is running) and fast off-line analyses.
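The difference between in situ and off-line analysis can be sketched in a few lines of Python. This toy is hypothetical throughout: the “simulation” just multiplies cell densities by random growth factors (not real physics), and the function names are invented for illustration. The point it shows is structural: an analysis callback reduces the full state to a handful of summary numbers every few steps, while the data are still in memory, so full snapshots never need to be written out and re-read later.

```python
import random
import statistics
from typing import Callable, Dict, List

Snapshot = List[float]  # toy stand-in for a simulation state: one density value per cell


def toy_simulation_step(state: Snapshot, rng: random.Random) -> Snapshot:
    """Hypothetical stand-in for one time step: each density is multiplied by a
    random growth factor between 1.00 and 1.05 (not real physics)."""
    return [d * (1.0 + 0.05 * rng.random()) for d in state]


def summarize(step: int, state: Snapshot) -> Dict[str, float]:
    """In situ analysis: reduce the full state to a few numbers while it is in memory."""
    return {
        "step": step,
        "mean": statistics.fmean(state),
        "max": max(state),
        "frac_overdense": sum(d > 2.0 for d in state) / len(state),
    }


def run(n_cells: int, n_steps: int,
        analyze: Callable[[int, Snapshot], Dict[str, float]],
        every: int = 10, seed: int = 0) -> List[Dict[str, float]]:
    """Run the toy simulation, calling the analysis hook every `every` steps."""
    rng = random.Random(seed)
    state = [1.0 + 0.1 * rng.random() for _ in range(n_cells)]
    results = []
    for step in range(1, n_steps + 1):
        state = toy_simulation_step(state, rng)
        if step % every == 0:
            # Analyze on the fly; the full snapshot is never stored.
            results.append(analyze(step, state))
    return results


summaries = run(n_cells=10_000, n_steps=100, analyze=summarize, every=10)
for s in summaries[:2] + summaries[-1:]:
    print(s)
```

One reason to keep the analysis behind a callback is that swapping in a different `analyze` function changes what is measured without touching the simulation loop itself.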

“And this is all very important,” says Habib. “Problems that were once considered virtually impossible become doable, and hard problems can be addressed in hours, rather than on time scales running into many months.”

Data derived from the simulations are used to build very realistic models of what will be observed by sky surveys such as LSST, the Dark Energy Spectroscopic Instrument (DESI), and the South Pole Telescope. Sky surveys observe the universe in different wavelengths and are often optimized to investigate different aspects of cosmology. Some target large areas of the sky, and others focus on looking deeper (i.e., further back in time) over relatively small patches of sky.

For example, some of Habib’s research focuses on the cosmic microwave background (CMB), a primordial signature from the infancy of the universe. At that time, there were no structures, only an almost uniform, very hot plasma somewhat reminiscent of the interior of the sun.

As the universe expanded and cooled, atoms (hydrogen and helium) formed, and light could escape, thereby preserving a record of that time in the fossil radiation that is the CMB. Small fluctuations in the density of the universe seeded in the distant past caused fluctuations in the temperature and polarization of the CMB, which we can measure today. These small fluctuations in density then grew through gravitational attraction to form denser regions that would evolve into stars and galaxies and, eventually, life.
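The “expanded and cooled” step can be checked with back-of-the-envelope arithmetic. Because expansion stretches photon wavelengths by a factor of (1 + z), where z is the redshift, the CMB’s blackbody temperature scales as T(z) = T₀(1 + z), with T₀ ≈ 2.725 K measured today. Taking z ≈ 1100 as a commonly quoted round value for the era when atoms formed gives roughly 3,000 K, and Wien’s displacement law then places the spectrum’s peak in the near-infrared at that time versus about a millimeter, in the microwave band, today. The sketch below uses those standard constants; the function and constant names are illustrative choices.

```python
T0_K = 2.725                 # present-day CMB temperature (kelvin)
WIEN_B_M_K = 2.897771955e-3  # Wien displacement constant (meter-kelvin)
Z_RECOMBINATION = 1100       # approximate redshift when atoms formed and light escaped


def cmb_temperature_k(z):
    """Blackbody temperature of the CMB at redshift z: T(z) = T0 * (1 + z)."""
    return T0_K * (1.0 + z)


def peak_wavelength_m(temperature_k):
    """Wavelength at which a blackbody spectrum peaks (Wien's displacement law)."""
    return WIEN_B_M_K / temperature_k


for label, z in [("when atoms formed", Z_RECOMBINATION), ("today", 0)]:
    t = cmb_temperature_k(z)
    lam = peak_wavelength_m(t)
    print(f"{label:>18}: T = {t:7.1f} K, peak wavelength = {lam * 1e9:9.1f} nm")
```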

Whereas Galileo could describe only the universe his tools allowed him to see, today’s wealth of sky surveys allows cosmologists to see the universe, both visible and not, in the language of electromagnetic radiation.

Just as the CMB was detected by its microwave radiation, x-rays, infrared and ultraviolet light, radio waves, and gamma rays can all reveal different components of the cosmos.

Modeling the trove of data from each of these pathways separately may once have proved a daunting challenge. Today, however, the transformative power of exascale computing and the collective knowledge gained from communities like ECP will enable simulations to make predictions for all of these at once.

“The point is that we now have the capability, the computing power, and the physics to address all of these results, or all of these datasets, and all of their cross-correlations using a single simulation strategy, which is a very powerful tool,” states Habib.

The payoff takes two forms. Researchers can generate many universes with different parameters and compare their models with real observations to determine which models most accurately fit the data.
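In its simplest form, comparing many virtual universes with observations is a fitting problem. The sketch below is a toy under loudly stated assumptions: the “simulation” is a hypothetical one-parameter power law standing in for an expensive run, the “observations” are synthetic numbers generated from an assumed true amplitude of 0.8 plus 2% Gaussian noise, and the fit is a brute-force chi-squared scan over a grid of candidate universes. Real cosmological analyses use far richer models and statistical machinery, but the basic idea of scoring each candidate model against the data carries over.

```python
import random


def model_prediction(amplitude, k_values):
    """Hypothetical stand-in for an expensive simulation: a toy 'power spectrum'
    P(k) = amplitude**2 * k**-1.5."""
    return [amplitude ** 2 * k ** -1.5 for k in k_values]


def chi_squared(predicted, observed, sigma):
    """Goodness of fit: sum of squared residuals weighted by measurement errors."""
    return sum(((p - o) / s) ** 2 for p, o, s in zip(predicted, observed, sigma))


# Synthetic "observations": an assumed true amplitude plus 2% Gaussian noise.
rng = random.Random(42)
k_values = [0.05 * i for i in range(1, 21)]
truth = model_prediction(0.8, k_values)
sigma = [0.02 * t for t in truth]
observed = [t + rng.gauss(0.0, s) for t, s in zip(truth, sigma)]

# "Run many universes": scan a grid of amplitudes and keep the best fit.
grid = [0.60 + 0.005 * i for i in range(81)]  # 0.600 ... 1.000
scores = {a: chi_squared(model_prediction(a, k_values), observed, sigma) for a in grid}
best = min(scores, key=scores.get)
print(f"best-fit amplitude: {best:.3f}")
```

A grid scan is the easiest version to read; it becomes impractical as the number of parameters grows, which is one reason large analyses turn to smarter sampling strategies.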

Alternatively, the cosmological simulations can make predictions for observations yet to be made and thereby serve as a source of discovery. By producing extremely realistic pictures of the sky, the simulation results allow researchers to optimize telescopes and observational campaigns such as DESI and LSST to meet their data and science challenges—a forward-thinking strategy that Galileo would have applauded.

At the end of 2023, ECP will close shop, and ExaSky will go its own way. As exciting as this time has been for Habib and his colleagues, they exit with some regrets. Chief among them is that the project is ending just as the Frontier and Aurora systems come fully online and the Application Development teams are only reasonably prepared for the first runs on the new machines.

Still, Habib sees the ECP work as a major success, one that DOE can be proud of in terms of successful collaborations between scientists of all stripes, different labs, and outside vendors as well as the advances made in a relatively short time—roughly the span of a single supercomputer generation.

Habib is particularly saddened to part company with a community that bonded and thrived over the course of the ECP project; many of its members, he notes, became true experts in their fields because of this interaction.

“There’s this whole idea of a shared fate kind of scenario, where everyone works together to meet the measures and goals,” says Habib. “And the ECP community has a good combination of senior people with lots of experience down to postdocs and even undergraduates who are all super smart and making major contributions to the overall effort. I think having this large and fun mix of people is what makes science worth doing, aside from the results that you get.”

For now, the team will write up its findings and share its trials and triumphs with the larger scientific community, anxiously awaiting results from the full exascale systems to determine the breadth of its success and what might need further tweaking.

Beyond the exascale aspect, their work in virtual universes is already adding depth of knowledge to the growing field of digital twins. These are sophisticated models that can make robust predictions for, and help us understand, the underlying issues behind unexpected changes in systems as varied as disease spread, geosciences, weather, climate, and industrial processes.

Just as Galileo’s simple telescope started a revolution in scientific tools and thought, the observations that result from ExaSky and similar sky maps will undoubtedly turn long-held beliefs on their head and inexorably alter our view of the universe.