EQSIM and RAJA: Enabling Exascale Predictions of Earthquake Effects on Critical Infrastructure

Nearly 120 years ago, the great “San Francisco” earthquake of 1906 provided a stark and sobering view of the havoc that can be caused by the sudden and violent movement of Earth’s tectonic plates. According to USGS, the rupture along the San Andreas fault extended 296 miles (477 kilometers) and shook so violently that the motion could be felt as far north as Oregon and east into Nevada. The estimated 7.9-magnitude quake and subsequent fires decimated the major metropolis and surrounding areas: buildings were reduced to ruins, hundreds of thousands of people were left homeless, and the death toll exceeded 3,000. Today, as evidenced by the catastrophic 7.8-magnitude earthquake that struck Turkey in February 2023, large earthquakes still present a significant danger to life and economic security, and researchers continue working to better understand earthquake phenomena and quantify the associated risks.

High-performance computing (HPC) has enabled scientists to use geophysical and seismographic data from past events to simulate the underlying physics of earthquake processes. This work has led to the development of predictive capabilities for evaluating the potential risks to critical infrastructure posed by future tremors. However, data limitations and the high computational burden of these models have made detailed, site-specific resolution impossible, until now. With the Department of Energy’s (DOE’s) Frontier supercomputer, the world’s fastest and first exascale system, researchers have access to a transformative degree of computational power and problem-solving capability that extends the range of earthquake prediction to previously unattainable realms.

Exascale systems are the first HPC machines to provide the size and speed needed to run regional-level, end-to-end simulations of an earthquake event (from the fault rupture, to the waves propagating through the heterogeneous Earth, to the waves interacting with a structure) in less time and at the higher frequencies necessary for infrastructure assessments. However, the full potential of exascale could only be realized through a concerted effort to enhance the codes used to run the models and to implement improved software technology products that boost application performance and efficiency. Through DOE’s Exascale Computing Project (ECP), a multidisciplinary team of seismologists, applied mathematicians, application and software developers, and computational scientists has worked in tandem to produce EQSIM, a unique framework that simulates site-specific ground motions to investigate the complex distribution of infrastructure risk resulting from various earthquake scenarios. “Understanding site-specific motions is important because the effects on a building will be drastically different depending on where the rupture occurs and the geology of the area,” says principal investigator David McCallen, a senior scientist at Lawrence Berkeley National Laboratory who leads the Critical Infrastructure Initiative for the Energy Geosciences Division and is director of the Center for Civil Engineering Earthquake Research at the University of Nevada at Reno. “ECP’s multidisciplinary approach to advancing HPC and its collaborative research paradigm were essential to making this transformative tool a reality. By having all the experts focused on the project together at the same time, we achieved the seemingly impossible.”

An Ambitious and Lofty Goal

At the heart of EQSIM is a Lawrence Livermore–developed wave propagation code called SW4 (Seismic Wave, 4th Order). SW4 is a summation-by-parts finite difference code for simulating seismic motions in a 3D Earth model that incorporates both a material model of an area (the San Francisco Bay Area, for example) and a rupture model along a specific fault. “Our ECP goal was rather lofty and ambitious,” says Livermore applied mathematician and SW4 developer Anders Petersson. “We aimed to resolve earthquake ground motions up to 10 Hertz in frequency, which correspond to very short wavelengths, and to do such a simulation in 4 to 5 hours.” McCallen adds, “Higher frequency motions mean the ground shakes more rapidly. The structural vibrations and the dynamics of the structures we are analyzing demand these higher frequencies so that we can adequately evaluate infrastructure risk.”

To achieve this goal, the team had to advance algorithms and adapt codes to efficiently utilize exascale architectures based on graphics-processing units (GPUs). From an application development perspective, Petersson is quick to point out the “magnitude” of such a feat. “Every time you double the frequency, the amount of computational work you must do increases by a factor of 16, so achieving 10 Hertz is around 1,000 times harder than simulations with frequencies on the order of 1 to 2 Hertz,” he says. “The other challenge is timestepping. As we go to higher frequencies, the shorter wavelengths oscillate faster in time and that requires more timesteps. Throwing more compute nodes on the problem allows each time step to go faster, but the number of timesteps increases linearly with the frequency, so it seemed almost impossible to complete such a run in 4 to 5 hours, considering simulations with a frequency of 2 Hertz took 20 to 30 hours previously. Ultimately, it was further improvements in the code that increased the accuracy and reduced the overall compute time.”
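Petersson's factor of 16 is what one would expect if the grid must be refined in all three spatial dimensions while the number of timesteps also grows linearly with frequency, so that the work scales roughly as the fourth power of frequency. The following C++ sketch works through that arithmetic under this idealized assumption (a fixed domain and material model). The function names are illustrative and are not part of SW4 or EQSIM, and the model ignores everything else that affects real run times.

```cpp
#include <cassert>

// Idealized cost model: work ~ f^4 (three space dimensions times time).
// Function names are illustrative only, not part of SW4 or EQSIM.

// Ratio of timestep counts when the maximum resolved frequency changes.
double timestep_factor(double f_old_hz, double f_new_hz) {
  assert(f_old_hz > 0.0 && f_new_hz > 0.0);
  return f_new_hz / f_old_hz;  // timesteps scale linearly with frequency
}

// Ratio of grid-point counts in a fixed 3D domain.
double grid_point_factor(double f_old_hz, double f_new_hz) {
  const double r = timestep_factor(f_old_hz, f_new_hz);
  return r * r * r;  // one factor per spatial dimension
}

// Ratio of total work: grid points times timesteps.
double work_factor(double f_old_hz, double f_new_hz) {
  return grid_point_factor(f_old_hz, f_new_hz) *
         timestep_factor(f_old_hz, f_new_hz);
}
```

Under this model, `work_factor(1.0, 2.0)` evaluates to 16, `work_factor(2.0, 10.0)` to 625, and `work_factor(1.0, 10.0)` to 10,000, which brackets the "around 1,000 times harder" estimate for a starting point of 1 to 2 Hertz.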

According to McCallen, one of the most significant improvements the ECP team made was completely modifying the code’s adaptive gridding. “We developed new, advanced numerical algorithms to enhance modeling efficiency and numerical rigor to geologically optimize computational grid refinement,” says McCallen. SW4 discretizes seismic wave equations on a Cartesian mesh below an automatically generated and enhanced curvilinear grid, which accurately represents surface topography. This feature allows the computational mesh to be made finer near the free surface, where more resolution is often needed to resolve short wavelengths in the solution. “We’ve also developed enhanced physics representations, such as improved fault rupture mechanics, to increase the realism of the simulations.” The computational domain now includes up to 500 billion grid points for models that contain fine-scale representations of soft near-surface sedimentary soils, such as those in the San Francisco Bay Area—a hot spot of earthquake activity.

The execution of these enhanced SW4 models on Frontier has become the first step in a sophisticated computational workflow for fault-to-structure simulations developed by the EQSIM team. The workflow requires a tremendous amount of computational power to execute, and exascale systems, such as Frontier, offer the speed and file storage size that enable this scientific exploration. However, SW4 was initially developed to run on CPU-based HPC machines, and almost all the computational power of Frontier, which combines CPUs and GPUs, resides in its GPUs. RAJA, a game-changing portability software, allows SW4 to run on both CPU and GPU architectures without having to develop and maintain multiple versions of the code. Thus, RAJA was crucial to enabling EQSIM to make the most of exascale capability from the outset.

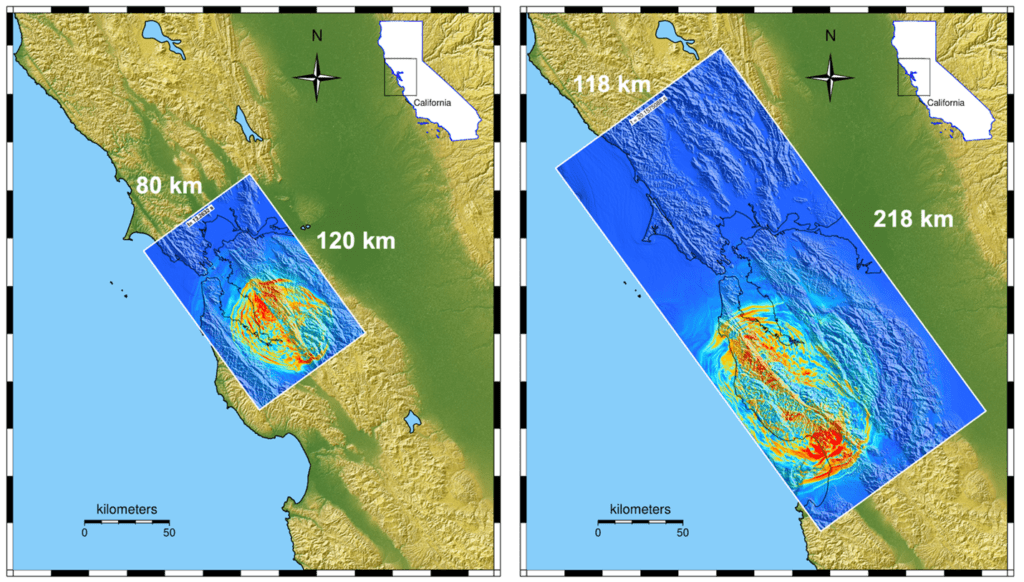

Figure 1. These ground motion simulations of (left) a 7-magnitude Hayward fault earthquake and (right) a 7.5-magnitude San Andreas fault earthquake were recently completed on Frontier. The images illustrate the major growth in computational domain size with earthquake magnitude and the ability to model much larger events as a result of exascale computing systems.

Performance No Matter the Machine

The Livermore-developed RAJA Portability Suite includes RAJA, a collection of C++ abstractions that enable developers to write single-source application code that can run on different architectures, and Umpire, which provides portable memory management. RAJA offers abstractions for parallel loop execution so that applications written in a native programming language can be portably compiled to different hardware architectures, eliminating the need to completely rewrite the code for new HPC systems as they come online. While the origin of these software products is rooted in supporting the mission of DOE’s National Nuclear Security Administration, ECP provided an opportunity to apply the technologies to a broader user base. The open-source suite is used in up to eight ECP projects, including application development projects like EQSIM.
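The single-source idea can be illustrated with a deliberately tiny C++ sketch: the loop body is written once as a lambda, and a policy type selects how the loop is executed. Everything in the `sketch` namespace is hypothetical and written for this illustration. It is not RAJA's API, although RAJA's own `RAJA::forall` likewise takes an execution policy as a template parameter. The OpenMP variant relies on a pragma that compilers ignore when OpenMP is not enabled, in which case the loop simply runs serially.

```cpp
#include <cassert>
#include <vector>

namespace sketch {  // hypothetical names for illustration; not RAJA's API

struct seq_policy {};  // plain serial loop
struct omp_policy {};  // OpenMP loop; runs serially if OpenMP is not enabled

// Serial backend: call the body once per index.
template <typename Body>
void forall(seq_policy, long begin, long end, Body body) {
  for (long i = begin; i < end; ++i) body(i);
}

// OpenMP backend: same interface, different execution mechanism.
template <typename Body>
void forall(omp_policy, long begin, long end, Body body) {
#pragma omp parallel for
  for (long i = begin; i < end; ++i) body(i);
}

}  // namespace sketch

// Single-source kernel: y[i] += a * x[i].
// Only the Policy template argument changes between backends.
template <typename Policy>
void axpy(double a, const std::vector<double>& x, std::vector<double>& y) {
  assert(x.size() == y.size());
  const double* xp = x.data();
  double* yp = y.data();
  sketch::forall(Policy{}, 0, static_cast<long>(y.size()),
                 [=](long i) { yp[i] += a * xp[i]; });
}
```

Calling `axpy<sketch::seq_policy>(a, x, y)` or `axpy<sketch::omp_policy>(a, x, y)` runs the same kernel source through either backend. A GPU backend would slot in as another policy type, which is the kind of retargeting the article attributes to RAJA.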

With RAJA, the code’s parallel algorithms are mapped onto the underlying execution mechanism by using existing parallel programming models, such as OpenMP. “The whole point behind RAJA is that once the code is ported to one machine, it can be ported almost immediately to another machine with a different architecture, and the code will run faster with minor adjustments,” says Livermore computer scientist Ramesh Pankajakshan, who led the RAJA implementation for EQSIM. As part of the effort, Pankajakshan worked directly with RAJA developers at Livermore to overcome performance challenges. As an example, when running on ever-more powerful machines, the compilers generally struggled with the complicated loops inherent in SW4. The RAJA development team partners with vendors to run RAJA performance tracking software that identifies any performance drop-off and helps determine its cause. “The RAJA Performance Suite contains many representative computational kernels from different applications and algorithms, including from several ECP applications, and compares the performance of RAJA to a native implementation of a particular programming model,” says Rich Hornung, a computational scientist in Livermore’s Center for Applied Scientific Computing who leads RAJA development. “Ramesh had done an initial performance analysis and discovered an issue in the SW4 kernels. We worked with the vendor using the Performance Suite to identify the source of the problem. Based on the vendor’s recommendation, we tried something different, and in doing so, we were able to achieve significantly improved performance.”

In this symbiotic relationship representative of ECP, the work done to improve the SW4 kernels also yielded benefits to other RAJA users. “We came up with a solution that we subsequently integrated into RAJA, and the adjustment resulted in all users seeing a performance improvement with their codes,” says Hornung. In addition, working with ECP applications has helped make the RAJA Portability Suite more robust. “Each of these applications stresses the software in different ways, so it hardens it for more use cases and gives us more options and capability.” ECP has also provided a collaborative space for teams working on similar products to build capability together and not reinvent the proverbial wheel. Hornung explains, “After the first three years of ECP, we became a joint project with the Kokkos development team. Together, we have developed an open-source utility library that we can both use and contribute to. That’s a benefit we get for free when we work more closely with one another. We don’t both need to build a solution to this one problem; we can share it.”

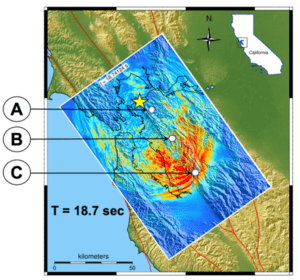

Figure 2a. EQSIM offers the ability to understand the site-to-site variability of building response during an earthquake. The differences in building response at the three sites are due to the radiation pattern of the seismic waves.

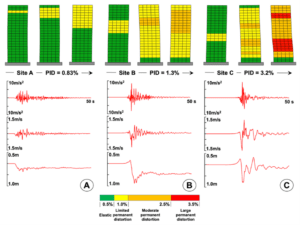

Figure 2b. The building damage prediction for the same building located at three different sites that are 1 kilometer off the Hayward fault (site A, site B, and site C in Figure 2a). The plots under the buildings show the ground motions from SW4 and the ground motion simulations at each site, indicating the differences in motion and the corresponding site-specific differences in building damage.

The Secret Sauce to Success

At the beginning of ECP, EQSIM could resolve motions of 2 Hertz in a material model where the shear speed exceeds 500 meters per second. Now, the team has achieved 10 Hertz in a model that resolves motion down to a shear speed of 140 meters per second—providing a far more realistic predictive tool for validating infrastructure risk. “Earthquake hazards and risks are a problem of national importance,” says McCallen. “The damage that could result from a large earthquake is a bigger societal problem than most people imagine.” Indeed, according to USGS and the Federal Emergency Management Agency, earthquakes cost the nation an estimated $14.7 billion annually in building damage and related losses. Says McCallen, “We’ve really pushed the envelope of what is possible computationally and made tremendous advancements. EQSIM has become a practical tool for realistically evaluating and planning for the effects of future earthquakes on critical infrastructure, providing a basis for objectively and reliably designing or retrofitting structures.”
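To put the two resolution figures above in the same units, the shortest wavelength a simulation must resolve is the slowest shear speed divided by the highest frequency. The short C++ sketch below applies that standard relation. The function names are illustrative, and `points_per_wavelength` is a hypothetical tuning parameter for which no SW4 value is implied.

```cpp
#include <cassert>

// Shortest wavelength the grid must resolve: slowest shear speed divided by
// the highest frequency of interest.
double min_wavelength_m(double min_shear_speed_m_per_s, double max_freq_hz) {
  assert(min_shear_speed_m_per_s > 0.0 && max_freq_hz > 0.0);
  return min_shear_speed_m_per_s / max_freq_hz;
}

// Grid spacing needed for a chosen number of grid points per wavelength.
// points_per_wavelength is a hypothetical parameter; no SW4 value is implied.
double grid_spacing_m(double min_shear_speed_m_per_s, double max_freq_hz,
                      double points_per_wavelength) {
  assert(points_per_wavelength > 0.0);
  return min_wavelength_m(min_shear_speed_m_per_s, max_freq_hz) /
         points_per_wavelength;
}
```

With the figures quoted above, `min_wavelength_m(500.0, 2.0)` gives 250 meters for the starting capability and `min_wavelength_m(140.0, 10.0)` gives 14 meters for the current one, so the shortest resolved wavelength is roughly 18 times smaller.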

Looking back, the EQSIM team agrees that the success of the effort would not have been possible without ECP’s unique funding paradigm and its focus on cross-discipline collaboration. “Incorporating RAJA into the project was instrumental to getting us to where we are today,” says Petersson. “ECP allowed us to involve experts in the necessary fields and work together. It’s impossible for a single person to know enough about everything to make advancements like this happen in such a relatively short amount of time. When you think about all the pieces that must be executed for a simulation to run, what we’ve managed to accomplish is incredible.” Pankajakshan adds, “At the cutting edge of computing capabilities, you need to talk to people and learn from their mistakes and experiences. Without ECP, all the different projects would have been working separately to make their applications and software work on the machines, and everyone would have been completely stovepiped. ECP’s collaborative environment was an invaluable benefit. It is essential that we find ways to maintain and extend the capabilities we’ve developed through ECP into the future.”

Since its inception in 2017, ECP has facilitated significant enhancements in algorithmic, software, and hardware capabilities, leading to an astonishing 3,500-fold increase in the computational performance of EQSIM. The improved simulations have been coupled with engineering models to illustrate the site-dependent distribution of building damage for representative buildings impacted by a 7-magnitude earthquake along the Hayward fault, and the work is garnering national and global attention as the team looks to further the application’s utility. McCallen says, “We are actively transitioning all the great developments and capabilities achieved through ECP to engineering practice by engaging and establishing partnerships with industry and other state and federal agency stakeholders. ECP’s integrated approach and collaborative environment was our ‘secret sauce’ in creating a viable and accurate tool for predicting earthquake hazards and risk.” And it’s a good thing too, because when it comes to earthquakes and their destructive potential, it’s not a matter of “if,” but “when.”

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. LLNL-TR-856928

This research was supported by the Exascale Computing Project (17-SC-20-SC), a collaborative effort of the U.S. Department of Energy Office of Science and the National Nuclear Security Administration.
