The goal of the ATDM OS/R project is to augment and optimize existing operating system and runtime (OS/R) components for production high-performance computing (HPC) systems. Ron Brightwell (Figure 1), project manager of the Exascale Computing Project’s (ECP’s) Sandia National Laboratory (Sandia) ATDM software ecosystem and scalable systems software manager at Sandia, notes that ATDM OS/R is not a software development effort. Instead, the project focuses on the measurement and analysis of portable, open-source, and vendor-supplied software to increase the functionality, performance, and scalability of supercomputer applications and runtime systems with an emphasis on the integration and consistency of the OS within the software ecosystem. According to Brightwell, “System resources should be managed in cooperation with applications and runtime systems to provide improved performance and resilience. The increasing complexity of HPC hardware and application software must be matched by corresponding increases in the capabilities of system management solutions. A consistent yet flexible software ecosystem is required—rather than separate, one-size-fits-all solutions.”

System resources should be managed in cooperation with applications and runtime systems to provide improved performance and resilience. The increasing complexity of HPC hardware and application software must be matched by corresponding increases in the capabilities of system management solutions. A consistent yet flexible software ecosystem is required—rather than separate, one-size-fits-all solutions. – Ron Brightwell, Sandia ATDM software ecosystem program manager and scalable systems software manager at Sandia.

Balancing Flexibility, Consistency, and Performance

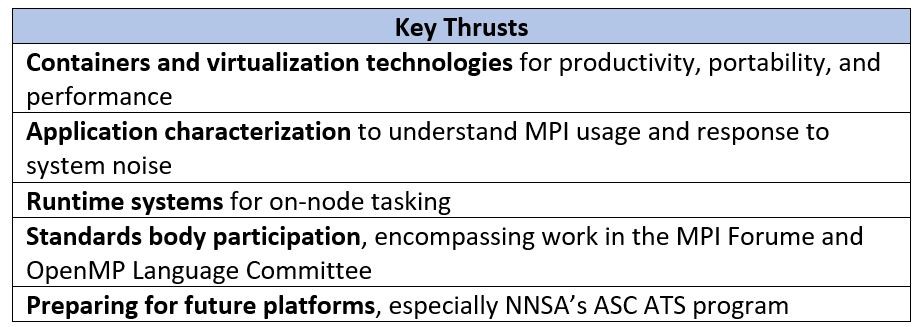

The key thrusts of the ATDM OS/R effort (shown below and described in the ATDM OS/R poster) represent an application-driven approach to meet current HPC and exascale needs while providing a path forward for future platforms, especially systems designed for the National Nuclear Security Administration’s (NNSA’s) Advanced Simulation and Computing (ASC) Advanced Technology Systems (ATS) program. The NNSA ASC program provides state-of-the-art computer simulation capabilities as part of NNSA’s mission to extend the lifetime of the nuclear weapons stockpile without underground testing.

Furthermore, the computing, modeling, and simulation tools developed in the ASC program are leveraged by a variety of national security programs. Funding from the ECP is used to similarly leverage this work to benefit the current exascale supercomputer efforts in the US Department of Energy (DOE) as well as the general HPC community. There is also a complementary relationship between the NNSA and DOE’s Advanced Scientific Computing Research program.

Technical Introduction

The multipronged ATDM OS/R effort is working with vendors, open-source projects, and standards bodies to ensure that operating environments (e.g., containers) and on-node runtime capabilities meet the needs of users on current and future supercomputers. This includes MPI analysis, a key performance analysis the results of which are essential to achieving the best communications performance in modern distributed and heterogenous distributed computing environments.

Brightwell observes, “We are looking at core container and virtualization technologies and how they affect OS overhead, the ability of these technologies to support the software package version needs for both legacy and leading-edge application executables, plus how containers and virtualization introduce OS noise, such as jitter, which can affect the performance of tightly coupled applications. For the runtime, we are analyzing low-level overhead, including that of the threading model. To assist our effort, the team has augmented a performance simulator so we can see and measure what changes in different runtime environments. We are also examining how the MPI overhead changes as distributed applications use lots of tasks and GPU devices, which are a hallmark of modern HPC architectures. The overarching idea is to minimize the OS and runtime overhead for HPC applications running at scale.”

Science and Application Drivers

The value to all the organizations funding this work is reflected in the successes and lessons learned as the ATDM OS/R team examines applications running in different computing environments and at increasingly greater scale. In addition to interacting with open-source developers and standards bodies, the team also presents their work at conferences and publishes their results in peer-reviewed articles.

Brightwell highlights MPI as one example, “MPI resource usage is a key question when running at scale. Does point-to-point MPI communication work best, or should users adapt their codes to use one-sided communications or the new MPI partition model to better map to the underlying resources? We also examine the efficacy of current approaches, such as the HPE Cray slingshot fabric. The result of these analyses is better science through better resource management.”

MPI resource usage is a key question when running at scale. Does point-to-point MPI communication work best, or should users adapt their codes to use one-sided communications or the new MPI partition model to better map to the underlying resources? We also examine the efficacy of current approaches, such as the HPE Cray slingshot fabric. The result of these analyses is better science through better resource management. – Ron Brightwell

Containers and Virtualization for Productivity, Portability, and Performance

Containers are being adopted across the HPC landscape.[1] They allow HPC users to scale from workstations to supercomputers inside a consistent, reproducible software environment because everything (except the OS kernel) is self-contained. Traceability is possible via build scripts.[2]

The NNSA’s mission to extend the lifetime of the nuclear stockpile without underground testing requires large-scale modeling and simulation.[3] The 2019 paper, “A Case for Portability and Reproducibility of HPC Containers,” notes, “The importance of any single simulation may not be known initially, and subsequent analysis, validation, and verification efforts may identify key results that need to be further analyzed or reproduced, potentially years later.” ECP and HPC scientists have the same needs. Containers present a software solution by encapsulating applications together with the entire supporting software environment in a containerized workflow that is both traceable and reproducible to meet the needs of the NNSA mission along with the needs of HPC users and other organizations.

Striking the balance between performance and portability can be challenging—especially in HPC environments that utilize accelerators (e.g., GPUs) to achieve high performance. Similarly, the interconnect technologies that combine many compute nodes in a distributed supercomputer can affect both performance and portability.

Even though work is ongoing—and likely will continue into the foreseeable future—the latest Sandia ATDM container approach is being deployed by vendors such as HPE Cray. The objective is to ensure that the container works and to solicit feedback from the vendor. According to Brightwell, the current (2022) results all look good from a GPU and MPI performance perspective. He notes that the team has iterated with the vendor. For example, based on vendor feedback, the team is working to reduce the size of the container by incorporating a minimal set of software. The goal of the minimal software set is to make the container as lightweight as possible. In discussing this example, Brightwell notes that users do not typically see this behind-the-scenes vendor activity. The ideal outcome is that users need only be concerned that their containers perform well and work reliably across many machines.

For more information, see the following paper and presentation to better understand the performance and portability trade-off concerns of containers:

- Beltre et al., “A Case for Portability and Reproducibility of HPC Containers,” CANOPIE‑11PC Workshop at SC19, IEEE, slides: https://www.osti.gov/servlets/purl/1643331.

Application Characterization to Understand MPI Usage and Response to System Noise

The Message Passing Interface (MPI) is the dominant programming model on leadership-class HPC systems. To better understand how MPI performs in modern HPC environments, the team uses a simulation approach to examine MPI runtime behavior. Brightwell observes, “Simulation allows us to carefully examine the relationship between MPI message matching performance and application time-to-solution. The result is better science through better resource management. The goal is better utilization and consistency.”

Simulation allows us to carefully examine the relationship between MPI message matching performance and application time-to-solution. The result is better science through better resource management. The goal is better utilization and consistency. – Ron Brightwell

Simulation, Queue Depth, and Message Matching

To gain better insight into the behavior of internal MPI mechanisms, the team extended the LoGOPSim network simulator to analyze the behavior of MPI message matching and queue depth.

MPI provides two main methods of point-to-point communication: (1) two-sided (matched) messages (e.g., MPI_Recv() and MPI_Send()) and (2) one-sided remote memory access (RMA) communication that does not require matching (e.g., MPI_Put() and MPI_Get()).

Message matching in two-sided MPI messages is defined as the deterministic, in-order completion of messages sent between two processes within a communication context (i.e., for a given instance of an MPI communicator). Each target process matches messages received from a source based on several criteria. Several factors can complicate this matching operation, including the following:[4]

- MPI message matching requires messages received on a given MPI communicator to be matched in order.

- Wildcards in receive requests (i.e., MPI_ANY_TAG and MPI_ANY_SOURCE) allow the receive request to match many messages.

- Provisions must be made because a message can sometimes arrive before the target has prepared a receive entry.

To account for this complexity, “MPI implementations need to keep either one large list for traversal for each communicator or two lists, one with exact matches and one with wildcards that must be searched for each incoming message.”[5] These search and traversal operations are obviously concerning from a performance perspective—especially when the list(s) become long owing to many queued messages.

The paper, “Using Simulation to Examine the Effect of MPI Message Matching Costs on Application Performance,” examined these performance implications: “We use trace-based simulation to understand how the cost of message matching affects workload performance. Simulation gives us fine-grained control over the cost of message matching and allows for straightforward experimentation with different match queue models. It also allows us to maintain detailed statistics without perturbing the workload under test.”[6]

Detailed results and analysis are reported in the paper. Key takeaways for the HPC audience include the following:

- Current workloads are unlikely to be significantly affected by the cost of MPI message matching unless matching becomes much slower than current mechanisms or match queues become much longer than observed in current applications.

- Advances in match queue design that provide sublinear performance as a function of queue length are unlikely to provide significant performance benefits unless match queue lengths dramatically increase.

- Although multithreaded MPI has the potential to significantly increase message matching costs, current forecasts suggest that if locking costs can be managed, the impact of message matching on execution time is likely to remain modest.

- Should match queue performance degrade in the future, then the paper provides guidance on how long the mean time per match attempt may be without significantly affecting application performance.

Current workloads are unlikely to be significantly affected by the cost of MPI message matching unless matching gets much slower than current mechanisms or match queues get much longer than observed in current applications. – Levy et al., “Using Simulation to Examine the Effect of MPI Message Matching Costs on Application Performance.”[7]

Multiple Sources of Data Including Real Application Traces are Required to Analyze MPI Behavior

The team also examines traces from real applications to evaluate their MPI usage, optimize where possible, and influence vendor hardware and software design decisions. The data and analysis are reported in detail in the paper, “Hardware MPI message matching. Insights into MPI matching behavior to inform design.”

A key takeaway is stated in the conclusion, “relying solely on one single source does not provide a sufficient understanding of application behavior in order to design efficient, performant hardware.”

For more information, see the referenced papers on the importance of MPI simulations with application trace data.

Runtime Systems for On-Node Tasking

The prevalence of many-core processors and massively parallel accelerators has motivated the refactoring and development of heavily multithreaded applications. During its long history, MPI implementations have traditionally not provided highly tuned support for multithreaded MPI communication.[8] Version 1.0 of the MPI specification was released in 1994[9], which was prior to the advent of many-core processors. To assist and provide feedback to developers and vendors, the Sandia ATDM team is looking at the impact of heavy multithreading on MPI performance and resource utilization.

The authors state in their “Hardware MPI message matching. Insights into MPI matching behavior” paper, “The MPI multithreading model has been historically difficult to optimize; the interface that it provides for threads was designed as a process-level interface. This model has led to implementations that treat function calls as critical regions and protect them with locks to avoid race conditions.”

The resulting performance boost is significant, and the authors report improvements of up to a 12× reduction in wait time for a send operation completion against a 2019 state-of-the-art multithreaded and optimized Open MPI implementation on an HPE Cray XC40 with an Aries network. Additional results show improvements in a working nuclear reactor physics, neutron-transport, proxy-application, which achieved up to a 26.1% improvement in communication time and up to a 4.8% improvement in runtime over the best performing MPI communication mode—single-threaded MPI.[10]

Brightwell uses Kokkos as current work progresses, “We want to ensure that the heavy multithreading in the Kokkos runtime is not adversely affecting MPI communications performance, locking, and memory utilization. We want to ensure that the software and hardware are doing the right thing. Are there any red flags?”

Along with Kokkos, team is also looking for the most common functionality across all vendors—including GPU vendors. For example, what is the memory model that makes sense?

Looking to the Future—Standards Body Participation

The team publishes papers and provides feedback to help vendors and software developers improve their MPI implementations. To ensure continued benefit to the NNSA and HPC communities, the team is actively participating in the MPI standards, MPI Forum, and the OpenMP language committees.

Brightwell highlights their successful contribution of the MPI partition communication model to the MPI standard. This communications model originated at Sandia, but the team used ECP funding to connect the dots. Regarding message matching, he notes that proposals are in progress at the MPI Forum (MPI’s standardization body) that could enable more parallelism in the matching process on a per-communicator basis given the guarantee from applications that they will not use wildcards in matching. However, even if these proposals become standardized, there will always exist some codes in which serialization will be required from a software queue point of view. Hardware can avoid this bottleneck because it can do many parallel searches and then decide on the correct ordered result at line rate.[11]

Current work includes the following:

- Heterogenous architecture support: the team is working with vendors on the partition model to enable more efficient GPU-MPI communications. Brightwell notes, “The team is working with vendors to find the best fit and hardware support.”

- Future platforms: The team is also preparing for future platforms, especially NNSA’s ASC ATS.

For more information, see the following papers on the work with the standards committees.

- R. de Supinski et al., “The Ongoing Evolution of OpenMP,” Proceedings of the IEEE 106 (11), November 2018.

- E. Grant et al., “Finepoints: Partitioned Multithreaded MPI Communication,” ISC High Performance 2019, Springer.

Summary (Key Messages about Recent Work)

The ubiquity of MPI in HPC and the dependence of many applications on communication performance warrants detailed studies and analysis to ensure that MPI does not restrict the evolving software approaches, such as heavy multithreading. Similarly, the impact of new software approaches, such as heavily multithreaded runtimes, must be understood and accounted for in MPI communications. The Sandia ATDM team is focused on gathering the data and performing analysis.

This work, along with containerizing the workflows, help match the capabilities of the system management solution to the increasing complexity of HPC hardware and application software.

Feedback to software developers and vendors provide immediate benefits to the NNSA and HPC communities. Long term, active participation by the Sandia ATDM team in the standards bodies and user forums means that the MPI and runtime communities, such as Kokkos and OpenMP, can adapt the existing standards and codes to support new machines and software approaches.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the US Department of Energy’s Office of Science and the National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national laboratories and commercial organizations.

[1] https://containerjournal.com/topics/container-management/containers-hpc-mutually-beneficial

[2] https://conferences.computer.org/sc19w/2019/pdfs/CANOPIE-HPC2019-7nd7J7oXBlGtzeJIHi79mM/3AyZkyZVlhldzPU6UEo655/3OqM2Lkt9DqiE2sDu1jvaS.pdf

[3] V. Reis, R. Hanrahan, and K. Levedahl, “The big science of stockpile stewardship,” in American Institute of Physics Conference Proceedings 1898, no. 1. (2017): 030003.

[4] https://onlinelibrary.wiley.com/doi/abs/10.1002/cpe.5150

[5] See note 4 above.

[6] https://www.sciencedirect.com/science/article/abs/pii/S0167819118303272

[7] See note 4 above.

[8] https://www.mcs.anl.gov/uploads/cels/papers/P1501.pdf

[9] https://en.wikipedia.org/wiki/Message_Passing_Interface

[10] https://www.researchgate.net/publication/333628811_Finepoints_Partitioned_Multithreaded_MPI_Communication

[11] See note 4 above.