E4S Deployments Boost Industry’s Acceptance and Use of Accelerators

Last November, during the SC23 conference held in Denver, Colorado, industry leaders from many organizations reflected on successful deployments of the Exascale Computing Project’s (ECP’s) Extreme-Scale Scientific Software Stack (E4S). In particular, ECP Industry and Agency Council (IAC) representatives, participating on an industry spokesperson panel, highlighted how E4S deployments at Pratt & Whitney, ExxonMobil, TAE Technologies, and GE Aerospace have reduced the perceived risk of adopting accelerator-enhanced architecture by industry. The impact has been immediate as demonstrated by changes in the planning for and use of accelerators by these organizations in their high-performance computing (HPC) environments. Similar sentiments were mirrored by a wide range of academics, business executives, government agencies, cloud providers, and independent software vendors participating at SC23.

The ability to deploy ECP software via E4S (which is freely available at https://e4s.io) was praised by users, recognizing the ability to deploy ECP software for “all-scale computing,” meaning the same ECP software can be used to run small experiments on student laptops; large and ensemble production tera- and petascale workloads on-premises and in the cloud; and when desired, extreme scale workloads on exascale, leadership-class supercomputers. The benefits of all-scale computing software transcend even the performance promise of exascale supercomputers as organizations can make significant contributions with real implications on their existing systems, without the need to run on an exascale platform. One such example is provided by the groundbreaking work by NOAA to harness the power of exascale software for faster and more accurate warnings of dangerous weather conditions.

SC23 industry panelist, Pete Bradley (principal fellow, Digital Tools and Data Science at Pratt & Whitney) said, “Exascale computing can allow us to understand complex phenomena that were previously out of reach, and we can then integrate those models to deliver capabilities beyond anything the world has seen.”

The Impacts of All-Scale Computing are Significant

Comments by industry, cloud, and academic leaders made during the multiday SC23 conference reinforce the benefits of ECP’s E4S software stack. Benefits include:

- Providing a fundamental contribution that lowers the barriers and reduces the time spent on developing, deploying, and using HPC platforms to speed up scientific endeavors.

- De-risking the decision to use GPU accelerators by proving that accelerators are productive across a wide variety of science and HPC applications.

- Providing an abundance of petascale computing by giving users (and vendor customers) a choice of platform so they can run at all scales on diverse architectures from multiple vendors.

- Putting industry users on the commodity power/performance curve to leverage the efficiency of accelerators to unlock significant hardware performance and efficiency boosts (up to 100×).

- Providing a robust runtime environment that supports many platforms through best practices and modern development techniques to prevent code failures. These benefits—including software component compatibility within the E4S stack—are expected to continue far into the future, so long as funding is provided for continued development of best practices—and very importantly—continuous integration and continuous deployment (CI/CD).

A Deployable Base for Technology Competitiveness

The seven-year, $1.8 billion ECP effort provided the foundation for these deployment successes by delivering specific applications and software products as assessed by independent figure-of-merit (FOM) outcomes at DOE computing facilities. These products—based on modern software best practices—benefit the global HPC community, including commercial users, due to generous, open-source licensing. (See the “Distribution” section on the E4S website and the TAE fusion reactor use case discussed in the Deploy, Modify, Enable section below). Any of these software components and applications can be easily deployed for free—without software bloat—via E4S.

For industrial HPC users, and ultimately for the entire HPC ecosystem, E4S deployments provide a base for U.S. technology competitiveness and exascale capability. This capability gives organizations a path forward to investigate scientific and technical challenges that were too computationally intensive and costly to pursue previously, thus providing an avenue to advance engineering capabilities well beyond the current state of the art.

Across the board comments by U.S. business executives; leaders at government agencies; numerous independent software, ISP, and hardware vendors; and other SC23 attendees, indicate that many organizations are leveraging the platform-agnostic ECP software capabilities to help build and maintain their global technology leadership.

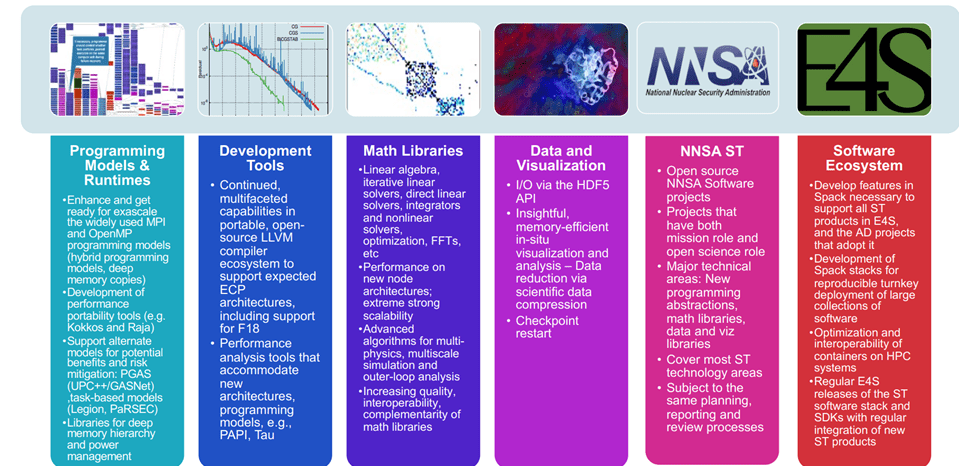

Figure 1. ECP Software Technology includes six technical areas that may be particularly suited to industry applications.

Along with targeted application development, a portfolio of ECP Software Technology (ST) products encapsulates the entire HPC software stack and toolset, including development tools, math libraries, programming models and runtimes, and the catch-all of data and visualization.

E4S is a Hallmark Accomplishment for Easy Deployment and More

Great software cannot be great unless it is made available in a form that can be easily deployed and installed, so users can run efficiently on their specific hardware. This includes support for GPU accelerators from multiple vendors. For this reason, E4S conveniently makes ECP software available for cloud and on-premises deployments as containers and binary distributions, as well as from source builds using the SPACK package manager. This multifaceted deployment capability lets user implementation teams deploy only the software they want—in a form ready for immediate deployment or custom-built specifically for their hardware configuration.

Leveraging this flexibility for their cloud customers, Michael Aronsen (technical vice president, Adaptive Computing) observed, “There have not been any issues with E4S from our point of view. We have optimized it for every cloud provider, and E4S can now be launched from our On-Demand Data Center solution into AWS, GCP, Azure, and OCI with just a few clicks.”

A Fundamental Contribution

Larry Kaplan (senior distinguished technologist, Hewlett Packard Enterprise) used the phrase “fundamental contributions” to summarize the value of E4S. He said, “E4S provides fundamental contributions to the HPC community by conveniently packaging and distributing open-source software to a broad set of users. These packages strive to meet the needs of customers running CPU and GPU systems with diverse architectures from multiple vendors. This includes builds that use several different programming environments, so customers can exercise choice and run at all scales. HPE worked with E4S to secure a system for running the HPE Cray Programming Environment as part of our engagement with the project to enable the use of modern development techniques, including CI/CD, to create a robust runtime environment now and in the future.”

Critical Infrastructure

Underscoring the direct (and detailed) impact on HPC computing, Sunita Chandrasekaran (associate professor, Department of Computer & Information Sciences, University of Delaware) pointed out, “LLVM-OpenMP offloading is one of the critical compilers used to migrate ECP applications such as QMCPACK, among others, to Frontier and other systems.” Frontier was highlighted as one of “the biggest science breakthroughs of 2023” by the journal Science.

Chandrasekaran continued, “We leveraged E4S to set up CI/CD pipelines to stress test LLVM-OpenMP, offloading compiler implementations on different GPU targets such that we were able to identify compiler/runtime bugs ahead of time and not wait till the bugs landed in upstream LLVM. This sort of rigorous regression testing required an experimental setup that E4S helped provide. We have so far tied up ECP SOLLVE validation and verification tests, HecBench, AMD smoke tests, and SPEChpc2021 benchmark suite into E4S.” For more information about these and other verification tests, see https://gitlab.e4s.io/uo-public.

An Enabling Infrastructure for HPC in the Cloud

The versatility and robustness of E4S is demonstrated in how this deployment capability enables accelerated science on systems ranging from laptops to leadership-class data centers as well as in the cloud. “HPC is an essential tool for scientists working to solve the world’s most challenging problems like fast-tracking drug discovery and uncovering genomic insights,” said Brendan “Boof” Bouffler (head of developer relations for HPC Engineering, AWS). “E4S serves an important role in the HPC community by helping to lower the barriers for HPC users,” he said, emphasizing how it reduces “time spent on developing, deploying, and using HPC to speed up scientific outcomes.”

Bill Magro (chief technologist for High Performance Computing, Google) reflected on the importance of all-scale computing capability and energy efficiency for HPC in the cloud. He said, “E4S provides a collection of open-source software packages. The packages are distributed in a portable way that supports many architectures, including a range of both CPU and GPU systems. E4S provides a valuable software stack for users to exploit the efficiency of their systems at a wide range of scales.”

Google has already played a leading role in providing a balanced and performant HPC scale-out cloud environment, which includes giving Google Cloud users access to the Distributed Asynchronous Object Store (DAOS). DAOS is a revolutionary new I/O system that will be used on the Aurora exascale supercomputer to support data intensive workloads.[1] Google now includes DAOS in the Google Cloud HPC Toolkit. According to Google, its inclusion reflects part of a greater opportunity for Google Cloud to address compute, communications, and even more storage intensive HPC workloads.[2]

Deploy, Modify, Enable

During the SC23 conference, TAE highlighted their hybrid PIC (particle-in-cell) implementation based on WarpX. TAE Technologies, Inc., is a private fusion company working towards building a viable, commercial fusion power plant as a source of abundant clean energy. Over its 25-year history, the company has developed progressively more advanced, larger fusion devices. In doing so, the science required to model these reactors has become ever more complicated. The challenge facing TAE is that as their machines get bigger, the physics issues become far more complex. To gain insight into these larger machines, TAE had to adapt their software models to account for the greater complexity and run on more capable HPC platforms. Rather than create their own software from scratch to address a myriad of scalability and complexity issues, TAE engineers saved both time and money by adapting their software models to the publicly released ECP WarpX electromagnetic and electrostatic PIC code—the 2022 ACM Gordon Bell Prize–winning application.

The Benefit of Modularity

TAE’s efforts benefitted from the ECP focused development of the application, as Roelof Groenewald (computational scientist, TAE) noted, “The care that the WarpX developers took to modularize the code allows us to build a very different model to simulate next-generation fusion experiments. Because WarpX scales well, our simulations built on WarpX also scale well.”[3]

The Importance of the Open-Source License

The TAE effort also highlighted the value of the open-source license model used by many ECP products. Groenewald said, “One of the benefits to us, which is true of a lot of ECP products, is that the code is completely open source. The code is easy to get started with, free to download, and it’s free to modify, which is a critical thing for our use case.”

Extending Capability beyond Exascale Supercomputing

A popular benefit of deploying exascale-capable software noted by many at SC23 is that the pursuit of exascale is as important as exascale itself because it provides an abundance of petascale. Rick Arthur (senior principal engineer for Computational Methods, GE Aerospace) observed, “The pursuit of exascale has been as important as exascale itself, in that it provides an abundance of petascale. Problems requiring the full machine size are rare (and currently prohibitively costly to create, verify, and interpret), but that abundance affords feasibility to run exploratory ensembles more freely at petascale, which is much more approachable for many in industry.” In addition, Arthur noted that the relationships, technology advancement, and the learnings from involvement with ECP has helped inform the company’s internal HPC investments and readiness. When it comes to expanding the capabilities of what can be done computationally, Arthur stated, “DOE has been the leader, and we are grateful to follow.”

The belief is that yes, the extreme scalability and efficiency of ECP exascale-capable software offers a tremendous advantage because it gives scientists and engineers the ability to investigate phenomena that were previously out of reach on smaller machines. For many commercial engineering projects though, the real value resides in the combined portability and scalability of ECP exascale-capable software because it gives scientists and engineers the ability to run ensembles of smaller simulations on existing tera- and petascale systems to examine and visualize phenomena over longer periods of time and over a greater range of initial conditions. This capability provides greater engineering insight that helps deliver better products.

De-risking Accelerator Technology

A key takeaway from the industry panel discussion was that ECP has de-risked a move to GPUs for many organizations. Users attributed much of this uplift to support for key tools and libraries such as SPACK, OpenMPI, HDF5, and zfp. Mike Townsley (senior principal for HPC, ExxonMobil) said, “[The] ECP effort proved GPU accelerators (and their software ecosystems) were productive across a wide variety of science [applications], de-risking our decision.”

Digital Twins and Multidisciplinary Simulation

Many voices articulated the same belief in the importance of the exascale-capable visualization tools that can be deployed with E4S. These tools render the data from large, complex, multidisciplinary simulations (e.g., digital twins)—even when run on exascale supercomputers.

The ability to visualize the phenomena that can be replicated by these models helps engineers understand the interactions between an engineering component and its environment. Engineers can then identify any critical interactions and their roles in affecting a component’s integrity and potential lifetime. It was also noted during the industry spokesperson panel discussion that artificial intelligence (AI)-based “physics-informed” models, rather than experience-based ones, are being investigated to accelerate future component design and product commercialization.

Pratt & Whitney’s Bradley highlighted the utility of exascale for simulating intricate combustion physics related to current and next-generation gas turbine engines at the company. “We are undergoing a digital transformation,” said Bradley. “Pratt & Whitney is building a model-based enterprise that connects every aspect of our product lifecycle from customer requirements to preliminary design to detailed designs, manufacturing, and sustainment. Advanced modeling helps us to build digital twins that allow us to optimize products virtually before we bend a single piece of metal. This will deliver new advances for our customers in performance, fuel burn, and emissions, and shorten the time it takes to go from concept to production.”

Putting HPC on the Commodity Hardware Power/Performance Curve

Mike Heroux, project leader and director of ECP ST, points to a bright future for organizations that leverage the success of ECP with E4S deployments because “ECP gave users the ability to manage within current power budgets by leveraging the efficiency curve of accelerators. Tremendous performance and efficiency boosts (up to 100×) make the ECP legacy one that can unlock the potential of accelerators available to everyone. This puts HPC on the commodity power/performance curve through accelerated computing. It benefits workloads that users and industry care about.”

A “Shared Fate” Vision is Essential to the Future of HPC

Heroux observed that current academic and industry deployments highlight the benefits that sprang from the ECP effort to create robust software for accelerators used in exascale systems, “The ECP ‘shared fate’ software development approach benefits applications at all scales.”

Adoption of a shared fate vision is necessary because of the rapid rate of change in computer hardware and the advent of recent technologies, such as AI. The rapidity of technical innovation, although highly beneficial, has made it impossible to sustain a patchwork legacy of ad hoc approaches to software development and interoperability. These ad hoc approaches have proven wasteful because individual groups duplicate resources to develop similar yet incompatible software solutions to the same problems—thereby “reinventing the wheel.” Furthermore, it is not practical to provide every software project with access to the latest hardware for software development, nor is it practical to provide developers with continued and frequent access required for compatibility and performance testing. For these and other reasons, sustained investment in CI/CD is both necessary and essential to preserving software interoperability and portability.

Spreading the Word

Getting the word out is key to bringing users and funding agencies together to benefit from a shared fate created by the need for all-scale, accelerated computing. An expanding user base that is willing to highlight their successful deployments is essential to obtaining continued funding for E4S outreach efforts and to preserve the essential software testing and verification work.

CI/CD is the Foundation Behind E4S and ECP Software

Sameer Shende (research professor and director of the Performance Research Laboratory, University of Oregon, Oregon Advanced Computing Institute for Science and Society) agrees with the highly visible user benefits of E4S, from easy deployments to education and community outreach, but he noted that ensuring software component interoperability and the robust, correct operation of software components on many hardware platforms can only be achieved through an extensive behind-the-scenes CI effort. The challenge is that while CI is the path to our shared fate future for the HPC community, it requires a significant time and monetary investment.

Two Ways that E4S Eliminates Guess Work

E4S software can be freely downloaded for easy deployment. The software tools and libraries support numerous multiarchitecture and multivendor implementations, allowing users to evaluate the performance of E4S on diverse hardware. The mission requirement for ECP—namely, to create software that will run on the Department of Energy’s exascale supercomputers—ensures that E4S software components can run at scale on even the largest systems and cloud instances yet still run on student laptops.

In addition to easy deployment and evaluation, Shende pointed out that HPC groups and organizations can contact him via the E4S project to gain access to the Frank cluster (short for Frankenstein). This cluster is used for E4S verification and can provide access to recent hardware not covered under nondisclosure agreements.

Summary

E4S deployments are now recognized by many in industry as a path forward for competitiveness, enabling all-scale computing and extending capability to the broader HPC community. Preserving E4S and all its benefits is critically important to future technology innovation and scientific discovery.

Keeping software performant and fresh is essential, but it does require funding. Ensuring a robust, portable, interoperable, all-scale laptop to exascale infrastructure is not free. CI/CD is particularly machine intensive to ensure platform and component compatibility. Clearly, CI/CD is one of the most effective investments that can preserve software interoperability, performance, and portability. To preserve these benefits, the message to users is clear: participate, advocate, and use it, or risk losing it.

Rob Farber is a global technology consultant and author with an extensive background in HPC and machine-learning technology.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

[1] https://www.alcf.anl.gov/aurora

[2] https://www.intel.com/content/www/us/en/high-performance-computing/resources/daos-google-cloud-performant-hpc-white-paper.html

[3] https://tae.com/a-hybrid-pic-implementation-in-warpx/

Topics: Chandrasekaran Shende Townsley Arthur Groenewald Google Magro Bouffler AWS Adaptive Computing Aronsen Kaplan Bradley E4S Pratt & Whitney Heroux GE Aerospace ExxonMobil TAE HPE