By Rob Farber, contributing author

The quantum Monte Carlo methods package (QMCPACK) is a simulation code that can produce ab initio electronic structure solutions for a broad range of materials with an accuracy unreachable by other methods and packages. This parallel software, accelerated for many-core CPUs and GPUs, helps overcome the time-to-solution challenges that quantum Monte Carlo (QMC) methods face. The result opens many new research opportunities, thanks to the software’s wide applicability, its high accuracy, and its unique ability to improve the accuracy of solutions, even to the point of approaching exact results for some systems.[1] Portability and exascale-capable performance make QMCPACK a valuable resource to the scientific community for electronic structure modeling and cross-validation.

An Exemplar Use Case in Using Good Software Practices to Create a Performance-Portable Framework from Academic Code

QMCPACK is a use case worthy of detailed study by other high-performance computing (HPC) software projects.

The starting point for QMCPACK is a common one in the HPC community: the team began with a working, research-grade, academic parallel code. In this case, the code had been optimized for Intel Xeon Phi many-core coprocessors. To date, the QMCPACK team has created a performance-portable framework that runs well on systems from laptops to leadership-class supercomputers. The framework is also expected to run well on exascale and future post-exascale systems.

While creating an exemplar performance-portable framework, the QMCPACK team has been open about its thought process and development effort, including the following:

- The software selection process

- The collaborative use of Exascale Computing Project (ECP) resources by many vendors and open-source teams to speed the maturation of Open Multi-Processing (OpenMP) Offload implementations for CPUs and GPUs

- The adoption of ECP continuous integration (CI) to ensure program correctness on the many platforms supported by QMCPACK[2]

- The adoption of the ECP-standard Spack package manager and build process to support as many platforms as possible without overburdening the developers

The QMCPACK use case highlights the value of government support for the ECP effort and the value delivered by various ECP projects to bring to fruition a sustainable, cross-platform, and performant software ecosystem for the nation’s exascale systems.

Scientific Significance

In the past, the fundamental challenges and high computational cost of QMC methods prevented their mass adoption. Consequently, scientists relied on approximate methods, such as the popular density-functional theory (DFT). They also used more computationally expensive electronic structure methods, such as the GW approximation and dynamical mean-field theory. The accuracy and applicability of these approximations vary widely across materials and chemical systems, which limits the reliability of the solutions found by the packages that use them. Obtaining systematically improvable and increasingly accurate results for general systems represents a major challenge.[3] Conversely, quantum chemistry methods that are accurate and systematically improvable scale poorly with system size. They are not well developed for periodic systems with hundreds of electrons and are not yet suitable for describing metals.[4]

To address these issues, the QMCPACK methods use stochastic sampling to solve the many-body Schrödinger equation.[5] Paul Kent (Figure 1) is a distinguished member of R&D staff at Oak Ridge National Laboratory (ORNL) and PI of the ECP’s QMCPACK project. He explains, “QMC methods are not magically accurate because they do make a few approximations in solving the Schrödinger equation.”[6] However, unlike other approaches, these approximations can be systematically improved, and in some cases they can be improved sufficiently to give nearly exact results.[7] The QMCPACK methods also scale to large atomic systems with thousands of electrons: “By using statistical methods to solve the Schrödinger equation, large and complex systems can be studied with unprecedented accuracy, even systems in which other electronic structure methods have difficulty.”[8]
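To make “stochastic sampling” concrete, here is a toy variational Monte Carlo (VMC) calculation for the hydrogen atom, written in Python purely for illustration. It is not QMCPACK code. The trial wavefunction exp(-alpha*r), the step size, and the sample counts are assumptions chosen for the example. A random walker samples |psi|^2 with the Metropolis algorithm, and the energy estimate is the average “local energy” over the samples. By the variational principle, any trial wavefunction gives an energy at or above the exact ground-state value of -0.5 hartree. Improving the trial function (here, moving alpha toward 1) systematically lowers both the estimate and its statistical noise.

```python
import math
import random


def local_energy(r: float, alpha: float) -> float:
    """E_L = (H psi) / psi for psi(r) = exp(-alpha * r), hydrogen atom in atomic units."""
    return -0.5 * alpha * alpha + (alpha - 1.0) / r


def vmc_energy(alpha: float, n_steps: int = 100000, n_equil: int = 5000,
               step: float = 1.0, seed: int = 1) -> float:
    """Estimate <H> by Metropolis sampling of |psi|^2 (variational Monte Carlo)."""
    rng = random.Random(seed)
    pos = [0.5, 0.5, 0.5]                      # arbitrary starting point for the electron
    r = math.sqrt(sum(x * x for x in pos))
    total = 0.0
    for i in range(n_equil + n_steps):
        # Symmetric random-walk proposal in 3D.
        trial = [x + step * (rng.random() - 0.5) for x in pos]
        r_trial = math.sqrt(sum(x * x for x in trial))
        # Metropolis test: |psi(trial)|^2 / |psi(pos)|^2 = exp(-2 * alpha * (r_trial - r)).
        if rng.random() < math.exp(-2.0 * alpha * (r_trial - r)):
            pos, r = trial, r_trial
        if i >= n_equil:                       # accumulate only after equilibration
            total += local_energy(r, alpha)
    return total / n_steps
```

With alpha = 1 the trial function is exact, so every sample has local energy -0.5 and the variance vanishes. With alpha = 0.8 the estimate lands near the analytic value alpha^2/2 - alpha = -0.48, which lies above the exact energy.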

As with any other quantum electronic structure method, the QMCPACK methods can be directly applied to materials and chemical problems of interest and can provide unprecedented accuracy and broad support for a wide range of systems. Examples of such applications include semiconductors, metals, and a variety of molecular systems.[9] This capability creates a wealth of new scientific opportunities, as Kent explains, “There are many examples of materials for which the results are very sensitive to the accuracy of the atomic models. Examples include 2D nano materials (owing to weak van der Waals force), quantum materials, and lithium-ion battery materials. Small differences in accuracy can have big effects.”


Most significantly, QMC methods enable cross-validation of electronic structure schemes to challenge chemical, physical, and materials problems and to help guide improvements in the methodology.[10] Looking to the future, Kent notes, “Current electronic structure databases are based on lower-fidelity results, such as the approximate-in-practice DFT. The hope is to create AI systems models based on high accuracy, guaranteed accuracy QMC results.”


QMCPACK is designed to be accessible and usable by most scientists. It can be used with many packages, such as RMG-DFT, Quantum ESPRESSO, Quantum Package, Qbox, PySCF, and GAMESS. Essentially, the QMC methods further improve the results of the methods implemented in these codes. Additional codes, such as NWChem, can be interfaced straightforwardly. Furthermore, the QMCPACK methods use the parallelism and performance of both CPU and GPU devices. Accordingly, QMCPACK runs well on systems ranging from laptops and workstations to leadership-class supercomputers.[11] The software is open source and can be downloaded from GitHub.

Primary support for QMCPACK comes from the US Department of Energy (DOE), Office of Science, Basic Energy Sciences, Materials Sciences and Engineering Division, as part of the Computational Materials Sciences Program and the Center for Predictive Simulation of Functional Materials, and from the ECP, a collaboration between the DOE Office of Science and the National Nuclear Security Administration.

ECP Communications Specialist Scott Gibson featured Paul Kent and QMCPACK on an episode of the Let’s Talk Exascale podcast, “Enabling Highly Accurate and Reliable Predictions of the Basic Properties of Materials.”

Science Goals

“The goal of QMCPACK,” Kent notes, “is to come up with a performance-portable QMC code base to support material science. This code base addresses a gap in the HPC and exascale software capabilities represented by existing software packages. All these packages attempt to solve the Schrödinger equation as accurately as possible. QMCPACK helps fill this gap by giving scientists the ability to find solutions for molecules to general materials that are not possible with existing methods and software. For example, the highest-level quantum chemistry approaches cannot address metals.” Kent continues, “The QMC approach provides accurate and testable methods to find solutions, and the few approximations made can be tested and refined to improve accuracy if needed. In other words, the methods can provide guarantees in accuracy of results. Any extant method cannot provide these guarantees.”


The value of any HPC solution resides in the usefulness of the results it produces. Although QMC can be applied directly, the team also intends to develop an open-access database of highly accurate materials properties for further research. This database could be used for training AI models for much broader application and at low cost.

“All this sounds great,” Kent continues, “but the challenge is that solving the many-body Schrödinger equation is known to scale exponentially and even to be NP-hard. This means that to achieve high accuracy, at some point users must pay for the computational burdens, hence the need for HPC and exascale supercomputers. The value of the QMCPACK effort starts with focusing on accuracy today and leveraging the growth in computing power tomorrow.”


Even now, in the pre-exascale era, the benefits of the QMC approach are already manifest. “For example,” Kent explains, “we can support systems that were out of reach even a few years ago, such as in a 2022 study of the anti-ferromagnetic topological insulator MnBi2Te4 that plays host to numerous exotic quantum states. Studying these kinds of materials required the addition of spin-orbit interaction, which was recently added.” This extension opens access to materials composed of elements from nearly the entire periodic table.

Technical Approach

The QMCPACK project has been a design-intensive effort centered on good software practices.

First, the team needed to build a performance-portable software framework that could use CPU and GPU parallelism and run on everything from a student’s laptop to exascale HPC systems. The starting point was a very efficient, well-vectorized many-core implementation optimized for Intel Xeon Phi (Knights Landing). The team also had a limited-capability CUDA implementation. This meant that the team had already identified the compute-intensive sections of the code.

The team then needed to create a new design that met all the performance and portability goals from the beginning. This in-depth process required understanding how to handle data ownership and data transfers. It also required understanding how to map QMC-specific computational issues so that the Monte Carlo process could run at high efficiency on hybrid architectures. “Importantly,” Kent notes, “we used an abstraction API to push the implementation down to as low a level as possible and used dynamic dispatch for asynchronous operations and flexibility of execution. This design abstraction, and the computer language and tools used to implement it, had to support CPUs and GPUs from many vendors and support concurrent CPU and GPU execution in a heterogeneous environment.”


The team is primarily using OpenMP Offload, but the design is general and flexible enough to also support proprietary implementations of key kernels and to enable migration to future technologies. Although the OpenMP standard is expressive enough for QMCPACK’s needs, it does not impose performance requirements. Furthermore, the existing vendor and open-source compiler implementations are still new and incomplete. Consequently, the team uses CI extensively to identify correctness and performance problems. The team reports that compiler teams are actively working to address these issues. In fact, many compilers are close to delivering the required behavior and performance. Kent notes, “The open-source LLVM compiler that targets NVIDIA GPUs already performs quite well and is usable for science production. Furthermore, the QMCPACK team has forged collaborations with other ECP projects, such as SOLLVE, to flesh out OpenMP Offload compiler support and related LLVM issues.”

The ECP goal is to run a challenge problem once the team can access an exascale system. Kent explains, “The challenge problem will determine the time to solution when computing the energy of a supercell of nickel oxide, which is one of the materials that challenges other electronic structure methods and that we were studying at the beginning of the ECP. Time to solution includes calculating the equilibration phase of the Monte Carlo—actually, the initial projection down to the electronic ground state—and then running long enough to measure the energy. This process combines elements of the weak and strong scaling behavior of our software.”
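Kent’s description of time to solution has two parts: an equilibration phase, then a measurement phase long enough to pin down the energy. This matches a generic Monte Carlo analysis pattern, sketched below as an illustration rather than QMCPACK’s own analysis tooling. Samples from the equilibration phase are discarded. Because successive samples are correlated, the error bar is computed from averages over blocks of consecutive samples rather than from the raw samples. The function names are hypothetical.

```python
import math


def blocked_error(samples, block_size):
    """Mean and standard error estimated from non-overlapping block averages.

    Blocking gives an honest error bar for serially correlated Monte Carlo data,
    provided block_size is much longer than the autocorrelation time.
    """
    n_blocks = len(samples) // block_size
    if n_blocks < 2:
        raise ValueError("need at least two blocks")
    blocks = [sum(samples[b * block_size:(b + 1) * block_size]) / block_size
              for b in range(n_blocks)]
    mean = sum(blocks) / n_blocks
    var = sum((x - mean) ** 2 for x in blocks) / (n_blocks - 1)
    return mean, math.sqrt(var / n_blocks)


def estimate_energy(trace, n_equil, block_size):
    """Discard the equilibration phase, then report the energy with a blocked error bar."""
    return blocked_error(trace[n_equil:], block_size)
```

In practice, block_size is increased until the error estimate stops growing; that plateau indicates the blocks are effectively independent. Setting block_size = 1 reproduces the naive error bar, which understates the uncertainty for correlated data.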

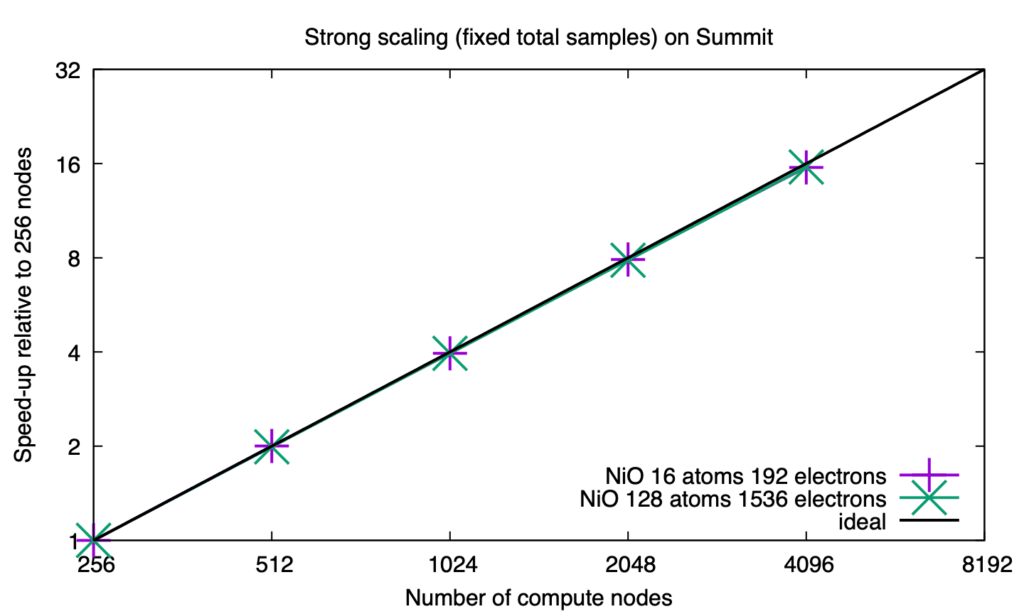

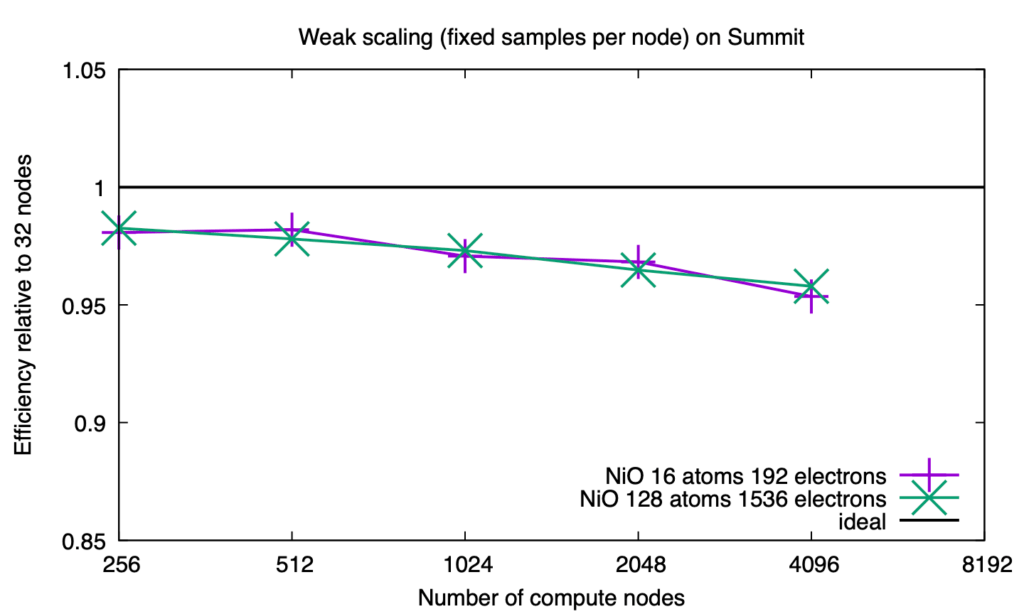

The strong scaling (Figure 2) and weak scaling (Figure 3) results obtained on the Summit pre-exascale supercomputer offer an exciting glimpse of QMCPACK’s potential performance on the new exascale machines. These results indicate that QMCPACK should be able to scale as needed to achieve high performance on an exascale supercomputer.
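Figures 2 and 3 use the two standard ways of measuring parallel scaling. The helpers below state the definitions as a generic sketch; any timings fed to them are hypothetical, not data read from the Summit runs. Strong scaling keeps the total problem fixed and asks how closely run time falls as nodes are added. Weak scaling grows the problem with the machine and asks how closely run time stays constant. For a Monte Carlo code, growing the problem typically means keeping the number of walkers per node fixed.

```python
def strong_scaling_efficiency(t_ref, n_ref, t_n, n):
    """Fixed total problem: ideally, time drops in proportion to n_ref / n."""
    return (t_ref * n_ref) / (t_n * n)


def weak_scaling_efficiency(t_ref, t_n):
    """Work per node held fixed while nodes are added: ideally, time stays constant."""
    return t_ref / t_n
```

For example, suppose a fixed-size run takes 100 s on 1 node and 50 s on 4 nodes. Its strong scaling efficiency is then 0.5, because perfect scaling would have finished in 25 s.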

Software Aspects that had to be Figured Out

Kent summarized the team’s effort in terms of “important things we had to figure out,” which coalesced into three sets of ECP activities:

- The team must design and implement a performance-portable version of the application that runs at high efficiency on GPUs from all three major vendors, with minimal changes in source code and negligible variation in results. (Small differences in vendor hardware and libraries can cause unimportant variations in results.) Kent observes, “This is important for code maintenance and the general scalability of the humans involved, who are the most constrained parts of the operation.”

- The team must engage with the open-source and vendor compiler teams and with the SOLLVE project to mature the various OpenMP implementations so developers can rely on consistency of behavior, with minimal bugs, and not encounter unexpected performance aberrations. Kent notes, “The main problem has been that the OpenMP specification is years ahead of any implementation.”

- The team must improve the QMCPACK software engineering, testing, and developer operations. Kent notes, “The team pursued a major effort to accomplish this goal, and this effort required them to develop strategies to handle a stochastic method.”

A Model Use Case on the Value of the ECP

These “things to figure out” highlight the strength and success of the ECP story for QMCPACK. They are also reflected in other ECP efforts to create a sustainable, cross-platform, high-performance software ecosystem for the nation’s exascale systems. They also reflect well on the QMCPACK team, who have been open in presenting their thought processes and software activities. Their work shows other projects how to work within the ECP framework.

Most importantly, the team’s effort demonstrates how to transition from an academic-grade parallel code to production-ready software that can run on exascale systems regardless of their differing architectures and hardware components.

Their success reflects the value of the ECP software ecosystem because it exemplifies the ECP’s and the funding agencies’ foresight in meeting future HPC and scientific computing requirements. It also highlights the efforts of the ECP Application Development and Software Technology teams, which are working to identify scientific and user needs while also collaborating with vendors, standards bodies, and open-source developers to meet their needs. The benefits of the ECP’s sustainable, cross-platform, high-performance software ecosystem extend far beyond the exascale community to serve the interests of national security,[12],[13],[14] industry, and scientists around the world.

Designing a Performance Portable Software Framework

As stated, the QMCPACK team’s implementation goal is a performance-portable software framework that runs well on machines ranging from student laptops to exascale systems. Because QMCPACK supports GPUs, the framework must account for differences in vendor hardware, software, and programming languages. This presented the team with a conundrum. There are simply too many kernels in the QMCPACK code to rewrite for each vendor’s GPUs and, by extension, for possibly incompatible GPU generations. Thus, the team needed a strategy for writing a QMC method that efficiently supported each vendor’s single instruction, multiple threads (SIMT) GPU architecture. That strategy also had to minimize data transfers between the CPU and the GPU’s separate on-device memory. SIMT is a more restrictive execution model than the multiple instruction, multiple data (MIMD) model of general-purpose CPUs. Groups of GPU threads execute the same instruction in lockstep, so divergent control flow is serialized. Differences between the two models can cause catastrophic slowdowns when CPU software is ported to GPUs.
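A deliberately simplified cost model shows why SIMT divergence hurts. The model is an assumption for illustration and ignores memory effects and newer scheduling features. Threads are grouped into warps: 32 lanes on NVIDIA GPUs, while AMD wavefronts are often 64. When lanes in a warp take different branches, the warp executes each taken branch in turn while the other lanes sit idle. Its cost is therefore the sum of the branch costs, whereas independent MIMD cores would each pay only for their own branch. The function names are hypothetical.

```python
def warp_cost(lane_branches, branch_cost):
    """Simplified SIMT model: a warp runs each distinct branch its lanes take, one after
    another, with the lanes that did not take that branch masked off."""
    return sum(branch_cost[b] for b in set(lane_branches))


def kernel_cost(thread_branches, branch_cost, warp_size=32):
    """Sum of warp costs, grouping consecutive threads into warps of warp_size lanes."""
    return sum(warp_cost(thread_branches[i:i + warp_size], branch_cost)
               for i in range(0, len(thread_branches), warp_size))
```

Consider two equally expensive branches. Under this model, 64 threads that alternate between the branches cost twice as much as the same threads sorted so that each warp takes a single branch. Reorganizing work and data so that neighboring threads follow the same path is therefore a recurring theme when porting CPU code to GPUs.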

This led to an important design decision: to push device-specific implementation details as low in the software stack as possible via an abstraction API. For QMCPACK’s performance, it is critical that the abstraction include dynamic dispatch to support asynchronous hardware operations. The abstraction API lets the team write OpenMP code by default. It also lets them use specialized vendor-specific software to unlock more performance and access unique hardware features. The result is a software package that runs on the CPU by default and can use heterogeneous CPU and GPU execution when a GPU is available.
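The abstraction-API idea can be sketched in a few lines of Python. Everything here is hypothetical and is not QMCPACK’s API, which is written in C++. The illustrative names are the classes Backend, PortableBackend, and VendorBackend and the function select_backend; a single-worker thread pool stands in for an in-order GPU stream. Physics code calls only the abstract interface, and dynamic dispatch resolves which kernel actually runs at run time. Each call returns a future, so the caller can overlap host-side work with the queued operation.

```python
from abc import ABC, abstractmethod
from concurrent.futures import Future, ThreadPoolExecutor


class Backend(ABC):
    """Hypothetical low-level interface that the physics code programs against."""

    name = "abstract"

    def __init__(self):
        # A single worker thread stands in for an in-order device stream.
        self._queue = ThreadPoolExecutor(max_workers=1)

    def submit_dot(self, x, y) -> Future:
        """Enqueue the operation and return at once; call .result() to synchronize."""
        return self._queue.submit(self._dot, x, y)

    @abstractmethod
    def _dot(self, x, y) -> float:
        """Backend-specific kernel, resolved by dynamic dispatch at run time."""


class PortableBackend(Backend):
    name = "portable"   # stands in for the default (e.g., OpenMP) code path

    def _dot(self, x, y):
        return sum(a * b for a, b in zip(x, y))


class VendorBackend(PortableBackend):
    name = "vendor"     # stands in for a hypothetical vendor-library path

    def _dot(self, x, y):
        # A real version would call a vendor library; this stand-in reuses the portable math.
        return super()._dot(x, y)


def select_backend(gpu_available: bool) -> Backend:
    """Portable path by default; specialized path only when a GPU is present."""
    return VendorBackend() if gpu_available else PortableBackend()


def weighted_mean(backend: Backend, weights, values):
    """Caller code is identical whichever backend was selected."""
    pending = backend.submit_dot(weights, values)   # asynchronous launch
    total_weight = sum(weights)                      # host work overlapping the launch
    return pending.result() / total_weight
```

Adding a new vendor path means adding one subclass; callers such as weighted_mean do not change. That property lets the default code path stay portable.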

In computer science terms, QMCPACK and the Monte Carlo algorithms implemented by the team map to a relatively simple directed acyclic graph. Unlike many other HPC projects, the QMCPACK team already knows the optimal mapping to the hardware. The main issues involve performance-critical behavior, such as moving data and respecting the memory limits of GPU hardware. The team developed heuristics that determine which operations run on the CPUs and which run on the GPUs, and these heuristics perform well in practice.
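The article does not spell out the team’s placement heuristics. The sketch below only illustrates the kind of rule involved, built on the two constraints named above. An operation goes to the GPU only if its data fits in the remaining device memory and it carries enough work to amortize launch and transfer overhead. The Operation class, the place and plan functions, and the 1e6-FLOP threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Operation:
    name: str
    flops: float        # estimated floating-point work
    bytes_needed: int   # device memory the operation's data would occupy


def place(op, gpu_free_bytes, min_gpu_flops=1e6):
    """Hypothetical rule: use the GPU only if the data fits and the work amortizes launch cost."""
    if op.bytes_needed > gpu_free_bytes:
        return "cpu"    # respect the GPU memory limit
    if op.flops < min_gpu_flops:
        return "cpu"    # too little work to justify a kernel launch and data transfer
    return "gpu"


def plan(ops, gpu_capacity_bytes, min_gpu_flops=1e6):
    """Place operations in order, tracking how much device memory remains."""
    free = gpu_capacity_bytes
    placement = {}
    for op in ops:
        where = place(op, free, min_gpu_flops)
        if where == "gpu":
            free -= op.bytes_needed
        placement[op.name] = where
    return placement
```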

A key algorithmic advance relates to how the Markov chains are updated in QMC.[15] When QMCPACK was first ported to GPUs, the team implemented a batching strategy so that kernels were large enough to use the GPU resources fully, minimizing overall latency.[16] The team found that for large systems and large batch sizes, this technique created CPU bottlenecks, which limited GPU occupancy and overall performance. The new design refines this approach by introducing an additional level of parallelization, which the team calls a crowd of walkers (Markov chains). Updates are now performed on a per-crowd basis, and each crowd can be assigned specific CPU threads and a unique GPU stream. This capability allows more asynchronicity in the main loop of the simulation and increases overall GPU occupancy compared with the older algorithm.
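The crowd idea can be sketched as follows. This is a hypothetical Python illustration, not QMCPACK’s C++ implementation. The walker population is split into crowds, and each crowd is advanced as one batch by its own host thread. The crowd index marks where a real code would bind a dedicated GPU stream. Because of Python’s global interpreter lock, the threads here show the structure rather than a speedup. The helper names make_crowds, advance_crowd, and advance_all are invented for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def make_crowds(walkers, n_crowds):
    """Split the walker list into n_crowds contiguous, nearly equal crowds."""
    base, extra = divmod(len(walkers), n_crowds)
    crowds, start = [], 0
    for c in range(n_crowds):
        size = base + (1 if c < extra else 0)
        crowds.append(walkers[start:start + size])
        start += size
    return crowds


def advance_crowd(crowd_id, crowd, move):
    """Batched update of all walkers in one crowd.

    crowd_id is where a real code would select this crowd's private GPU stream.
    """
    return [move(w) for w in crowd]


def advance_all(walkers, n_crowds, move):
    """One host thread per crowd; crowds proceed independently of one another."""
    crowds = make_crowds(walkers, n_crowds)
    with ThreadPoolExecutor(max_workers=n_crowds) as pool:
        futures = [pool.submit(advance_crowd, cid, crowd, move)
                   for cid, crowd in enumerate(crowds)]
        return [w for f in futures for w in f.result()]
```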

Kent notes, “The task scheduling is working now and is being used for science but is still a work in progress.” Other design choices included selecting algorithms that use minimal global communications. This decision helped focus the optimization effort because the best performance occurs when maximizing the local node performance. This approach also scales well (Figure 2 and Figure 3).
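Keeping global communication to a minimum typically means that each node accumulates its statistics locally and the nodes combine them only once. The sketch below uses invented names. Each node keeps a count, a sum, and a sum of squares. Because merging these partials is associative, a single reduction suffices, for example one MPI_Allreduce with MPI_SUM over the three values in an MPI code. Plain Python objects stand in for the nodes here.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass
class Partial:
    """Node-local running sums for a scalar observable such as the local energy."""
    count: int = 0
    total: float = 0.0
    total_sq: float = 0.0

    def add(self, x):
        self.count += 1
        self.total += x
        self.total_sq += x * x


def merge(a, b):
    """Associative combine step: the only operation that needs global communication."""
    return Partial(a.count + b.count, a.total + b.total, a.total_sq + b.total_sq)


def global_mean_and_variance(partials):
    """Combine all node-local partials in one reduction, then finish on a single node."""
    c = reduce(merge, partials, Partial())
    mean = c.total / c.count
    # Textbook formula; fine for illustration, though it can lose precision for large means.
    return mean, c.total_sq / c.count - mean * mean
```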

From day one, QMCPACK will run on everything from Aurora and Frontier to laptops and workstation-class CPU and GPU systems.[17] The exceptional scaling behavior (Figure 2 and Figure 3) indicates that the software will make effective use of exascale hardware. It will likely also serve as a use case worthy of study on how to refactor scientific software for these machines.[18]

Selection of the Software Technology

Much thought was put into the choice of software technology. During the analysis phase, the team explored various frameworks, such as Kokkos and OpenMP Offload. The team also examined the future C++ standard, which they determined was of great interest but not yet mature enough. Based on their analysis, the team chose OpenMP Offload.

Essential to the analysis and decision-making process was the creation of mini apps. Key kernels were also written or extracted from the QMCPACK code to facilitate easier study and prototyping.[19]
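The poster does not list which kernels were extracted, so the mini app below is an assumed example built around a standard QMC kernel. That kernel is the ratio of Slater determinants when one electron moves, which replaces one row of the matrix. With the inverse already in hand, the ratio is a single dot product of the new row with column k of the inverse, instead of a fresh determinant. The point is the mini-app pattern: an isolated kernel, a slow but trusted reference, a correctness check, and a timer. The sketch assumes NumPy, and the function names are hypothetical.

```python
import time

import numpy as np


def ratio_fast(a_inv, new_row, k):
    """det(A') / det(A) when row k of A is replaced by new_row: one O(N) dot product."""
    return new_row @ a_inv[:, k]


def ratio_direct(a, new_row, k):
    """Reference: rebuild the matrix and compare log-determinants (O(N^3) work)."""
    a_new = a.copy()
    a_new[k, :] = new_row
    s_new, log_new = np.linalg.slogdet(a_new)
    s_old, log_old = np.linalg.slogdet(a)
    return (s_new / s_old) * np.exp(log_new - log_old)


def run_miniapp(n=64, trials=100, seed=0):
    """Check the kernel against the reference and time the kernel alone."""
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((n, n)) + n * np.eye(n)   # well-conditioned test matrix
    a_inv = np.linalg.inv(a)
    max_rel_err, kernel_seconds = 0.0, 0.0
    for _ in range(trials):
        k = int(rng.integers(n))
        new_row = a[k, :] + 0.1 * rng.standard_normal(n)  # small "single-electron move"
        t0 = time.perf_counter()
        fast = ratio_fast(a_inv, new_row, k)
        kernel_seconds += time.perf_counter() - t0
        ref = ratio_direct(a, new_row, k)
        max_rel_err = max(max_rel_err, abs(fast - ref) / abs(ref))
    return max_rel_err, kernel_seconds
```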


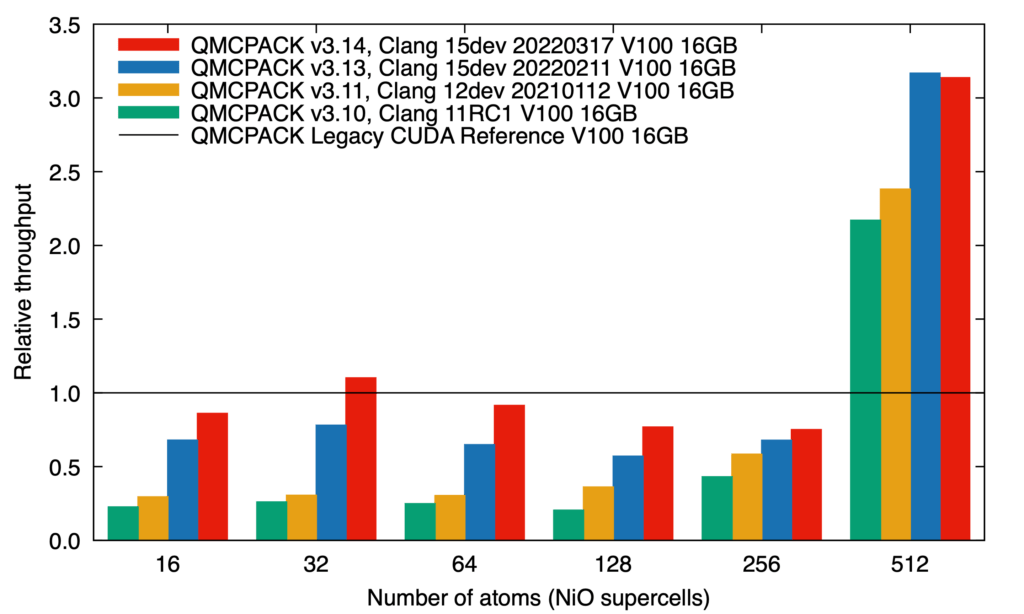

During the 2022 ECP Annual Meeting, the QMCPACK team noted in their poster, Towards QMCPACK Performance Portability, “After 3 years of porting, the performance of our new batched offload implementation now rivals the legacy CUDA version for performance for a broad range of problems while offering more functionality on NVIDIA GPUs. The code base passes all the correctness checks on AMD GPUs. We expect further optimization in the AMD software stack to unleash the full potential of AMD hardware.” Figure 4 shows the results from mainline QMCPACK, from 2020 to present.

Figure 4. Performance of the new batched offload implementation in QMCPACK now rivals the legacy CUDA version. Shown is throughput relative to the legacy CUDA version versus number of atoms for a broad range of problems. The different datasets show the progression made with new QMCPACK versions and with improvements in LLVM’s OpenMP implementation.

Achieving Performance on the Leading Edge was an ECP Collaborative Effort

The success of this software development effort is demonstrated by how well QMCPACK runs on laptops, workstations, and even the latest-generation supercomputers.[20]

Kent notes that collaborations with the ECP’s SOLLVE project helped flesh out compiler support of the OpenMP Offload standard, resolve bugs, and address performance issues. The need to interact with all the vendors, open-source projects, and LLVM development teams was too much for the QMCPACK project to take on by itself. “All options required maturation,” he observed. “The OpenMP Offload standard looks good, but the software was not mature enough. For example, some parts were not implemented, while some parts used too many synchronizations or memory copies.”

The ECP’s focus on performance for the nation’s supercomputers proved particularly valuable because the OpenMP Offload standard does not impose performance requirements. Kent explains, “Both correctness and performance are important.” Notably, QMCPACK performance requires that OpenMP tasks run concurrently on CPU and GPU threads and that streams be efficient. Furthermore, QMCPACK must maximize on-node concurrency, including support for non-uniform memory access (NUMA) memory systems. Developers must also be able to rely safely on the performance promise of the SIMT model when programming to exploit GPU hardware.

Over the past 3 years, the team found many performance issues in both vendor-developed and open-source compilers. “The good news,” Kent highlights, “is that we are getting lots of cooperation in resolving performance and correctness issues. The latest open-source LLVM release that targets NVIDIA GPUs performs well and is robust enough for production. Other vendor and GPU support from LLVM and LLVM-derived compilers is getting close. For example, compilers and run times can now map kernel launches from different threads to different distinct streams. We need this ability for performance because we know the compilers’ work is independent. Our focus for AMD GPUs is currently on improved performance through refinements of the compilers and run time because correctness has already been demonstrated. We are looking forward to achieving the same on Aurora’s Intel GPUs. Overall, we are looking forward to reporting production exascale results once performance and correctness are achieved on the production machines.”


Software Testing is Critical to Guarantee Program Correctness

In creating a performance portable framework, the team had to ensure that the software always produces correct results on all platforms—laptops, workstations, supercomputers, exascale-class machines, or future post-exascale computing systems.

The evolution of QMCPACK required far more than just adopting good software coding practices. Internally, the QMC method has many state machines that operate in a complex fashion to eliminate duplicate computation. This complexity requires extensive testing to ensure correctness in the face of rapid, major software changes. It also requires that scientific domain experts join the project and be free to make major changes to the state machines. At the same time, the team must ensure that those changes do not introduce bugs or affect the accuracy of the results.

Considering these and other factors, the team recognized that occasionally they must make major updates to the code. Kent explains, “Having a robust CI and development process means that bugs and portability issues can be quickly identified. For example, the QMCPACK CI framework now incorporates many unit and code coverage tests, such as sanitizer checks to identify memory leaks. These checks will increase over time and include feedback from users. Overall, CI lets us ratchet up the quality of the software over time while giving us the freedom to make any necessary changes quickly.”
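Kent mentions that the team had to develop strategies for testing a stochastic method, but the details are not given here. One common approach, sketched below as an assumption rather than QMCPACK’s documented procedure, compares each result with a reference value. The test passes if the two agree within a few combined standard errors. The function name is hypothetical.

```python
import math


def statistically_consistent(value, error, reference, ref_error=0.0, n_sigma=3.0):
    """Pass if |value - reference| is within n_sigma combined standard errors."""
    if error < 0 or ref_error < 0:
        raise ValueError("error bars must be non-negative")
    combined = math.hypot(error, ref_error)   # errors of independent estimates add in quadrature
    if combined == 0.0:
        return value == reference            # deterministic quantities must match exactly
    return abs(value - reference) <= n_sigma * combined
```

With n_sigma = 3 and Gaussian errors, roughly 0.3% of correct runs will still fail by chance. Such tests are therefore usually run with fixed random seeds or with a rerun policy.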


Evolving Beyond the Limits of Academic Code

As with many important scientific codes, QMCPACK started as a research-grade, academic project. Acceptance of the code was achieved only after the results from the code were verified by experts in the field. Academic projects rarely invest in capturing these verification runs and automating them as part of a regression test suite. Without such a test suite, and the ability to run it after code updates, developers become reluctant to make any change that would significantly alter the verified, known-working algorithms and code base.

This locks in the early design decisions, which are rarely optimal, and creates a degenerate situation in which algorithm and software innovation is stifled and even discouraged. Over time, the situation can degrade further because of package version lock-in. Without an automated testing and verification capability, people won’t use updated versions of the software libraries and other packages on which the application relies, again because they aren’t the verified, known-working versions. Such reliance on outdated software can cause many problems, ranging from poor scaling and runtime behavior to lack of support for new hardware. Eventually, bugs cannot be fixed because the package developers are no longer willing to support the outdated versions of their software.

These are a few of the many reasons that highlight the need for CI and a sustained investment in CI across many organizations to ensure that the application will run correctly and deliver acceptable performance on all supported hardware platforms.

- Funding is essential at the project level because allocating people to create, automate, and maintain a comprehensive regression test suite is labor intensive and expensive.

- Multi-organization support is required to provide access to all the data centers where the team of a popular scientific package might need to run its validation test suite. This is a nontrivial cost because such CI workloads are generally both machine intensive and time-consuming.

The ability to verify program correctness with confidence provides a safety net for developers and algorithm designers. They can rely on it as they examine and correct inefficiencies in their code, algorithms, and software ecosystem. This confidence, in turn, gives them the freedom to innovate and create software and algorithmic approaches that can harness the capabilities of new hardware devices such as GPUs. Portability makes CI a requirement because programmers must ensure both correctness and performance across all supported platforms.

The QMCPACK team chose to invest in both CI and good software practices. The resulting success, even with the complexity of the QMC state machines, makes QMCPACK an excellent use case that other HPC projects can analyze and emulate. The software demonstrates to developers and funding agencies that investing in good software practices pays big dividends.

For more information, see the tutorial “Developing a Testing and Continuous Integration Strategy for Your Team.”

Automation of the Software Build Process

Portability also requires the use of a software infrastructure to keep the team from being inundated with build issues. This need motivated the ECP and QMCPACK to adopt the Spack package manager. Kent notes, “Spack integration provides the many disparate software dependencies that support CI and is a development use case for testing many vendor and open-source compilers and for testing library performance and correctness in our application. The result has improved compiler and library performance and correctness. It makes testing at scale feasible because we don’t have the resources to manually build all these things.”


Summary

The QMCPACK software is a strong use case that illustrates how to take a working, parallelized academic code that ran well on one supercomputer system and turn it into a framework that runs well on systems of practically any size. The team adopted good software and validation practices in creating their framework. This freed domain experts from the limitations of legacy algorithms (e.g., the QMC state machines) to produce algorithms suited to exascale systems, including the memory constraints and massive parallelism of GPUs. In summary, the team

- identified how to map QMC efficiently to ECP exascale hardware such as GPUs;

- applied that knowledge to address identified science goals;

- collaborated within the ECP to help mature the OpenMP Offload compiler ecosystem;

- used CI and Spack to make efficient use of human resources so that domain experts can make accurate, yet parallel- and memory-friendly, modifications that speed up performance; and

- crafted a software framework that is benefitting scientists and that will support them for decades to come.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national laboratories and commercial organizations.

[1] https://iopscience.iop.org/article/10.1088/1361-648X/aab9c3

[2] See also Developing a Testing and Continuous Integration Strategy for your Team.

[3] See note 1

[4] https://aip.scitation.org/doi/10.1063/5.0004860

[5] See note 1

[6] https://github.com/QMCPACK/qmc_workshop_2021/blob/master/week1_kickoff/week1_kickoff.pdf

[7] See note 1

[8] See note 1

[9] See note 1

[10] See note 1

[11] https://github.com/QMCPACK/qmcpack

[12] https://www.exascaleproject.org/highlight/llnl-atdm-addresses-software-infrastructure-needs-for-the-hpc-nnsa-cloud-and-exascale-communities/

[13] https://www.exascaleproject.org/highlight/visualization-and-analysis-with-cinema-in-the-exascale-era/

[14] https://www.exascaleproject.org/highlight/advancing-operating-systems-and-on-node-runtime-hpc-ecosystem-performance-and-integration/

[15] https://arxiv.org/abs/2209.14487

[16] https://ieeexplore.ieee.org/document/5601669

[17] https://ecpannualmeeting.com/poster-interface23894732314e23hreu823rd/posters/de37b1248f5a030d59a2dc9bf329ecfc2f03269dc9abd3b8fd8626191a8d1fb7.pdf

[18] See note 15

[19] https://ecpannualmeeting.com/assets/overview/posters/QMCPACK_Kent_ECP_Houston_2020.pdf

[20] See note 11