By Rob Farber, contributing writer

To ensure that simulation codes from the National Nuclear Security Administration’s (NNSA’s) Advanced Simulation and Computing (ASC) program are ready to leverage new exascale machines, NNSA funds the Advanced Technology Development and Mitigation (ATDM) program within the Exascale Computing Project (ECP). The ECP is jointly funded by the NNSA and the US Department of Energy’s (DOE’s) Office of Science. There is also a complementary relationship between the NNSA and the Office of Science’s Advanced Scientific Computing Research program.

Although ATDM primarily supports NNSA’s traditionally closed mission of national security, Lawrence Livermore National Laboratory’s (LLNL’s) ATDM Software Technology (ST) project exemplifies how in many cases the best way to support that mission is through open collaboration and a sustainable software infrastructure. LLNL ATDM is contributing key open-source components of a full-featured, integrated, and maintainable software stack for exascale systems that will impact both the ECP and the broader high-performance computing (HPC) community. “This work ultimately supports users in getting simulation results, but the primary focus is on infrastructure needs,” said Becky Springmeyer, PI of the LLNL ATDM ST project and leader of the Livermore Computing division at LLNL. “LLNL ATDM ST supports a set of open-source projects that create a framework for workflows that support end users, computational scientists, and computer scientists.”

Key infrastructure foci include the following:

- Programming models and runtimes for GPUs (RAJA, CHAI, and Umpire)

- Mathematical libraries (MFEM)

- Productivity technologies (Spack)

- Workflow scheduling (Flux)

Todd Gamblin, ECP lead for Software Packaging Technologies and ATDM ST deputy, points out, “This project is about recognizing the sustainable value of open-source projects. We are looking to broaden our software and the software for the HPC community.”

Technical Introduction

The overarching goal of the LLNL ATDM ST project is to build infrastructure to support a full-featured, integrated, and maintainable exascale software stack, which is essential to supporting the ASC Program’s computational mission. At the infrastructure level, the ASC program’s national security mission shares many challenges with the rest of the ECP. Exploiting the capabilities of modern GPUs (graphics processing units) is key for nearly every component of the exascale software stack, and libraries such as RAJA and MFEM enable developers to harness these devices in a portable way. The complexities introduced by GPUs likewise fuel the need for better software management (e.g., Spack) and better scheduling (e.g., Flux). LLNL ATDM ST is an integrated effort across these sub-projects, which, as noted on the LLNL-ECP software technology website, “provides both coordination as well as a clear path from R&D to delivery and deployment.”[1]
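RAJA’s approach to portability, writing a loop body once and choosing how it runs through an execution policy, can be illustrated with a small sketch. This is an analogy under stated assumptions rather than RAJA’s API: RAJA is a C++ library in which `RAJA::forall` takes the policy as a template argument, whereas the Python names below (`seq_policy`, `threaded_policy`, `forall`, `daxpy`) are hypothetical, and a thread pool merely stands in for a GPU back end.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical "execution policies": each runs body(i) for every i in [0, n).
# RAJA itself is a C++ library; these Python names are illustrative only.


def seq_policy(n: int, body: Callable[[int], None]) -> None:
    """Run the loop body serially, one index at a time."""
    for i in range(n):
        body(i)


def threaded_policy(n: int, body: Callable[[int], None], workers: int = 4) -> None:
    """Run the loop body across a pool of worker threads (stand-in for a GPU)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() forces evaluation so any exception raised in body surfaces here.
        list(pool.map(body, range(n)))


def forall(policy: Callable[[int, Callable[[int], None]], None], n: int,
           body: Callable[[int], None]) -> None:
    """Single-source loop: the body is written once; the policy picks the back end."""
    policy(n, body)


def daxpy(policy, a: float, x: list[float], y: list[float]) -> list[float]:
    """Compute a*x + y elementwise using whichever policy is passed in."""
    out = [0.0] * len(x)

    def body(i: int) -> None:
        out[i] = a * x[i] + y[i]  # each index writes its own slot, so no races

    forall(policy, len(x), body)
    return out


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    y = [10.0, 20.0, 30.0, 40.0]
    print(daxpy(seq_policy, 2.0, x, y))       # [12.0, 24.0, 36.0, 48.0]
    print(daxpy(threaded_policy, 2.0, x, y))  # [12.0, 24.0, 36.0, 48.0]
```

Both calls produce identical results; only the policy argument changes, which is the property that lets application code target different hardware without rewriting its kernels.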

Both Springmeyer and Gamblin observe that the ATDM project existed before the start of the ECP, and LLNL plans to continue these efforts in the future – throughout the exascale era and beyond. The ECP provided an opportunity for the LLNL ATDM projects to have broader impact on the HPC community through collaboration and for the projects to build sustainable open-source communities. Springmeyer states, “Collaborating with open source, universities, and vendors means we will have a stronger next-generation software framework to support our code at exascale and the future generations of supercomputers. It’s all intertwined, which is why we are investing in the overall environment and not only on individual projects.”

Both Springmeyer and Gamblin (Figure 1) highlight the importance of NNSA support for the development of open-source software technologies and how these technologies contribute to the success of national security applications. Beyond national security, the same open-source technologies have a large impact on the ECP and the global HPC community. The result is a win-win: a large scientific user base can set new high-water marks in scalability and performance while exercising ATDM-targeted standards, toolsets, and libraries on the latest exascale supercomputer architectures. In return, the success of these external efforts ensures that ASC mission needs can be met with software technology that has been tested and vetted, by pressing the limits of the latest hardware, on multiple leadership-class supercomputers.

Gamblin specifically notes, “Spack is one example of a software project that affects much of the ECP software stack. The package handles the process of downloading a tool and all the necessary dependencies—which can be tens or hundreds of other packages—and assembles those components while ensuring that they are properly linked and optimized for the machine. Spack is the backbone of E4S, which is the ECP’s suite of open-source software products.” Gamblin points out that “E4S itself is around 100 packages that leverage nearly 500 additional packages from Spack’s open-source repository. An integrated stack of this size would not be possible without tooling like Spack.”
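The dependency handling Gamblin describes can be sketched as a graph problem: gather a package’s transitive dependencies, then build them in an order where every dependency comes before anything that needs it. The sketch below assumes a hypothetical, simplified dependency table and uses Python’s standard `graphlib.TopologicalSorter`; it is not Spack’s implementation, whose solver-based concretizer also chooses versions, variants, compilers, and build options that this example ignores.

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified dependency table: package -> packages it needs.
# Names are illustrative; real Spack recipes encode far richer constraints.
DEPS: dict[str, set[str]] = {
    "my-app": {"mfem", "raja"},
    "mfem": {"hypre", "metis"},
    "hypre": {"blas"},
    "raja": {"camp"},
    "metis": set(),
    "blas": set(),
    "camp": set(),
}


def closure(root: str, deps: dict[str, set[str]]) -> dict[str, set[str]]:
    """Collect root and every transitive dependency into a subgraph."""
    seen: dict[str, set[str]] = {}
    stack = [root]
    while stack:
        pkg = stack.pop()
        if pkg in seen:
            continue
        seen[pkg] = deps.get(pkg, set())
        stack.extend(seen[pkg])
    return seen


def build_order(root: str, deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every dependency is built before its dependents."""
    # TopologicalSorter expects node -> predecessors, i.e. package -> its dependencies.
    return list(TopologicalSorter(closure(root, deps)).static_order())


if __name__ == "__main__":
    for step, pkg in enumerate(build_order("my-app", DEPS), start=1):
        print(f"{step}. build {pkg} from source")
```

Because `my-app` depends on everything else in the table, it is always built last; `static_order()` raises `graphlib.CycleError` if the table contains a dependency cycle.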

RAJA, MFEM, and Flux have also benefitted from open-source communities and their work with the ECP. RAJA is used for GPU portability not only in LLNL’s internal codes, but also by external collaborators. The MFEM project has developed a network of users and contributors and recently held a workshop with over 100 community attendees. The Flux team also developed significant industry partnerships to leverage exascale scheduling capabilities for cloud computing.

The success of the program is exemplified by the broad adoption of E4S software across the cloud and HPC communities. LLNL has existing collaborations with AWS that involve Spack and Flux,[2],[3] which have expanded into a memorandum of understanding to define the role of leadership-class HPC in a future where cloud HPC is ubiquitous.[4] According to LLNL, “Building off that collaboration, LLNL and AWS will look to better understand how HPC centers can best utilize cloud resources to support HPC and explore models for cloud bursting, data staging, and data migration for deploying both on-site and in the cloud.”[5]

Sustainability is Paramount

The team recognizes that the mass adoption of these infrastructure components means the HPC community will continue to rely on the components far into the future.

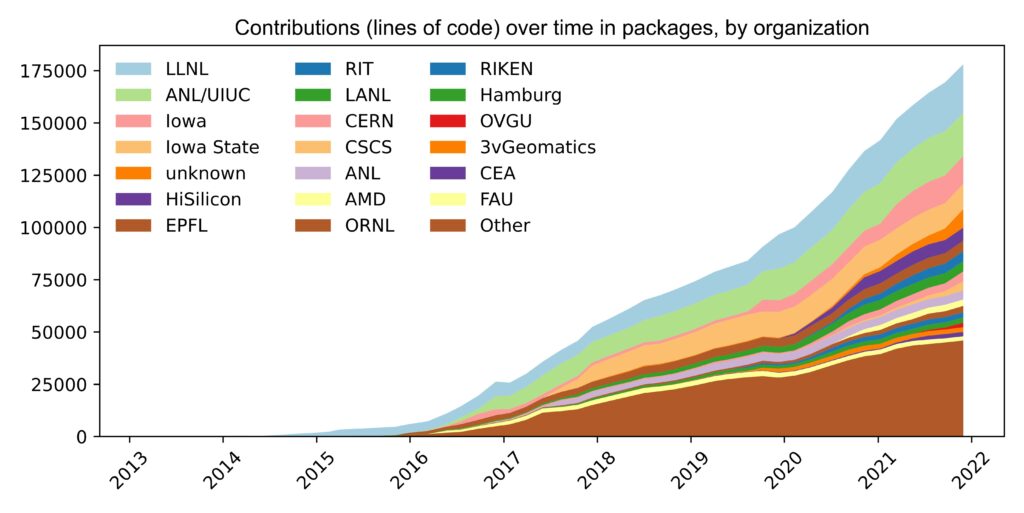

To achieve mass adoption and sustainability, the ECP and LLNL ATDM have made significant investments in hardening the software stack, which in turn has made these projects robust enough to grow communities. Gamblin notes, “Adoption is driven by value. These software components deliver significant value as they serve real needs of the users.” Spack is a critical part of the ECP and has a rapidly growing global community with over 5,900 software packages and over 900 contributors.[6] This growth makes sense, as explained in Scott Gibson’s podcast, Spack: The Deployment Tool for ECP’s Software Stack: “In [HPC], developers build software from source code and optimize it for the targeted computer’s architecture.” Spack lets them build that software from source on many different machines.

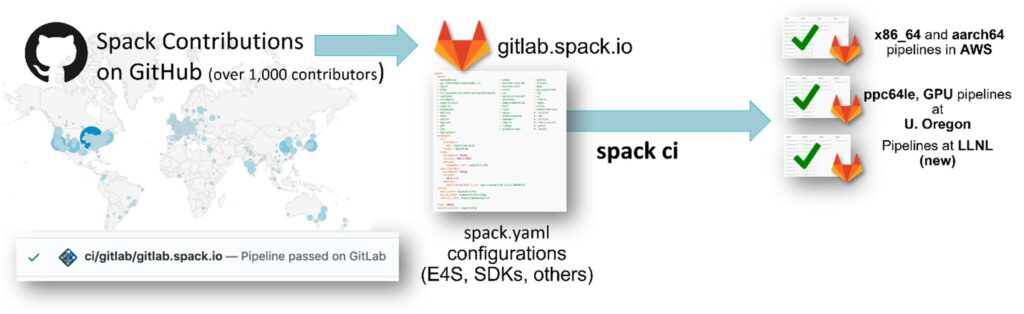

The implicit contract with the users when adopting a project such as Spack is that the software project will exist independent of any individual funding source and will continue to be supported in the future. The broad support by a global base of contributors (Figure 2) ensures that Spack will continue to be a current and viable mechanism for building software far into the future.

Figure 2: Spack sustains the HPC software ecosystem with the help of many contributors. (Source: https://indico.cern.ch/event/1078600/contributions/4536927/attachments/2331779/3973862/spack-hep-future-of-spack.pdf)

Spack is just one example of growing sustainable communities in the LLNL ATDM project. Gamblin notes, “Along with the adoption and many collaborations on Spack, many collaborations are also growing around Flux and RAJA—both in adoption and in contributions back to these projects. Ours is a long-term effort that looks beyond the ECP. We believe that these projects are never really done. However, it is not possible to sustain the current level of activity without some sort of sustainability project, post-ECP.” He continues, “Potential post-ECP software stewardship programs that may meet future needs include support by foundations and increased industry support to name a few possibilities. Such support is necessary for external teams to continue to rely on these tools.”

For more information about the Spack user demographics, please see Todd Gamblin’s 2020 User Survey. An example of industry support for Flux is shown in the presentation, KubeFlux: An HPC Scheduler Plugin for Kubernetes.

Leverage the Value of Open Source

Both Gamblin and Springmeyer recognize that, to be useful, each project must achieve and preserve a critical mass of users and contributors and obtain commercial adoption. Spack provides a bellwether example, as shown in Figure 3. The many contributors and continuous integration ensure that the Spack code runs reliably and correctly on a variety of architectures, including leadership-class exascale machines.

For outreach, the LLNL ATDM effort is building communities around its software projects via Birds-of-a-Feather gatherings, tutorials, articles (such as this one), and usable documentation on GitHub. Gamblin highlights recent success stories for each project:

- MFEM workshops

- Flux industry collaborations with IBM, Red Hat, and AWS

- RAJA industry contributions (e.g., support by AMD for their GPUs)

- Support from Amazon for Spack binary builds that stress test the software via many, many build cycles on the AWS cloud infrastructure

Continuous Integration, Security, and Federated Computing are Part of the Story

Gamblin notes, “The ECP is focused on big machines. We are designing software that can go big while supporting the general scientific audience.” Expounding on this, Springmeyer adds, “The software is both portable and consistent across platforms.”

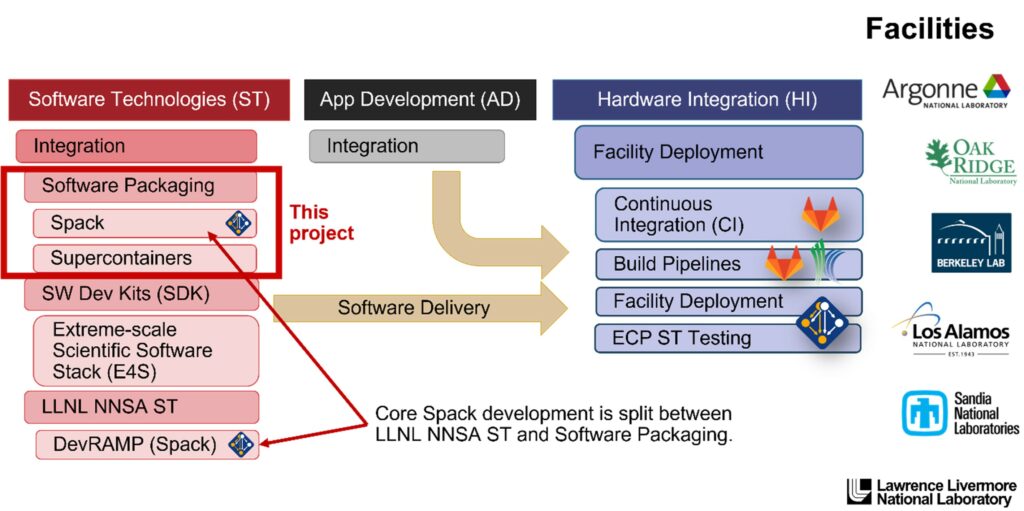

Both Springmeyer and Gamblin recognize that ECP funding has allowed for more design than is traditional for DOE R&D efforts. The software packaging technologies illustrated in Figure 4 have benefitted ECP projects across the board—from software technology and application development to hardware integration. They all come together to ultimately benefit production jobs that run on the latest leadership-class supercomputers.

Overall, automation is considered essential to supporting the more than 1,000 contributors Spack now has and to ensuring that their contributions are integrated correctly in, as Springmeyer noted, “a portable and consistent manner across platforms.” Without automation, it would simply be impossible for the team to manage the 400–500 contributions it receives each month. Automation also makes it possible to support the many macOS and Windows users as well as the increasingly varied HPC systems that run at all performance levels.

Both Springmeyer and Gamblin recognize that increased security is also required. Spack, for example, can address potential security concerns related to software supply chain issues. They also note that the Flux team is looking at how to integrate with cloud resources, given the increasing number of users who leverage HPC resources in the cloud. Cloud utilization reinforces the lessons learned from Spack in terms of security and robust verification via continuous integration.

In supporting projects utilized by many users and contributors, Springmeyer and Gamblin are working to ensure that the team remains open to new use cases so they can learn from them.

Summary

The LLNL ATDM team is building a tool set that benefits many within the HPC and NNSA communities. By leveraging the value of open-source collaboration and development, the team has already achieved a high level of adoption and community support for their hardened software stack. This success reflects the value of the ECP funding, which enabled more comprehensive design work than is traditional in many DOE R&D projects. The use of automation and continuous integration provides support for a wide variety of hardware platforms used by the global HPC community—from PCs and small workstations to computational clusters of all sizes. Even with their current success, the team recognizes that additional support must be investigated for post-ECP software stewardship to ensure that external users can continue to rely on this software infrastructure well into the future.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national laboratories and commercial organizations.

[1] See LLNL ATDM Software Ecosystem and Delivery at https://exascale.llnl.gov/llnl-ecp-software-technology.

[2] https://aws.amazon.com/blogs/hpc/introducing-the-spack-rolling-binary-cache/

[3] https://spack.io/spack-binary-packages/

[4] https://www.llnl.gov/news/llnl-amazon-web-services-cooperate-standardized-software-stack-hpc

[5] Ibid.

[6] https://indico.cern.ch/event/1078600/contributions/4536927/attachments/2331779/3973862/spack-hep-future-of-spack.pdf