A team funded by the Exascale Computing Project is preparing to release a new version of HPCToolkit, a suite of performance analysis tools that helps developers identify and diagnose performance bottlenecks on emerging exascale systems. This version of HPCToolkit employs a new, highly multithreaded strategy that the team calls “streaming aggregation” to accelerate postmortem analysis of performance measurements. The enhanced analysis employs both shared and distributed memory parallelism to process large amounts of data and exploits data sparsity to reduce storage and I/O resource demands. HPCToolkit supports instruction-level performance analysis of GPU-accelerated systems unlike any other existing tool, enabling developers of large-scale applications to pinpoint where and why performance losses occur. Using the same amount of resources, the team’s approach completes large-scale performance analysis of GPU-accelerated applications more than an order of magnitude faster than the previous version of HPCToolkit, and its sparse analysis results are as much as three orders of magnitude smaller than HPCToolkit’s previous dense representation of metrics. The team’s work was published in the Proceedings of the International Conference on Supercomputing ’22, which took place in June 2022.

Analyzing the source of performance losses will be challenging for developers using exascale systems because losses may appear only at scale, making it necessary to measure and analyze the applications running on tens of thousands of GPU-accelerated compute nodes. Performance analysis on GPU-accelerated systems is complicated by the fact that over 130 GPU metrics may be gathered to identify performance losses but none of them apply to CPU contexts. HPCToolkit constructs sophisticated approximations of call path profiles for GPU computations; uses PC sampling and instrumentation to support fine-grained analysis and tuning; measures GPU kernel executions using hardware performance counters; and collects, analyzes, and visualizes call path traces within and across nodes to provide a view of how an execution evolves over time.

The team also recently developed a new I/O abstraction layer that accelerates reading and writing of performance data by leveraging the capabilities of parallel file systems available on supercomputers. A new release of HPCToolkit based on recent research will be deployed in the coming months. The researchers are also working to improve HPCToolkit’s user interface to better present the complex performance data from parallel GPU-accelerated applications.

Jonathon Anderson, Yumeng Liu, and John Mellor-Crummey. “Preparing for Performance Analysis at Exascale.” 2022. Proceedings of the International Conference on Supercomputing ’22 (June).

https://doi.org/10.1145/3524059.3532397

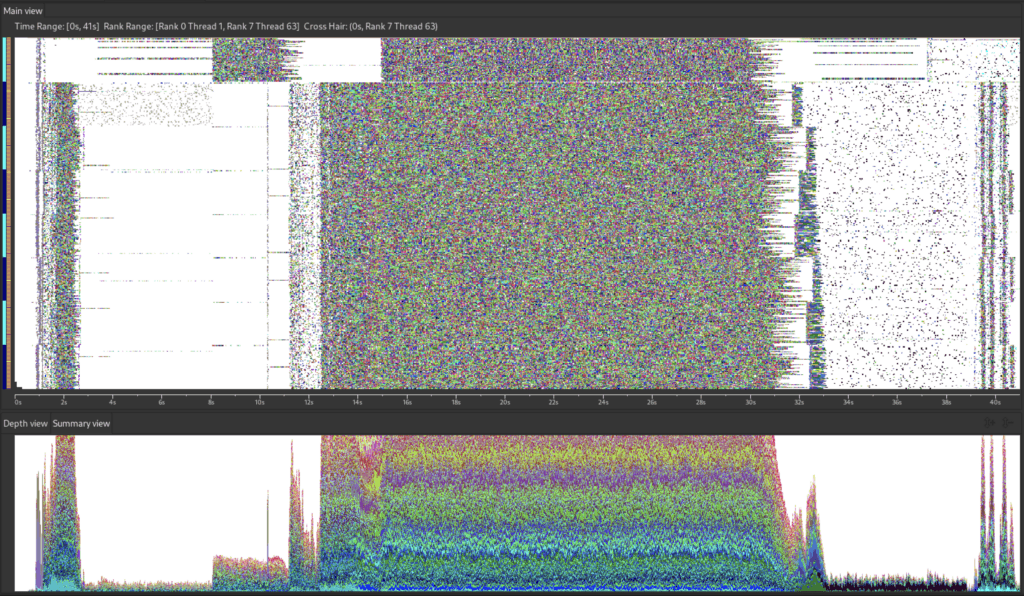

Visualization of HPCToolkit’s hpcprof-mpi using 8 nodes employing 63 threads each (504 threads total) to analyze performance data collected for a test case of the LAMMPS code running on 2K CPUs and 2K GPUS. hpcprof-mpi analyzes 38.1 GB of performance measurement data in 41 seconds. The prominent speckled region at top shows the team’s novel, highly parallel streaming aggregation approach at work. The lack of white space or solid color bands in this central region shows that the streaming aggregation approach does not stall or wait due to synchronization. The series of bands at right show the activity as hpcprof-mpi performs a sparse out-of-core transpose of performance data and writes its analysis results.