A team working on the ECP’s Exascale Atomistic Capability for Accuracy, Length, and Time (EXAALT) project has developed a task-level speculative method that maximizes parallelism and computational throughput at large scales by predicting whether the outputs of individual tasks will be used in subsequent executions. The method optimizes resource allocation and improves strong scalability, delivering 2× to 20× speed-ups in tests with EXAALT’s ParSplice simulator on a range of physical systems. Improvements were greatest for the most complex systems, in which speculative tasks had lower probabilities of being useful and the method could better exploit the trade-off between overall task throughput and reduced time-to-solution for the most likely tasks. The researchers’ work was published in the September 2022 issue of Parallel Computing.

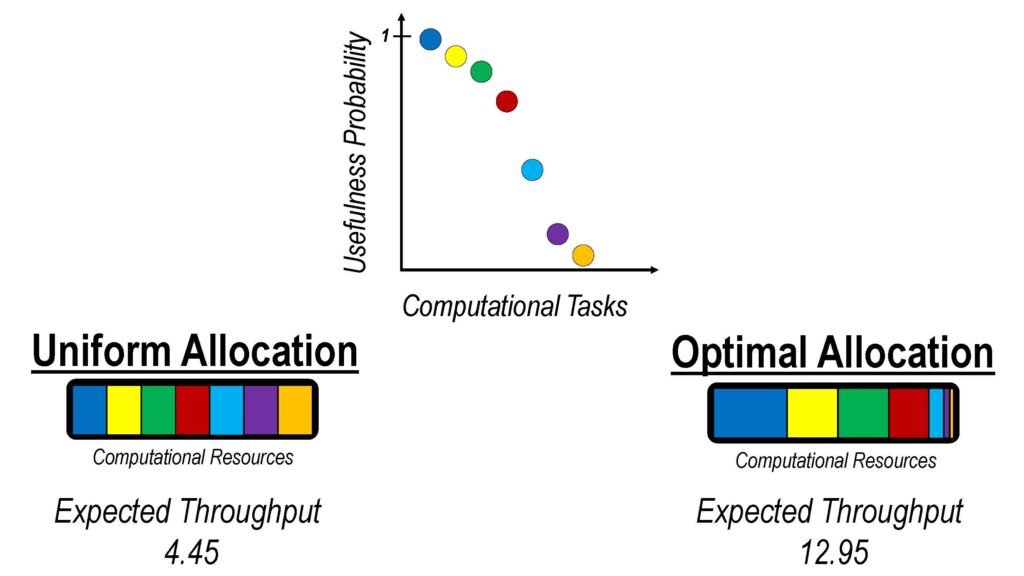

The growing number of processing elements in high-performance and exascale computing systems challenges programmers to exploit ever-greater parallelism efficiently. Many programmers have turned to task-based programming, in which an application’s execution is divided into tasks that can be run independently and in any order. Task-level speculation executes tasks before it is known whether their outputs will be needed as inputs to later tasks. It promises higher concurrency and improved strong scalability, provided the speculation is accurate enough that a large share of the speculative outputs is actually used.

Currently, many algorithms cannot fully utilize high-performance computing systems. Speculation can significantly improve scalability when there are too few “certain” tasks (tasks whose outputs are known to be needed) to fully occupy the machine but a larger number of likely tasks can be identified. The team applied their task-level speculative method to multiple synthetic task probability distributions and to ParSplice. ParSplice is a scientific application that, along with LAMMPS and LATTE, makes up the EXAALT framework for molecular dynamics–based atomistic simulations. The team also tested the method in a dynamic setting with preemptible, restartable tasks. In this setting, tasks can be paused so that the resource allocation can be reevaluated, then restarted after resources are reallocated. Reallocation can happen at fixed time intervals, when certain tasks are completed, or when a change of context dictates.
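The idea of filling an under-occupied machine with likely tasks can be made concrete with a toy calculation. The Python sketch below uses simplifying assumptions that are not from the paper. Each task occupies one worker for one scheduling round, and “certain” tasks are always useful. Idle workers are filled with the most likely speculative tasks first. The function name `expected_useful_work` and its parameters are hypothetical.

```python
def expected_useful_work(num_workers, num_certain, speculative_probs):
    """Return (expected useful tasks, expected wasted tasks) for one round.

    Hypothetical toy model: one worker per task, one round per task,
    certain tasks are always useful, and speculative task i is useful
    with probability speculative_probs[i].
    """
    # Certain tasks are scheduled first; they can never be wasted.
    scheduled_certain = min(num_certain, num_workers)
    idle = num_workers - scheduled_certain
    # Fill the remaining workers with the most likely speculative tasks.
    chosen = sorted(speculative_probs, reverse=True)[:idle]
    useful = scheduled_certain + sum(chosen)
    # Each speculative task is wasted with probability 1 - p.
    wasted = sum(1.0 - p for p in chosen)
    return useful, wasted


if __name__ == "__main__":
    probs = [0.9, 0.8, 0.5, 0.3, 0.2, 0.1, 0.05]  # hypothetical probabilities
    no_spec, _ = expected_useful_work(8, 2, [])
    spec, waste = expected_useful_work(8, 2, probs)
    print(f"without speculation: {no_spec:.2f} useful tasks per round")
    print(f"with speculation:    {spec:.2f} useful, {waste:.2f} wasted")
```

Consider 8 workers, 2 certain tasks, and the hypothetical probabilities shown. Speculation raises the expected useful work from 2 to 4.8 tasks per round, at the cost of 3.2 tasks’ worth of expected wasted work. This is why speculation pays off only when enough likely tasks can be identified.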

The researchers’ novel method fills a gap in the exploration of task-level speculative algorithms as a way to address scalability issues in the exascale era. It also points to significant potential payoffs with further development. Future work includes assessing the usefulness of reformulating problems as task-level speculative executions. It also includes developing efficient task-scheduling and resource-reallocation strategies that will allow such methods to be deployed at scale.

Andrew Garmon, Vinay Ramakrishnaiah, and Danny Perez. “Resource Allocation for Task-Level Speculative Scientific Applications: A Proof of Concept Using Parallel Trajectory Splicing.” 2022. Parallel Computing (September).

https://doi.org/10.1016/j.parco.2022.102936

Comparison between a uniform allocation of resources (bottom left) and the optimal allocation of resources (bottom right) when each speculative task can be assigned a probability of being useful (top). As the colors indicate, resources can be assigned according to individual task probabilities to maximize total expected throughput.
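The figure shows resources being assigned according to task probabilities. This can be sketched as a small optimization problem. The Python code below is an illustrative stand-in, not the authors’ formulation. It assumes (hypothetically) that a task’s rate of progress on n workers follows Amdahl’s law, S(n) = n / ((1 − f)n + f), with parallel fraction f. It then maximizes the expected throughput Σ p_i S(n_i). Because S is concave and increasing, a greedy rule yields an optimal integer allocation. The rule gives each successive worker to the task with the largest marginal gain p_i[S(n_i + 1) − S(n_i)]. All function names are hypothetical.

```python
import heapq


def amdahl_speedup(n, f):
    """Amdahl's-law speedup on n workers with parallel fraction f (0 < f <= 1).

    Written as n / ((1 - f) * n + f) so that n = 0 gives 0 (task not run).
    """
    return n / ((1.0 - f) * n + f)


def optimal_allocation(probs, num_workers, f=0.9):
    """Greedy allocation maximizing sum(p_i * S(n_i)) under the toy model."""
    if not 0.0 < f <= 1.0:
        raise ValueError("parallel fraction f must be in (0, 1]")
    alloc = [0] * len(probs)
    if not probs:
        return alloc
    # Max-heap (via negated keys) of the gain from adding one more worker.
    heap = [(-p * amdahl_speedup(1, f), i) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    for _ in range(num_workers):
        _, i = heapq.heappop(heap)
        alloc[i] += 1
        n = alloc[i]
        # The next worker's marginal gain shrinks because S is concave.
        gain = probs[i] * (amdahl_speedup(n + 1, f) - amdahl_speedup(n, f))
        heapq.heappush(heap, (-gain, i))
    return alloc


def uniform_allocation(num_tasks, num_workers):
    """Split workers as evenly as possible, ignoring probabilities."""
    base, extra = divmod(num_workers, num_tasks)
    return [base + (1 if i < extra else 0) for i in range(num_tasks)]


def expected_throughput(probs, alloc, f=0.9):
    """Expected useful progress per unit time: sum(p_i * S(n_i))."""
    return sum(p * amdahl_speedup(n, f) for p, n in zip(probs, alloc))


if __name__ == "__main__":
    probs = [0.9, 0.5, 0.1]  # hypothetical usefulness probabilities
    workers = 12
    for name, alloc in [
        ("uniform", uniform_allocation(len(probs), workers)),
        ("optimal", optimal_allocation(probs, workers)),
    ]:
        print(name, alloc, round(expected_throughput(probs, alloc), 3))
```

Take the hypothetical probabilities [0.9, 0.5, 0.1] and 12 workers. The greedy allocation is [8, 4, 0], versus the uniform [4, 4, 4], and it achieves a higher expected throughput. The least likely task receives no workers. Under this model, that task’s expected contribution is smaller than the diminishing returns from adding workers to the likelier tasks. This mirrors the trade-off between overall throughput and time-to-solution for highly likely tasks.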