By Rob Farber, contributing writer

Technical Introduction

Fossil fuel–based industrial processes and electricity production account for approximately half of all greenhouse gas emissions in the United States.[1] Commercial-scale multiphase reactors such as chemical looping reactors (CLRs) offer a promising approach for reducing industrial carbon dioxide emissions through carbon capture and storage technologies.[2] Heat from these chemical reactors can be harnessed to generate electrical energy while greenhouse gases produced from combusting fossil fuels are captured for storage. Multiphase reactors may also play an important role in direct air capture systems that could be deployed to extract existing greenhouse gases from the atmosphere.[3] [4] These reflect several technology approaches that can be used by the United States to address climate change, reduce greenhouse gas emissions, and assist with meeting the US Department of Energy’s (DOE’s) goal to decarbonize existing infrastructure and achieve net-zero emissions.[5]

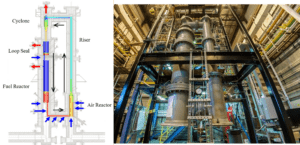

Successful operation of commercial CLRs for new or industrially unproven processes requires scaling up from laboratory designs and pilot-scale units, such as the National Energy Technology Laboratory's (NETL's) existing 50 kW CLR (Figure 1), to production scale. Because the physical scales differ so widely, such scale-up efforts are often time-consuming and labor-intensive and rely on trial and error. Many of these challenges could be mitigated by studying detailed modeling results from MFIX-Exa, an exascale-capable multiphase computational fluid dynamics (CFD) modeling tool.

Figure 1. Generic schematic of a CLR (left) and the 50 kW Chemical Looping Reactor at NETL (right). Sources: NETL (left image) and https://mfix.netl.doe.gov/workshop-files/2014/mfs/multiPhase_topHat.pdf (right image).

Jordan Musser (Figure 2), a research scientist at DOE's NETL and PI of the Exascale Computing Project's (ECP's) MFIX-Exa effort, is working to translate knowledge gleaned from NETL's existing 50 kW CLR into an accurate computer modeling tool that engineers can use to design industrial-scale gas-solid reactors, such as CLRs, and to troubleshoot them once deployed. By running MFIX-Exa on forthcoming exascale hardware, the team intends to show that it is a viable and accurate tool that scientists and engineers can use to model real-world behavior.

The MFIX-Exa effort matters because, as Musser notes, "Computational solutions give scientists and engineers a unique tool that lets them see inside the complex, corrosive environment of chemical reactors in a way that is not possible with instrumentation. Software gives them the ability to examine pressure, temperature, and the complex ways in which the reactor components interact with the gas-particle flows, all without affecting the processes inside the reactor."

Musser summarizes the team's progress so far: "Due to our efforts, we now have a computational fluid dynamics–discrete element model software that can leverage the extraordinary capabilities of the forthcoming generation of exascale supercomputing systems. We plan to further enhance the speed and accuracy of our codes once these supercomputers are available."

ECP’s Let’s Talk Exascale podcast, hosted by Scott Gibson, featured the MFIX-Exa project on the July 22, 2021, episode, “Scaling Up Clean Fossil Fuel Combustion Technology for Industrial Use.”

Societal Benefits

Musser explains that chemical-looping combustion at industrial scale matters to society because it greatly simplifies carbon capture. CLRs provide a viable pathway for industry to reduce greenhouse gas emissions and their impact on climate change.

In a CLR, the fuel (e.g., methane) reacts with oxygen held in a solid carrier to produce carbon dioxide and water vapor. The two are easy to separate because the water vapor can be condensed out, which makes the carbon dioxide gas relatively easy to capture for immediate use or storage. Conventional combustion is different: it produces a mixture of gases, and the carbon dioxide must be removed after combustion. That extraction is much more difficult when air is used for combustion, because the carbon dioxide is diluted by the large amount of nitrogen (N2) that the air brings with it.
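A quick stoichiometric estimate shows why the nitrogen matters. The sketch below is illustrative only and is not taken from MFIX-Exa. It assumes complete, stoichiometric combustion of methane (CH4 + 2 O2 → CO2 + 2 H2O), dry air of 21% O2 and 79% N2 by mole (argon lumped in with nitrogen), and complete condensation of the water vapor; the function name `dry_co2_fraction` is hypothetical.

```python
# Back-of-the-envelope sketch: CO2 mole fraction in the dry exhaust of
# stoichiometric methane combustion, CH4 + 2 O2 -> CO2 + 2 H2O.
# Assumptions: complete combustion, no excess oxygen, all water condensed,
# dry air = 21% O2 / 79% N2 by mole.

O2_PER_CH4 = 2.0                 # mol O2 consumed per mol CH4
CO2_PER_CH4 = 1.0                # mol CO2 produced per mol CH4
N2_PER_O2_IN_AIR = 79.0 / 21.0   # mol N2 that accompanies each mol O2 in air


def dry_co2_fraction(oxygen_from_air: bool) -> float:
    """Return the CO2 mole fraction of the exhaust after water is removed."""
    # Air-fired: the nitrogen that came with the oxygen leaves with the CO2.
    # Chemical looping: oxygen arrives on the solid carrier, so no N2 enters
    # the fuel reactor.
    n2 = O2_PER_CH4 * N2_PER_O2_IN_AIR if oxygen_from_air else 0.0
    return CO2_PER_CH4 / (CO2_PER_CH4 + n2)


print(f"air-fired combustion: {dry_co2_fraction(True):.3f}")   # 0.117
print(f"chemical looping:     {dry_co2_fraction(False):.3f}")  # 1.000
```

Under these idealized assumptions, CO2 makes up only about 12 percent of the dry exhaust from air-fired combustion (21/179 ≈ 0.117), whereas the dry exhaust from the fuel reactor is essentially pure CO2. Real systems depart from these ideal conditions, so actual numbers differ.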

Successful industrial-scale CLR carbon capture could have a significant economic and societal impact. "We have a fair amount of fossil fuel reserves in the United States," Musser observes. "The problem is greenhouse gases. If CLRs can be made efficient enough to be deployed broadly at a commercial scale, then we have a mechanism to significantly reduce carbon emissions from industrial fossil fuel use."

Can CLRs Be Efficient Enough?

The MFIX-Exa team is working to answer that question by building an accurate computer model informed by the NETL 50 kW CLR. Scientists and engineers could then use the model to design industrial-scale CLRs and to diagnose operational issues once those reactors are deployed. This makes MFIX-Exa a unique project that ties together software engineering, data from the NETL pilot-scale reactor, and exascale computing capability.

The quest for accuracy starts with understanding the behavior of the two primary components of a CLR: the fuel reactor and the air reactor. Because these components, and regions within each of them, hold very different concentrations of particles, modeling them creates a computational load balancing problem that can strongly and adversely affect the software's efficiency on massively parallel distributed supercomputers.

In the fuel reactor, oxygen carried by solid particles (typically a metal oxide) is used in place of air to combust a fossil fuel (e.g., methane). The spent oxygen carrier then travels to the air reactor, where it is regenerated with oxygen from air. The replenished carrier particles return to the fuel reactor, completing the chemical-looping cycle shown in Figure 1.
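For methane and a generic oxygen carrier written as MeO (a placeholder for a metal oxide, not the specific carrier used at NETL), the two steps of the loop and their sum can be written as follows:

```latex
\begin{aligned}
\text{Fuel reactor:}\quad & \mathrm{CH_4} + 4\,\mathrm{MeO} \rightarrow \mathrm{CO_2} + 2\,\mathrm{H_2O} + 4\,\mathrm{Me} \\
\text{Air reactor:}\quad & 4\,\mathrm{Me} + 2\,\mathrm{O_2} \rightarrow 4\,\mathrm{MeO} \\
\text{Net:}\quad & \mathrm{CH_4} + 2\,\mathrm{O_2} \rightarrow \mathrm{CO_2} + 2\,\mathrm{H_2O}
\end{aligned}
```

Adding the two steps cancels the carrier, so the net reaction is ordinary methane combustion. The difference is that the nitrogen in the air stays on the air-reactor side and never mixes with the CO2 leaving the fuel reactor.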

Exascale-Capable, but the Work Must Be Load Balanced

Exascale supercomputers provide the necessary hardware resources to evaluate the complex geometry of the reactor, which is represented by detailed mesh structures. The algorithms utilize these meshes to perform the computations to accurately model the variety of flow regimes, particle movements, chemical reactions, and thermal effects that occur inside the CLR. Musser explains the complexity of this behavior by noting, “There is an inherent dynamic load balancing problem in simulating a CLR which makes efficient use of HPC resources difficult. For example, the riser portion is relatively dilute in terms of solids concentration whereas the fuel reactor contains a dense particle bed, and within each component, local particle concentrations can vary greatly in space and time.”
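The effect of a dense bed next to a dilute riser can be illustrated with a toy calculation. This sketch assumes particle count is a reasonable proxy for work, uses invented per-box particle counts rather than MFIX-Exa data, and deals the boxes out to GPUs in equal contiguous runs; `split_evenly` and `imbalance` are hypothetical helpers.

```python
# Toy illustration of load imbalance from uneven solids concentration.
# Assumptions (hypothetical, not MFIX-Exa data): work is proportional to the
# number of particles in a box, and boxes are dealt out to GPUs in equal
# contiguous runs regardless of how many particles they hold.


def split_evenly(n_boxes: int, n_ranks: int) -> list[list[int]]:
    """Give each rank a contiguous run of the same number of boxes."""
    if n_boxes % n_ranks != 0:
        raise ValueError("this sketch assumes n_boxes divides evenly")
    per_rank = n_boxes // n_ranks
    return [list(range(r * per_rank, (r + 1) * per_rank)) for r in range(n_ranks)]


def imbalance(work_per_box: list[float], assignment: list[list[int]]) -> float:
    """Heaviest rank load divided by mean rank load (1.0 is perfect balance)."""
    loads = [sum(work_per_box[b] for b in boxes) for boxes in assignment]
    return max(loads) / (sum(loads) / len(loads))


# Eight boxes stacked vertically: a dense bed at the bottom, a dilute riser above.
particles_per_box = [50_000, 48_000, 45_000, 40_000, 2_000, 1_500, 1_000, 500]
assignment = split_evenly(len(particles_per_box), n_ranks=4)
print(f"imbalance: {imbalance(particles_per_box, assignment):.2f}")  # 2.09
```

Here the GPU holding the bottom of the bed carries about twice the average load (98,000 of 188,000 particles spread over four GPUs), so the GPUs assigned to the riser spend most of each step waiting.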

Massively Parallel GPUs Are Ideal for Particle Simulations

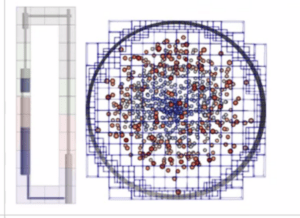

Addressing this load balancing issue is essential to making efficient use of the massively parallel GPUs in the forthcoming generation of exascale supercomputers. In terms of parallelism, CLR simulations are a good fit for GPUs because they spend much of their time simulating the behavior of very large numbers of particles on a mesh, also called a grid (Figure 3). Because the same operations are applied to every particle, this work maps naturally onto the single instruction, multiple data (SIMD) and single instruction, multiple threads (SIMT) execution models that GPUs use to support massive parallelism.

Figure 3. Illustration of the load balance for a CLR riser problem (left) and a corresponding representative distribution of particles on the grid (right). Note the variable density of points in the simulation and the variable grid size.
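The per-particle work described above can be sketched in SIMT style: one kernel function holds the instructions, and each thread applies them to a different particle. This Python sketch only mimics the structure; the `launch` loop runs serially here, whereas a GPU would run the iterations concurrently. The physics (vertical motion under gravity, semi-implicit Euler) and all names are hypothetical stand-ins, not MFIX-Exa's particle update.

```python
# SIMT-style sketch: the same kernel body runs for every particle index.
# Hypothetical physics: vertical motion under gravity, semi-implicit Euler.
from typing import Callable


def advance_particle(i: int, z: list[float], w: list[float], dt: float, g: float) -> None:
    """Advance particle i only; no particle reads or writes another's data."""
    w[i] += g * dt      # update the vertical velocity first
    z[i] += w[i] * dt   # then move the particle using the new velocity


def launch(n_threads: int, kernel: Callable[..., None], *args) -> None:
    """Serial stand-in for a GPU launch: one kernel call per thread index."""
    for i in range(n_threads):
        kernel(i, *args)


z = [0.0, 1.0, 2.0]    # particle heights (m)
w = [0.0, 0.5, -0.5]   # particle vertical velocities (m/s)
launch(len(z), advance_particle, z, w, 0.1, -9.81)
print(z, w)
```

Because each kernel call touches only index i, the iterations are independent of one another, which is the property that lets many GPU threads execute the same instructions at once.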

Modernizing the software and algorithms to exploit massive parallelism promises to bring new science to CLR simulation. Although the previous CPU-based software is highly accurate, it is also slow. GPUs promise large speedups, but Musser notes that porting the code to GPU hardware is a work in progress. As an added benefit, he notes, decades of work by GPU vendors to support collision detection in gaming applications have produced a mature capability that can be leveraged to detect collisions between particles on a grid.
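One classic technique from that lineage is the uniform-grid, or cell-list, broad phase: bin particles into cells at least one particle diameter wide, then test only pairs that share a cell or sit in adjacent cells instead of testing every pair. The sketch below is a generic 2D illustration of that idea for equal-sized disks; it is not MFIX-Exa's or any GPU vendor's implementation, and `colliding_pairs` is a hypothetical name.

```python
# Uniform-grid (cell-list) broad phase for equal disks of diameter d in 2D.
# With cells of width d, any two touching disks sit in the same cell or in
# neighboring cells, so only a 3x3 block of cells is searched per cell.
import math
from collections import defaultdict
from itertools import product


def colliding_pairs(points: list[tuple[float, float]], d: float) -> set[tuple[int, int]]:
    """Return index pairs (i, j), i < j, whose centers are closer than d."""
    cells: dict[tuple[int, int], list[int]] = defaultdict(list)
    for idx, (x, y) in enumerate(points):
        cells[(math.floor(x / d), math.floor(y / d))].append(idx)

    pairs: set[tuple[int, int]] = set()
    for (cx, cy), members in cells.items():
        for dx, dy in product((-1, 0, 1), repeat=2):
            # .get avoids inserting empty cells while iterating over the dict
            for j in cells.get((cx + dx, cy + dy), []):
                for i in members:
                    if i < j and math.dist(points[i], points[j]) < d:
                        pairs.add((i, j))
    return pairs


pts = [(0.0, 0.0), (0.5, 0.0), (3.0, 3.0), (3.2, 3.9)]
print(sorted(colliding_pairs(pts, d=1.0)))
```

For roughly uniform particle density, this replaces checks of all N(N-1)/2 pairs with a number of checks that grows roughly linearly with N.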

The AMReX Framework Enables Load Balancing and ECP Scientific Capability

The MFIX-Exa team's solution leverages the hybrid capabilities of the open-source AMReX software framework, which is designed for building massively parallel block-structured adaptive mesh refinement (AMR) applications. AMReX supports both CPUs and GPUs along with a variety of programming models and languages, including the flat homogeneous model; MPI; OpenMP; hybrid MPI/OpenMP; hybrid MPI/CUDA, HIP, or DPC++; and MPI/MPI. This enables scalability and portability across ECP's hardware platforms. Continuous compilation and nightly regression testing ensure the framework's stability. AMReX is developed by teams from Lawrence Berkeley National Laboratory, the National Renewable Energy Laboratory, and Argonne National Laboratory as part of ECP's Block-Structured AMR Co-Design Center.
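The "block-structured" part of that description means the domain is covered by a set of rectangular boxes that can be distributed across CPUs or GPUs. The sketch below shows only that decomposition step, with no adaptive refinement; `chop_domain`, its `max_size` parameter, and the domain dimensions are hypothetical and are not AMReX's API.

```python
# Cover a 3D index-space domain with boxes no larger than max_size cells per
# side. Boxes are stored as (lo, hi) corners with inclusive cell indices.
from itertools import product

Box = tuple[tuple[int, int, int], tuple[int, int, int]]


def chop_domain(n_cells: tuple[int, int, int], max_size: int) -> list[Box]:
    """Split cells 0..n-1 in each direction into runs of at most max_size."""
    runs = [
        [(lo, min(lo + max_size, n) - 1) for lo in range(0, n, max_size)]
        for n in n_cells
    ]
    return [
        ((xl, yl, zl), (xh, yh, zh))
        for (xl, xh), (yl, yh), (zl, zh) in product(*runs)
    ]


def cell_count(box: Box) -> int:
    """Number of cells in an inclusive (lo, hi) box."""
    (xl, yl, zl), (xh, yh, zh) = box
    return (xh - xl + 1) * (yh - yl + 1) * (zh - zl + 1)


# A tall, narrow domain loosely shaped like a riser (hypothetical sizes).
boxes = chop_domain((64, 64, 256), max_size=32)
print(len(boxes), sum(cell_count(b) for b in boxes))
```

Each box is an independent unit of work, which is what makes it possible to hand boxes to different GPUs and to move them between GPUs when the load shifts.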

AMReX's support for running particle simulations on dual grids is the key to addressing the load balancing issues.[6] AMReX also provides many additional benefits, including support for complex geometries, mesh pruning, and more, as described in the article "MFIX-Exa: A path toward exascale CFD-DEM simulations."

In the MFIX-Exa code, one grid is used for particle calculations and the other for fluid calculations. This separation helps because the two workloads behave differently:

- The fluid work remains constant over a simulation because it is dictated by the geometry of the reactor.

- The particle work can vary in space and time as particles may accumulate in different regions at different times throughout a simulation.

Musser notes that the code can load balance the particle grid independently of the fluid grid to even out the workload, so the amount of particle work per GPU stays roughly constant. He states, "We don't want some GPUs sitting idle while others are grinding away."
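The dual-grid idea can be sketched by giving the same set of boxes two costs and two independent box-to-GPU maps: a fluid map built once from cell counts, and a particle map rebuilt as the particles move. The particle counts are invented, and the `greedy_map` heuristic (heaviest box to the currently least-loaded GPU) is chosen only for brevity; it is not presented as the strategy MFIX-Exa or AMReX uses.

```python
# Dual-grid sketch: one fluid distribution map (fixed) and one particle
# distribution map (rebuilt from current particle counts). Hypothetical data.
import heapq


def greedy_map(costs: list[float], n_ranks: int) -> list[int]:
    """Assign boxes, heaviest first, to whichever rank is least loaded."""
    heap = [(0.0, r) for r in range(n_ranks)]  # (current load, rank)
    owner = [0] * len(costs)
    for b in sorted(range(len(costs)), key=lambda k: costs[k], reverse=True):
        load, r = heapq.heappop(heap)
        owner[b] = r
        heapq.heappush(heap, (load + costs[b], r))
    return owner


def imbalance(costs: list[float], owner: list[int], n_ranks: int) -> float:
    """Heaviest rank load divided by mean rank load (1.0 is perfect balance)."""
    loads = [0.0] * n_ranks
    for b, r in enumerate(owner):
        loads[r] += costs[b]
    return max(loads) / (sum(loads) / n_ranks)


N_RANKS = 4
cells_per_box = [32_768] * 8                     # fluid work: fixed by geometry
fluid_map = greedy_map(cells_per_box, N_RANKS)   # built once

snapshots = {  # particle work shifts as solids gather in different boxes
    "early": [30_000, 28_000, 20_000, 12_000, 8_000, 6_000, 4_000, 2_000],
    "late": [2_000, 4_000, 6_000, 8_000, 12_000, 20_000, 28_000, 30_000],
}
for label, particles_per_box in snapshots.items():
    particle_map = greedy_map(particles_per_box, N_RANKS)  # rebuilt each time
    print(
        label,
        f"fluid={imbalance(cells_per_box, fluid_map, N_RANKS):.2f}",
        f"particles_on_fluid_map={imbalance(particles_per_box, fluid_map, N_RANKS):.2f}",
        f"particles_on_own_map={imbalance(particles_per_box, particle_map, N_RANKS):.2f}",
    )
```

In this toy case the fluid map balances the fluid work perfectly but leaves one GPU with about 38 percent more particle work than average, while the separately built particle map brings that down to about 9 percent.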

Andrew Myers, lead developer of AMReX’s particle library, explains that “particle and hybrid particle-mesh methods often entail additional load balancing challenges beyond those involved in pure structured grid calculations. To help mitigate this, AMReX provides a number of load balancing tools, including work distribution strategies based on space-filling curves or on an approximate knapsack algorithm. The cost estimates used by these functions can either be heuristic or based on actual runtime cost measurements, which is particularly useful in particle codes like MFIX-Exa, for which the local cost of the advance per particle is not easy to estimate a priori. Under the right conditions, these tools can result in a significant decrease in the overall time-to-solution on problems of scientific interest.”
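As a rough illustration of the space-filling-curve strategy Myers mentions, the sketch below orders boxes along a Morton (Z-order) curve and cuts that ordering into contiguous pieces of roughly equal cost, which tends to keep neighboring boxes on the same GPU. The Morton curve, the midpoint cutting rule, and the names `morton_key` and `sfc_map` are assumptions for illustration; this is not AMReX's implementation, and the knapsack strategy is not shown. The `costs` argument could hold either heuristic estimates or measured runtimes.

```python
# Space-filling-curve distribution sketch: Morton (Z-order) ordering of box
# indices, then contiguous, cost-balanced chunks. Hypothetical helper names.


def morton_key(ix: int, iy: int, iz: int, bits: int = 10) -> int:
    """Interleave the low `bits` bits of three box indices into one key."""
    key = 0
    for b in range(bits):
        key |= ((ix >> b) & 1) << (3 * b)
        key |= ((iy >> b) & 1) << (3 * b + 1)
        key |= ((iz >> b) & 1) << (3 * b + 2)
    return key


def sfc_map(box_ids: list[tuple[int, int, int]], costs: list[float], n_ranks: int) -> list[int]:
    """Walk boxes in Morton order and split the walk into equal-cost pieces."""
    total = sum(costs)
    if total <= 0:
        return [0] * len(box_ids)
    target = total / n_ranks
    order = sorted(range(len(box_ids)), key=lambda i: morton_key(*box_ids[i]))
    owner = [0] * len(box_ids)
    walked = 0.0
    for i in order:
        # Place each box by where its midpoint falls along the cumulative cost.
        midpoint = walked + costs[i] / 2
        owner[i] = min(n_ranks - 1, int(midpoint // target))
        walked += costs[i]
    return owner


# A 4 x 4 layer of boxes; the two bottom rows hold a dense, costly bed.
ids = [(x, y, 0) for y in range(4) for x in range(4)]
costs = [4.0 if y < 2 else 1.0 for (_, y, _) in ids]
print(sfc_map(ids, costs, n_ranks=4))
```

With equal costs, each GPU in this example receives one 2 x 2 block of neighboring boxes; with uneven costs, the cut points slide along the curve so that each GPU gets a similar share of the total cost.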

The key takeaway, according to Musser, is that “we can use different strategies to distribute particle work and fluid work across system resources allowing efficient use of the hardware. The code can redistribute the CFD [computational fluid dynamics] particle work to keep GPU threads running efficiently and constantly. The software can re-grid on the fly. The key to efficient computation then depends on who owns a particle. Distributing the particle workload efficiently makes better use of the hardware to achieve a better time-to-solution. For this reason, the team rewrote the entire particle solver and modernized the legacy Fortran code to turn it into massively parallel modern GPU code. We also implemented a new fluid solver by changing our simulation approach from a Semi-Implicit Method for Pressure Linked Equations (SIMPLE) to a modern projection methodology because the solver had to be a reacting solver constructed for gas flows. We needed more than a CFD solver to account for the presence of the particles plus coupling terms to manage thermal (i.e., heat) and mass transfers caused by reactions.”
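For readers unfamiliar with projection methods, the simplest version is the classic constant-density, incompressible scheme sketched here, with advection and viscous terms treated explicitly, kinematic viscosity ν, and φ standing for pressure divided by density. It is shown only to illustrate the idea; the reacting, particle-laden formulation Musser describes adds terms for the particles and for heat and mass transfer that are omitted here.

```latex
\begin{aligned}
\mathbf{u}^{*} &= \mathbf{u}^{n} + \Delta t\left[-(\mathbf{u}^{n}\cdot\nabla)\,\mathbf{u}^{n} + \nu\,\nabla^{2}\mathbf{u}^{n}\right] \\
\nabla^{2}\phi &= \frac{1}{\Delta t}\,\nabla\cdot\mathbf{u}^{*} \\
\mathbf{u}^{n+1} &= \mathbf{u}^{*} - \Delta t\,\nabla\phi
\qquad\Longrightarrow\qquad
\nabla\cdot\mathbf{u}^{n+1} = \nabla\cdot\mathbf{u}^{*} - \Delta t\,\nabla^{2}\phi = 0
\end{aligned}
```

The first step predicts a velocity that ignores the divergence constraint, and a single pressure Poisson solve then projects it onto a divergence-free field. SIMPLE-type schemes, by contrast, iterate pressure and velocity corrections until they converge within each step.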

Exascale Challenge Problems to Demonstrate Success and Value to Industry

Before running on exascale hardware, the team has focused on verifying individual code components to ensure that they work correctly and can stand up to modeling large-scale gas-solid flows. Once exascale hardware becomes available, the goal is to run a large-scale challenge problem demonstrating that the software is not just a research code but a tool ready to address CLR modeling challenges.

Success on the challenge problem will do more than prove that the team has met its ECP performance obligations. The challenge problem was also chosen to demonstrate that the software can simulate large-scale commercial reactors during their design phase and diagnose operational issues once they are built. Such a demonstration is essential because, as Musser notes, "Computational solutions give scientists and engineers the ability to see inside the reactor in a way that is not possible with instrumentation. A computational approach provides a way to deal with pressure, temperature, and a caustic chemical environment."

NETL Provides Ground Truth Data

To gain recognition as a viable modeling tool, the software must demonstrate that it can accurately model a large, near-industrial-scale CLR. That is why the team is focused on simulating the NETL 50 kW pilot-scale CLR, whose data serve as a real-world proof point.

An overview of the physical models and solution methodology being followed by the MFIX-Exa team was published in the ECP special issue of the International Journal of High-Performance Computing Applications.[7],[8]

Summary

The MFIX-Exa team is uniquely positioned to create an exascale-capable software tool that addresses the basic scientific questions and engineering concerns that must be answered to model industrial-scale multiphase reactors. Using data from the NETL 50 kW pilot-scale CLR, the team aims to show that it has developed an accurate, modern software tool to help create a green economy.

Success means that scientists and engineers can use this tool to design and diagnose industrial-scale multiphase reactors such as CLRs. These devices offer a technology path that the United States can follow to meet its climate goals through decarbonizing existing infrastructure and achieving net-zero emissions.

The project also reflects a unique synergy that integrates modern software design practices, US investment in the ambitious pilot project at NETL that provides ground-truth data for model validation, and US investment in exascale computing. Beyond MFIX-Exa, this synergy offers a template for building and improving energy and carbon-management stewardship.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national laboratories and commercial organizations.

[1] https://doi.org/10.1177/10943420211009293

[2] https://www.exascaleproject.org/research-project/mfix-exa/

[3] https://iopscience.iop.org/article/10.1088/2516-1083/abf1ce/meta

[4] https://www.amazon.com/Carbon-Capture-Jennifer-Wilcox/dp/1461422140

[5] US Department of Energy, “The Quadrennial Technology Review,” 2015, http://energy.gov/qtr.

[6] https://amrex-codes.github.io/amrex/docs_html/Introduction.html

[7] https://www.osti.gov/biblio/1841162

[8] https://www.exascaleproject.org/publication/mfix-exa-leverages-cfd-dem-strengths-to-modernize-reactor-simulations/