Frontier: Guiding the Design and Construction of the Mechanical Systems

By Scott Gibson

Hi. In this podcast we explore the efforts of the Department of Energy’s (DOE’s) Exascale Computing Project (ECP)—from the development challenges and achievements to the ultimate expected impact of exascale computing on society.

This is episode two in a series on Frontier, the nation’s first exascale supercomputer. Joining us is David Grant of Oak Ridge National Laboratory (ORNL). David is the high-performance computing (HPC) lead engineer in ORNL’s Laboratory Modernization Division. He is responsible for ensuring every new supercomputer system that’s installed at the lab has the cooling it requires to reliably operate 24/7. With the upcoming Frontier exascale system, he must check and recheck many important design details before it is powered on. I interviewed David on September 28, 2021.

Our topics: Insights into the planning for Frontier, the system’s mechanical requirements, staying on budget, the talented team that’s deploying America’s first exascale supercomputer, and more.

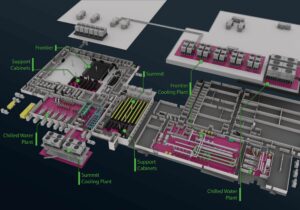

The Frontier exascale supercomputer data center at the Oak Ridge Leadership Computing Facility, ORNL. Credit: Andy Sproles/ORNL, US Department of Energy

Interview Transcript

Gibson: David, what are your responsibilities in preparing for Frontier?

Grant: My role for this project was to map out a technical solution for maintaining the desired environmental envelope within the data-center space, as well as the cooling system for the HPC system itself. Once that solution is formulated, I assist the project through the design and construction process by writing scopes of work, where we basically tell the contractor what we need and what we need to accomplish in order to enable this mission. Once construction commences, I oversee those construction activities. And once we hand the systems over to operations, we continue to optimize things on the mechanical side. So we get to see the process from its inception to, in most cases, almost the end of life. That’s kind of unique from an engineer’s perspective. I’m not just putting out designs and handing them over to the construction folks, who go out and build them, and then never seeing them again. We get to see the design, construction, and operation of the systems that we build, and throughout the whole process we’re trying to make sure that the system can maintain its mission and purpose and be as reliable and maintainable as possible, especially given the asset value of these systems. We certainly don’t want the mechanical system to be the reason the computer is down for any extended amount of time.

Gibson: What has the planning work you did for Frontier been like?

Grant: Yeah, so to map out a technical solution on the mechanical side, we look at all the HPC system components: the compute racks, support racks, and management racks. We assess the power consumption and the profiles of each of those categories, and we get the quantities so we can add it all up into the magnitude of the heat load within the data center. All the electrical power used by those components is turned into waste heat within the data center that has to be removed. We use working fluids to do that, which are largely air and water. Some of the load gets rejected to the air side of the data center and the rest to the water side, and then we collect all that waste heat and move it out of the data center.
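
The tally Grant describes can be sketched in a few lines of Python. This is a minimal illustration, not ORNL’s actual tool: the rack quantities, per-rack power draws, and air/water split fractions below are hypothetical placeholders, since the interview gives no per-category figures. The sketch relies only on the point Grant makes, that essentially all electrical power drawn by the racks ends up as heat that must be removed by air or water.

```python
from dataclasses import dataclass


@dataclass
class RackCategory:
    name: str
    quantity: int
    power_kw: float        # electrical draw per rack (hypothetical value)
    water_fraction: float  # share of that heat rejected to water (hypothetical)


def heat_loads(categories):
    """Return (water_kw, air_kw), assuming all electrical power becomes heat."""
    water_kw = sum(c.quantity * c.power_kw * c.water_fraction for c in categories)
    air_kw = sum(c.quantity * c.power_kw * (1.0 - c.water_fraction) for c in categories)
    return water_kw, air_kw


if __name__ == "__main__":
    # Placeholder numbers for illustration only; not Frontier's actual figures.
    racks = [
        RackCategory("compute", quantity=10, power_kw=200.0, water_fraction=1.0),
        RackCategory("support", quantity=4, power_kw=50.0, water_fraction=0.5),
        RackCategory("management", quantity=2, power_kw=10.0, water_fraction=0.0),
    ]
    water, air = heat_loads(racks)
    print(f"water side: {water:.0f} kW, air side: {air:.0f} kW")
```

Splitting by category keeps the water-side and air-side totals separate, since each is served by different cooling equipment.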

So we look at what kind of cooling solutions are available for what we find the data center will need. For Frontier, the heat loads, including the water flows and pressure drops, are given to us by the OEM, the original equipment manufacturer, which in this case is HPE Cray. Then we have to see how the system will be laid out within the data-center space to understand how those heat loads will be distributed within the space. And then we optimize the air-side and water-side cooling components to get that heat out efficiently and effectively. Frontier also required a new central energy plant for cooling, which is where we have large pumps, heat exchangers, and cooling towers to process this heat and ultimately reject all the waste heat to, in our case, the atmosphere outside the building. That whole process included demolishing existing laboratory space, coming back in to make extensive structural modifications to accommodate the 80,000-pound cooling towers that we put on the roof, and then laying out the equipment within the space to allow for future expansion.
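
The OEM-supplied water flows and pressure drops feed directly into pump sizing. As a rough sketch, not the project’s actual method, the standard relation for pump shaft power is volumetric flow times pressure rise divided by pump efficiency. The flow, pressure drop, and 75% efficiency used below are hypothetical placeholders.

```python
def pump_shaft_power_kw(flow_m3_per_s, pressure_rise_kpa, efficiency):
    """Shaft power (kW) = hydraulic power (flow * pressure rise) / pump efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    hydraulic_kw = flow_m3_per_s * pressure_rise_kpa  # m^3/s * kPa = kJ/s = kW
    return hydraulic_kw / efficiency


if __name__ == "__main__":
    # Hypothetical loop: 0.6 m^3/s against a 250 kPa total drop, 75%-efficient pump.
    print(f"{pump_shaft_power_kw(0.6, 250.0, 0.75):.0f} kW")
```

In a closed loop the pump must overcome the total pressure drop around the circuit, so losses in the piping, valves, and heat exchangers add to the drop specified across the cabinets.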

Gibson: We can safely say that all of the mechanical details matter, but will you give us a broad-brush view of the various aspects you review and analyze?

Grant: So once the scopes of work are written, proposals are made, and we review those against technical acceptance criteria. We look at all the nuts and bolts to make sure that everything needed to accomplish the goal of the statement of work is there. In the broadest terms, we need a cooling plant that can serve the 26-to-28-megawatt heat load coming from Frontier. So at the end of the day, we have to make sure that the size and quantity of the pipe, and the types and sizes of the pumps and heat exchangers, can all provide what is needed to serve that mission. Once those statements of work get past that review phase and are accepted, the project moves into construction, and in that phase we’re looking at submittals. We look at the specific pieces of equipment that will come from specific manufacturers and check what they’re offering in each submittal.
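
Why pipe and pump sizes follow from the heat load can be seen from the basic energy balance Q = m_dot * c_p * delta_T, which gives the water flow needed to carry a given load. The sketch below applies it to the 26-to-28-megawatt range Grant cites. The 10 K supply-to-return temperature rise is a hypothetical assumption (the interview does not state Frontier’s design temperature difference), and the water properties are rounded.

```python
WATER_CP = 4186.0       # J/(kg*K), specific heat of liquid water (rounded)
WATER_DENSITY = 1000.0  # kg/m^3 (rounded)
M3S_TO_GPM = 15850.32   # US gallons per minute in 1 m^3/s


def required_flow(heat_w, delta_t_k):
    """Mass flow (kg/s) and volumetric flow (US gpm) to carry heat_w at a given temperature rise."""
    mass_flow = heat_w / (WATER_CP * delta_t_k)
    gpm = mass_flow / WATER_DENSITY * M3S_TO_GPM
    return mass_flow, gpm


if __name__ == "__main__":
    for mw in (26, 28):
        # The 10 K rise is an assumption for illustration, not a Frontier design value.
        m, gpm = required_flow(mw * 1e6, delta_t_k=10.0)
        print(f"{mw} MW -> {m:.0f} kg/s, about {gpm:,.0f} gpm")
```

Under these assumptions, 28 MW works out to roughly 670 kg/s of water. Halving the temperature rise doubles the required flow, which is one reason the design temperature difference drives pipe and pump sizes.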

And so, if a cooling tower doesn’t meet a certain rating that we require, we can go back and work with the manufacturer or, in our case, the mechanical contractor, to get things where they need to be. Then, of course, during construction we’re doing field walks, so we’re watching the work progress and making sure that the piping routing isn’t going to negatively affect the performance of the system, and we’re always looking out for safety as well. On those field walks, we’re making sure that the technical intent of the design isn’t being compromised by the field decisions the construction workers have to make, because in the design phase we don’t give them every little detail about how to get from point A to point B with piping, say. We want to make sure the piping and instrumentation end up in the right positions and locations at the end of the day, and it’s better to catch problems during construction than at the very end of the project, when you’re trying to commission it.

Gibson: What are some of the specific mechanical needs for Frontier?

Grant: The supply temperature stability requirement is kind of unique. The manufacturer has laid out an envelope that could be pretty challenging, considering that the load swings of the system could be on the order of 18 megawatts. Being able to stage on the equipment needed to cool that kind of load swing while maintaining a supply temperature within a tight envelope is a challenge that we’re going to be working through as we get the system online. During peak operations, about three megawatts of heat will be rejected to our chilled-water cooling systems, which are fed by our chiller plants. And since Frontier is water cooled, the air-side cooling requirements for the compute cabinets themselves are minimal. Its cabinets are basically closed hot boxes with water going in and out and no fans, so the cabinets themselves are quiet, which is a major change from a typical data center.
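
Staging equipment on and off through an 18-megawatt load swing is, at its core, a control problem. The sketch below shows one common approach: a staging rule with a hysteresis band, so units do not cycle on small load fluctuations. It is an illustration under stated assumptions, not Frontier’s control sequence. The 5 MW per-unit capacity, eight-unit maximum, and 90%/70% thresholds are hypothetical, and the sketch decides only how many units run; it does not model supply-temperature regulation.

```python
def next_stage(current_units, load_mw, unit_capacity_mw, max_units,
               up_frac=0.9, down_frac=0.7):
    """Return how many cooling units to run for the new load.

    Stage up while the running units would be loaded above up_frac of capacity;
    stage down only while one fewer unit would still run below down_frac.
    The gap between the two fractions is a hysteresis band that keeps
    equipment from cycling on small load changes.
    """
    units = current_units
    while units < max_units and load_mw > units * unit_capacity_mw * up_frac:
        units += 1
    while units > 1 and load_mw < (units - 1) * unit_capacity_mw * down_frac:
        units -= 1
    return units


if __name__ == "__main__":
    units = 1
    # Hypothetical loads (MW): an 18 MW swing up, a partial dip, then back down.
    for load in (3, 21, 17, 3):
        units = next_stage(units, load, unit_capacity_mw=5.0, max_units=8)
        print(f"{load:>3} MW -> {units} units")
```

At 17 MW the rule holds five units rather than dropping to four, because it stages down only once the load falls below 14 MW (four units at 70%). That dead band trades a little efficiency for fewer equipment starts and stops.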

Gibson: You not only have to ensure that the mechanical systems are up to standard for the supercomputer, but you’re also responsible for staying within budget. How do you do that? How do you balance the technical aspects of your job with the fiscal, or budgetary, ones?

Grant: So the cost of doing a project this size over a schedule that spans years is challenging, especially when it spans a global pandemic. One strategy we implemented that I believe had the biggest payback was doing an early procurement of the largest equipment with the longest lead times. That effectively locks in prices on those pieces of equipment early, and you can get them on site, so you know you’ll have the big pieces of the mechanical system when you need them. So I think that’s the best budgetary strategy we used.

Gibson: David, you and the other members of the Frontier team shoulder an impressive level of responsibility in standing up the nation’s first exascale supercomputer. Describe for us the team dynamics that are involved in supporting the mechanical efforts for Frontier.

Grant: Yes, and like you mentioned, we don’t shoulder it alone, even as our internal group here. We have an integrated design and construction team that includes other mechanical engineers within our division, architects, electrical and mechanical contractors, and a commissioning agent. Not only do we leverage those teams, but we also have a deep bench internally here at ORNL. Between our mechanical utilities engineers, the cooling-system operators, the facility management team, and the HVAC mechanics, we have a full team contributing their expertise. We’re continuously providing information to those different pieces of the team and seeing what optimization opportunities there are, so that at the end of the road, when we hand the system over to operations, they’ll have something that they’re familiar with and actually had input on. The heavy lift comes from the men and women fabricating everything. This system has headers inside the central energy plant that are 36 inches in diameter, and the crews stand up those mechanical piping systems safely, with pride, and with a willingness to discuss other ways of accomplishing things if they see a better way. Having that baked into the project really puts it on a trajectory for success.

Gibson: What’s your assessment of the state of progress in having Frontier ready?

Grant: So we are cabinet ready, and during the first month of having everything connected to the system, we will have some more commissioning activities, mainly surrounding the controls optimization needed to put the system on sound footing for the next five years. On previous projects we’ve done load-bank testing for this type of system, but given its sheer size, 26 to 27 megawatts on this cooling system, doing a load-bank test economically is pretty hard. So if we can get time with the actual compute cabinets connected, it makes a lot of economic sense, though it makes things interesting right down to the wire, when we have to turn the system over to the NCCS [ORNL’s National Center for Computational Sciences] folks to do their side of things. So for probably the next three to four weeks we are going to be hard at it, making sure that the control system is sound and ready to stage up and down with varying loads from the system.

Gibson: Okay, well, thank you for joining us.

Grant: Thank you!

Gibson: Hey, thank you for listening. We’ll delve into other aspects of Frontier coming up, along with a lot more about exascale computing on Let’s Talk Exascale.

Related Links

- Pioneering Frontier: David Grant: Planning Ahead

- The Pioneering Frontier article series

- The Road to Exascale

- Exascale Computing’s Four Biggest Challenges and How They Were Overcome

Frontier Construction Features:

- 09/29/21—Stunning Specs: What’s Inside the Nation’s First Exascale Supercomputer Facility?

- 05/20/21—OLCF Announces Storage Specifications for Frontier Exascale System

- 12/11/20—Building an Exascale-Class Data Center

- 09/23/20—Powering Frontier and complementary Photo Story

- 01/26/20—Making Room for Frontier