E3SM-MMF: Forecasting Water Resources and Severe Weather with Greater Confidence

By Scott Gibson

Mark Taylor, Sandia National Laboratories

Scientists aim to forecast water resources and severe weather with greater confidence and understand how to deal with associated changes to the food supply. Having those capabilities is important to multiple sectors of the US and global economies.

A subproject within the US Department of Energy’s (DOE’s) Exascale Computing Project (ECP) called E3SM-MMF is working to improve the ability to simulate the water cycle and the processes around precipitation.

Our guest in the latest episode of ECP’s podcast, Let’s Talk Exascale, is Mark Taylor of Sandia National Laboratories, principal investigator of the E3SM-MMF project.

Topics discussed: what is meant by cloud-resolving and Earth system modeling, what E3SM-MMF refers to, a little bit about DOE’s history with Earth system modeling, computational demands, efforts at ongoing improvement, benefits to society, progress in preparation for exascale computing, why GPUs are advantageous, performance and application advances expected at the exascale, and the anticipated legacy of E3SM-MMF.

Interview Transcript

Gibson: The E3SM-MMF project is developing a cloud-resolving Earth system model. Will you explain the terminology? How do you define ‘cloud resolving’?

Taylor: That refers to the atmospheric component. But, first, let me just say a few things about an Earth system model, because that’s kind of a collection of several models in their own right: an atmosphere model, a land model, an ocean model, and sea ice and land ice models, all coupled together in a big software framework. And cloud-resolving refers to the resolution in the atmosphere model.

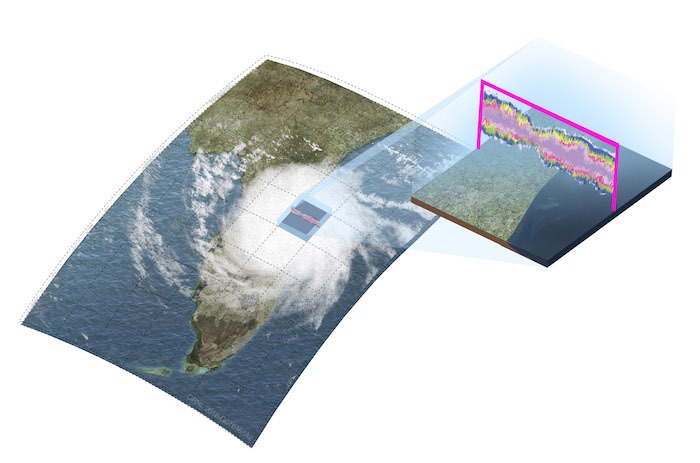

In the E3SM-MMF project’s multiscale modeling framework, a cloud-resolving model is embedded within a global model of Earth’s atmosphere. The cloud-resolving model improves the ability to simulate the many processes responsible for cloud formation. Credit: the E3SM-MMF project

The name implies resolving individual clouds, but that is not quite what we mean. We mean the model has the ability to resolve the processes that are responsible for cloud formation.

In traditional models, there isn’t enough resolution to represent these processes, so they have to be approximated with parameterizations. In a cloud-resolving model, we get the resolution up to around one kilometer, where you can start to resolve these processes in your simulation. So, we call that a cloud-resolving model. And at those resolutions, we can turn off these parameterized approximations and hopefully get better simulations.

Gibson: E3SM-MMF is quite an initialism. Will you explain what it refers to?

Taylor: There are really two projects. There’s the parent project, called E3SM, that’s developing the Earth system model. E3SM stands for Energy Exascale Earth System Model because of our focus on problems related to the Department of Energy and exascale computing. And then MMF is a configuration of the E3SM being developed especially for exascale computers. MMF stands for Multiscale Modeling Framework, and that introduces some multiscale aspects. It’s an algorithmic approach to get some aspects of a cloud-resolving model without running the entire model at this one-kilometer resolution.

Gibson: Mark, please tell us about DOE’s history with Earth system modeling and the origins of E3SM and E3SM-MMF.

Taylor: It is a rather long history. I’ve been in this business since I got my degree in 1992, so quite a while.

The E3SM is a relatively new project. It started about five or six years ago. DOE basically reorganized their whole climate program around this E3SM project. The rationale was two things. DOE’s Office of Science has this mission to study the Earth system. They focus on particular things that will have societal impacts, like on agriculture and energy production. DOE also leads the US’s efforts to build these exascale computers.

DOE wanted to ensure that they had an Earth system model for their science mission that would run on these upcoming exascale platforms. So, about five or six years ago, we started with the CESM. That’s the Community Earth System Model. That’s a model that’s been developed through a collaborative effort within the National Science Foundation and Department of Energy for decades now.

It runs very well on traditional CPU supercomputers. The goal of the E3SM flavor of that model, or new version, was to really focus on these exascale architectures. At the time ECP started, we didn’t quite know what the architectures would be. But now that’s kind of settled down, and I use the shorthand for that as GPUs. All the new exascale machines will basically get their horsepower from GPUs, graphics processing units. So, our rationale now is to get an Earth system model running on GPU systems.

Gibson: What are the computational demands of a global Earth system model?

Taylor: Yeah, so Earth system models have traditionally been able to consume all the cycles. We have an endless need for compute cycles. And the two biggest drivers for that are throughput and resolution. Resolution is what we talked about a little earlier—the goal, the push to get to cloud-resolving resolution. One thing we know is that as we improve these models, one of the best ways to get better, more-accurate predictions is to increase the resolution of the model. But that’s incredibly expensive. If you double the resolution, you need eight times more horsepower to run the same simulation.

If you look at the most recent IPCC [Intergovernmental Panel on Climate Change] climate change assessment report, which collects Earth system model results from many different groups, typically running at around 100-kilometer resolution, to get down to 1 kilometer, we’re talking 100⁸, or 10¹⁶, times more computing power than the community is using in those simulations. So, a huge demand there for resolution. And then the other driver is throughput. It depends on the science you’re looking at, but many simulation campaigns need 100-year-long simulations. You could think of an Earth system model as like a weather forecast model. But it’s run for much longer, so we’re not interested in individual storms, but the statistics of all the future storms. So, you have to run 40-year or 100-year simulations. And so your time-to-solution is critical. If you want to run a 100-year simulation, you don’t want it to take 10 years to finish. We have throughput demands, roughly speaking, that need to be in the ballpark of five simulated years per day.

With today’s hardware, what we see in the last decade or so is that individual processors are not getting much faster, and, in some cases, they’re slower. But the new computers have many more of them. And so trying to use those to make the model run faster is a big challenge.
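The arithmetic in this answer can be sketched in a few lines of Python. This is an illustrative sketch only, not code from E3SM: the function names are hypothetical, and the cubic power law is an assumption that simply extrapolates the quoted rule that doubling the resolution takes eight times the horsepower.

```python
def relative_cost(refinement: float, exponent: float = 3.0) -> float:
    """Relative compute cost when the grid spacing shrinks by `refinement`.

    Assumption: cost follows a power law; exponent=3 reproduces the quoted
    rule that doubling the resolution costs eight times as much.
    """
    return refinement ** exponent


def wall_clock_days(simulated_years: float, sypd: float) -> float:
    """Days of wall-clock time at `sypd` simulated years per day."""
    return simulated_years / sypd


def required_sypd(simulated_years: float, wall_clock_years: float) -> float:
    """Throughput implied by finishing a run in `wall_clock_years`."""
    return simulated_years / (wall_clock_years * 365.0)


if __name__ == "__main__":
    # Doubling the resolution: 2**3 = 8 times the cost.
    print(relative_cost(2))
    # A 100-year simulation at five simulated years per day takes 20 days.
    print(wall_clock_days(100, 5))
    # Taking 10 years to finish a 100-year run means under 0.03 years per day.
    print(round(required_sypd(100, 10), 4))
```

At the target of five simulated years per day, a century-long run finishes in about three weeks, which is why throughput matters as much as resolution.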

Gibson: Of course, models are only as good as the data that’s fed into them. How can the E3SM-MMF project ensure the quality of the Earth system model data so that the simulation results are valuable?

Taylor: In our project, roughly speaking, we really divide the work into two pieces, about a fifty–fifty split. About fifty percent goes to getting the model to run well on the computer, and we spend probably the other half of our time on exactly that question: making sure the model is doing a good job scientifically. The goal of our project, getting into cloud-resolving resolutions, is to improve our ability to simulate the water cycle and the processes around precipitation. So, we constantly evaluate the model and how well it can do on these types of features.

A couple of examples of areas where today’s models don’t do a good job are in what they call MCSes, mesoscale convective systems. These are convective storms that sweep across the Midwest in the continental US. Getting that right will be a big improvement in our model. Closely related to that is the diurnal cycle of precipitation. In a climate simulation or Earth system simulation, you want to be able to predict the total amount of precipitation a region is going to get, but you also want to know, when is it going to rain? If you’re getting the right answer for the wrong reason, say it’s raining at the wrong time, or drizzling all day instead of producing thunderstorms, then you know something’s wrong with your model. So, the diurnal cycle of precipitation is another metric where we’re constantly evaluating our model against observations to measure our improvements.

One more, even more complex, example, kind of an emergent behavior that’s hard to get right, is the MJO, the Madden–Julian oscillation. That’s a forty-day pattern in precipitation in the tropics. It is just another example of that type of thing where we compare the model against data and can document our improvements as we increase our resolution and add these other new algorithms.

Gibson: What do you see as the potential benefits of Earth system modeling to society?

Taylor: Earth system models are not quite the same as weather forecast models, but you can think of them as weather forecasting. But we typically run longer climate-like simulations. Better forecasts through modeling have been a huge economic benefit to society.

On the climate side, we focus on science questions of understanding the Earth system. But I think a better understanding of these also will have a similar type of societal and economic impact. The E3SM project is working on two big examples. The first is improvements in the water cycle (storms, cloud formation), because that’s related to rain, flooding, and droughts. So, understanding the potential changes and future statistics of fresh water supplies through rain, floods, droughts, and things like that will be a big benefit to society. The second area, which we haven’t mentioned too much, is sea level rise, a big focus of our work on the cryosphere. The ice sheet work is to get better predictions of sea level rise in the future, and knowing what’s going to happen there could have a big impact on our coastal communities.

Gibson: The first exascale systems will be in operation next year, but in the meantime, E3SM-MMF has been getting ready for those machines by running on pre-exascale supercomputers. How well has the team progressed in that respect?

Taylor: Exascale, for us now, is getting our code to run well on GPU systems. We really focus on good performance on GPU systems that we couldn’t get on CPU systems. We don’t want to just run on the GPU because it’s available—we want to run better and faster and more efficiently. And that’s turned out to be a lot harder than we thought and is taking a lot longer. But we’re finally starting to get some good results along the lines where we can really use these machines to do things we couldn’t do for the same power on the CPU system.

We have about, I’d say, twenty-five percent of our code ported and in that situation, and the remaining seventy-five percent is partially done. So, right now, for example, we can run the atmosphere-only component of the Earth system model well on pre-exascale GPU machines. And by next year or maybe the year after, we hope to run, as we get more and more pieces ready, the full Earth system model on a GPU machine.

Gibson: For those who don’t know, will you explain why the use of GPUs is advantageous?

Taylor: The biggest [advantage] is you get more cores, more processing units. So, we’re really power constrained, right? DOE can build a 20- to 30-megawatt supercomputer, but they can’t build a 200-megawatt supercomputer. GPUs will allow you to get more of these cores for less power. For 30 megawatts, you can get more cores and potentially do a lot more calculations than you could with a more traditional architecture. So, a common refrain is that, ‘The CPUs are great, and our codes run well on CPU systems, but they’re not going to get us to exascale, at least not with affordable power consumption.’

Gibson: A few minutes ago you mentioned the aim of running better, faster, and more efficiently. How would you define that in terms of the goals that you have for exascale computing when those systems are available?

Taylor: You know, we’re in a situation where we’ve been running Earth system models on big CPU machines for a long time. So, what’s exascale going to get us that those traditional architectures haven’t been able to? It’s really either more efficient, meaning you can do more simulations, or faster, or higher resolution. And I think GPU systems can actually do all three of those for us with careful coding, especially by the time we get to exascale. Actually, maybe even a fourth one: having a code that runs on GPU systems will open up more cycles to the climate community, because there are a lot of GPU systems out there. It will also make the simulations that we do more efficient, so we can run more of them, such as large ensembles, and do more science with the same work. And then we can push on these exascale issues, which means getting the model to run at cloud-resolving resolution.

Gibson: Mark, what breakthroughs could exascale computing make possible in the type of work your team is doing?

Taylor: The biggest breakthrough in the cloud-resolving field is being able to run a climate model, a century-long simulation, at cloud-resolving resolutions. So, we remove this approximation, this convective approximation, which is responsible for a lot of uncertainty in climate projections, in climate simulations. We don’t know what’s going to happen because it’s never been done before, but we hope to run an Earth system model at a cloud-resolving resolution without these approximations. We expect this to give us significantly better results and also build more confidence in the results.

Gibson: What do you believe will be the enduring legacy of the E3SM-MMF project?

Taylor: I’ll repeat myself a little bit, but I think it’s the two things we’ve just touched on. The first is a model that runs well on GPUs, because that opens up more cycles to the community and makes these high-resolution simulations more feasible. I’d say on the CPU systems, they might not even be possible to do. And then from the science point of view, every year, improving the models makes the simulations more trustworthy. So, you have more confidence in our understanding of the Earth system and the ability to predict what’s going to happen on a changing planet.

Gibson: Thanks for being on the podcast.

Taylor: Thank you. It was fun.