Researchers funded by the Exascale Computing Project (ECP) have extended the sparse direct solver STRUMPACK to GPU frameworks, providing more flexibility and efficiency for execution of large-scale sparse linear solvers on high-performance computing infrastructures. The team’s novel algorithm delivers efficient numerical factorization and triangular solve by offloading dense linear algebra operations and sparse scatter–gather operations between frontal matrices to reduce data synchronization and kernel launch overhead costs. On 4 nodes of the Oak Ridge National Laboratory’s Summit system, their code runs ∼10× faster when using all 24 V100 GPUs compared to when it uses only the 168 POWER9 cores. On 8 Summit nodes, using 48 V100 GPUs, the sparse solver reaches over 50 TFlop/s. Compared to SuperLU, on a single V100, the implementation is on average 5× faster. The team’s research was published in the February 2022 issue of Parallel Computing.

The algorithm targets large sparse linear systems derived through discretization of partial differential equations using finite elements, finite volumes, or finite differences on structured or unstructured meshes. The algorithm starts with an analysis phase to determine peak memory usage and memory requirements and then allocates memory to a single GPU; memory is reused for maximum efficiency. Overall, high performance is achieved by fitting entirely in GPU memory the submatrix factorizations corresponding to subtrees of the multifrontal assembly tree. The subtree is traversed level by level using customized CUDA and HIP kernels for smaller frontal matrices and vendor-optimized libraries (e.g., cuBLAS and cuSOLVER for NVIDIA GPUs, rocBLAS and rocSOLVER for AMD GPUs) for larger frontal matrices. The multi-GPU version of the team’s code uses SLATE as a modern GPU-aware replacement for ScaLAPACK. ECP also funded SLATE’s development.

The team’s method improves the state of the art by incorporating batched dense linear algebra routines and variable-sized batched LU factorization and fully exploiting the potential of GPU-accelerated nodes. Future work includes improving memory management in the solver to reduce bottlenecks during factorization of smaller linear systems and developing new tricks for batched dense matrix operations. The team is also working to port and optimize STRUMPACK preconditioners to their algorithm. Future new library releases (e.g., new batched dense linear algebra kernels in the MAGMA library) also are expected to result in improved performance for the team’s implementation. The team’s open-source code is available through the STRUMPACK library.

Pieter Ghysels and Ryan Synk. “High Performance Sparse Multifrontal Solvers on Modern GPUs.” 2022. Parallel Computing (February).

https://doi.org/10.1016/j.parco.2022.102897

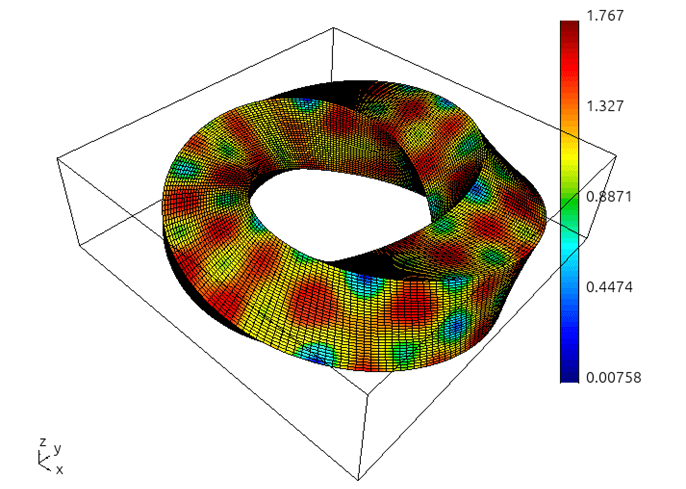

The second-order Maxwell equation on a toroidal domain is discretized using Nédélec finite elements using the MFEM package developed by the Center for Efficient Exascale Discretizations, an ECP co-design center. The resulting large sparse linear system was solved using the STRUMPACK sparse direct solver on OLCF’s Summit machine.