By Rob Farber, contributing writer

Technology Introduction

The Exascale Computing Project’s (ECP’s) ExaFEL effort aims to help researchers who are making molecular movies using the Linac Coherent Light Source (LCLS). The ExaFEL exascale data analysis workflow for serial femtosecond crystallography will assist in observing the dynamic movement of atoms.[1] The LCLS is located at the SLAC National Accelerator Laboratory and is operated by Stanford University for the US Department of Energy. The project builds on a prior demonstration project and collaboration among NERSC, ESnet, and SLAC.[2]

LCLS is the world’s first hard x-ray free-electron laser facility, which makes it a superb instrument for observing the dynamics of atomic interactions in a molecular system. This is due in part to the instrument’s resolving power (i.e., its ability to resolve atomic-level detail, because x-rays have a much shorter wavelength than visible light) combined with the ultrafast pulses and brightness (also referred to as power) of the laser.[3]

Scientists use ultrafast pulses of the powerful LCLS laser energy to illuminate a carefully prepared sample of some system of interest. The sample can be chosen to elucidate a chemical reaction, how photosynthesis works, the formation of chemical bonds, the acceleration of reactions through catalysis, and more.[4] Data are captured by sensors during each laser pulse and processed by the LCLS workflow to effectively create a stop-motion snapshot of atoms and molecules in the system.[5] The concept is similar to that of a strobe light, which can be used to illuminate moving objects and create the visual appearance of a stop-motion image. SLAC provides a short video explaining the concept. Unlike a camera capturing a picture, the LCLS workflow must use computationally expensive x-ray diffraction algorithms to process each x-ray snapshot.

Creating a movie from these x-ray snapshots is computationally challenging because each x-ray pulse destroys the sample. This means that the x-ray snapshots cannot simply be viewed one after the other, the way we see dancers moving on a dance floor when a strobe light illuminates them.[6] Instead, scientists use sophisticated algorithms that examine large aggregates of x-ray snapshots, in which each snapshot presents a randomly oriented view of the sample, to organize and piece together a molecular movie that captures the dynamics of how the atoms move over time.

The complexity of the algorithms, coupled with the large number of snapshots that must be processed, makes molecular movie generation a very data-intensive and computationally expensive task. The scientific benefits are undeniable, as the resulting movies provide an invaluable and unique source of experimental observation (some transformative examples are shown here). Scientists study these movies to create and verify or refute hypotheses about the dynamics of atomic behavior in their system of interest. The ability to observe and form hypotheses that are verified or refuted by data is a foundation of the scientific method.

Need for Exascale Computing

Accelerating the LCLS workflow is essential because it gives scientists results while their experiment is running, so they can collect the best data during their time on LCLS. Real-time results give experimentalists the opportunity to make adjustments and gather better, more informative data. The result is better science and better utilization of the instrument.

Performance is vital to processing data from the LCLS-II upgrade because the laser can be programmed to operate at 1 million pulses per second, compared with the 120 pulses per second of the current LCLS laser.[7][8] The faster pulse rate will generate orders of magnitude more data that must be processed quickly. Exascale supercomputing hardware provides the network and computing capability needed to handle the massive increase in data produced by the LCLS-II sensors. Amedeo Perazzo, ExaFEL PI and Controls and Data Systems Division director at the SLAC National Accelerator Laboratory, notes, “Both now and in the future, fast turnaround is necessary so scientists can make the best use of their time at LCLS and are not flying blind.”
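To put the two pulse rates quoted above in perspective, the ratio can be worked out directly. The short Python sketch below uses only the 1 million and 120 pulses-per-second figures from the text. Treating data volume as proportional to pulse rate (a fixed amount of data per pulse) is an illustrative simplification, and the function name is hypothetical.

```python
import math

LCLS_PULSES_PER_SECOND = 120            # current LCLS rate quoted in the text
LCLS_II_PULSES_PER_SECOND = 1_000_000   # programmable LCLS-II rate quoted in the text


def pulse_rate_increase(old_rate: float, new_rate: float) -> tuple[float, float]:
    """Return (ratio, orders_of_magnitude) between two pulse rates."""
    ratio = new_rate / old_rate
    return ratio, math.log10(ratio)


ratio, orders = pulse_rate_increase(LCLS_PULSES_PER_SECOND, LCLS_II_PULSES_PER_SECOND)
print(f"Pulse rate increases about {ratio:,.0f}x, roughly {orders:.1f} orders of magnitude")
```

Under that simplification, the maximum pulse rate alone accounts for nearly four orders of magnitude. Actual data volumes depend on the detectors and on the repetition rate each experiment chooses.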

Rethinking the Current Workflow

Adapting the current tools so they can run on the forthcoming exascale hardware requires innovative thinking and new approaches.

Perazzo notes that the ExaFEL team must consider new algorithms and computing frameworks to leverage GPUs and other high-performance capabilities in the forthcoming US exascale supercomputers. These new approaches mean the team must replace or augment existing CPU-only algorithms and computing frameworks. The expanded capability afforded by GPU-accelerated machines, along with new AI technology, enables the team to explore new approaches that can increase the resolution of the computed results and ultimately improve the quality of the movies viewed by scientists.

Creation of Snapshots

GPUs are instrumental in generating diffraction patterns of multiple conformations of a protein sample to account for beam fluctuations, parasitic beamline scattering, and detector noise. These simulated images will be leveraged for characterizing the performance of the new algorithms under realistic conditions while the team waits for large datasets to be produced by future LCLS-II experiments.

Making Molecular Movies

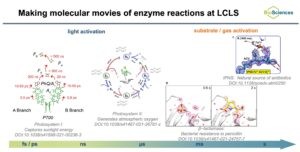

Chuck Yoon, Advanced Methods for Analysis Group lead at the SLAC National Accelerator Laboratory, observes, “We want to sample an ensemble set of experiments from the initial state to their final state of the system. This requires sophisticated and established algorithms to reconstruct the pathway.” He notes that making movies of molecular systems can require processing data collected over timeframes ranging from femtoseconds (10⁻¹⁵ second, or 1 quadrillionth of a second) to minutes, owing to the orders-of-magnitude variation in the reactions’ timescales. Many snapshots must be taken to capture a few fleeting moments when some of the most interesting conformational changes occur. Figure 1 illustrates the order-of-magnitude variation in the timescale for a spectrum of important reactions being studied with LCLS. In addition to improving performance, Yoon notes, “the team is looking to use AI and GPU technology to create and establish new higher-resolution algorithms that can run in the desired timeframe.”

Figure 1. Various timescales being studied at LCLS. Picture credit: Philipp Simon, Junko Yano, and Jan Kern (LBNL).

Science Drivers

According to SLAC, the LCLS has transformed science and pushed boundaries in numerous areas of discovery.[9] The high repetition rate and ultrahigh brightness of the current laser have made it possible to determine the structure of individual molecules and map out their natural variation in conformation and flexibility. Structural dynamics and heterogeneities, such as changes in the size and shape of nanoparticles or conformational flexibility in macromolecules, are the basis for understanding, predicting, and eventually engineering functional properties in the biological, material, and energy sciences.[10]

The value of this research has proven to be extraordinary in both breadth and depth while offering a pathway for scientists to significantly impact society.

Highlighting just one aspect, Nicholas Sauter, senior scientist in the Molecular Biophysics and Integrated Bioimaging Division at Berkeley Laboratory, notes, “Scientists use LCLS and the associated workflow data analysis tools to study the basics of how nature derives energy from sunlight and splits water to create oxygen. Understanding these basics gives us the knowledge we need to act as the shepherd for our environment.” The impact and value of this and a number of other LCLS research focus areas, in which scientists are taking advantage of the capabilities of this superb instrument, are described in the SLAC article, “10 Ways SLAC’s x-ray Laser has Transformed Science.”

A short excerpt featured in the SLAC public lecture, “Photosynthesis: How Plants Build the Air we Breathe – Atom by Atom” provides an example of a molecular movie that scientists used to study photosynthesis.

With over a decade of successful operation, LCLS makes a compelling case for the upgrade to the next-generation LCLS-II facility, which will be based on advanced superconducting accelerator technology (e.g., continuous-wave RF and tunable magnetic undulators).[11] The technical details of this upgrade are beyond the scope of this article, but think in terms of a brighter and faster laser: LCLS-II will provide fully coherent x-rays (at the spatial diffraction limit and at the temporal transform limit) in a uniformly spaced train of pulses with programmable repetition rates of up to 1 MHz and tunable photon energies from 0.25 to 20 keV.[12] It will also provide coherent x-ray pulses at photon energies that significantly exceed those available at LCLS—up to 25 keV at 120 Hz.[13]

“New Science Opportunities Enabled by LCLS-II X-Ray Lasers” highlights the new opportunities enabled by this faster and brighter instrument.

Increased Computational Throughput for Better Experimental Results

How fast the ExaFEL workflow can deliver results is a key component in realizing good experimental data. The LCLS-II upgrade will require exascale-class data processing to prevent computational throughput from becoming a serious limitation.

Access to the current LCLS facility is in high demand, and competition to use the facility is fierce. Because it is rare for a researcher to be awarded time at the facility, every moment of LCLS operating time is precious. The research team must get it right the first time. A failure to collect meaningful data can be devastating both scientifically and in terms of funding, as the cost to research groups is high. Perazzo observes, “Sample preparation is costly as is the per-hour operating cost. Typically, a group will operate the LCLS for 5 days utilizing 12-hour shifts per day. During that time, the group will collect and process copious amounts of data.”

Figure 2. Amedeo Perazzo, Controls and Data Systems Division director at the SLAC National Accelerator Laboratory.

An interactive computational workflow that provides researchers with real-time feedback is a game changer. Perazzo continues, “To assist researchers so they are not blindly operating the instrument, the computational workflow must provide near–real time results—somewhere in a 5–10 minute range. Successes by the ExaFEL team to date have resulted in a 10-minute turnaround time given the current data rates. This is a tremendous help to researchers, as they can see and make adjustments on the fly so they can use the instrument more effectively, which leads to better quality science, a broader user base, and a much more efficient use of these oversubscribed facilities.”
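The figures Perazzo quotes suggest a simple back-of-the-envelope budget for how often a group can course-correct during its beam time. The sketch below assumes, purely for illustration, that one 12-hour shift runs on each of the 5 days and that analysis turnarounds run back to back. The function name is hypothetical.

```python
def feedback_cycles(days: int, shift_hours: float, turnaround_minutes: float) -> int:
    """Count complete analysis turnarounds that fit in the allotted beam time."""
    beam_minutes = days * shift_hours * 60
    return int(beam_minutes // turnaround_minutes)


# Compare the two ends of the 5-10 minute turnaround range quoted in the text.
for turnaround in (5, 10):
    cycles = feedback_cycles(days=5, shift_hours=12, turnaround_minutes=turnaround)
    print(f"{turnaround}-minute turnaround: {cycles} feedback opportunities in 60 hours")
```

With these assumptions, a 10-minute turnaround gives 360 chances to adjust the experiment over 60 hours of beam time, and a 5-minute turnaround gives 720.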

Perazzo predicts that the LCLS-II upgrade will require exascale-class processing capabilities: “In the future, the greater capability of the LCLS-II laser means that future workflows must deliver timely results when processing a two-orders-of-magnitude increase in data, with some experiments generating a four-orders-of-magnitude increase in data. The ExaFEL team is making the software faster, but the forthcoming exascale supercomputers will be needed.” Perazzo further notes that the ExaFEL software is designed to scale efficiently and is written to be portable, which means it should be adaptable to future high-performance computing (HPC) architectures that will likely deliver beyond-exascale performance.

New Algorithm Development Expands What Can Be Done

Better software and exascale hardware can support scientific discovery at LCLS and future research at LCLS-II, but the ExaFEL team believes that they can do more with the data.

Figure 3. Chuck Yoon, Advanced Methods for Analysis Group leader at the SLAC National Accelerator Laboratory.

GPU-friendly algorithms for single-particle imaging (SPI) provide experimentalists with the ability to study molecules that are difficult to crystallize, thereby further expanding the opportunity for discovery. Yoon explains, “SPI breaks free from the need for crystallization, which is difficult for some proteins, and allows for imaging molecular dynamics at near-ambient conditions. The SpiniFEL codebase is being developed to perform structure determination of proteins from SPI experiments on supercomputers in near real time, while an experiment is taking place, so that the feedback about the data can guide the data collection strategy.”[14] SpiniFEL leverages distributed computing to spread work across many compute nodes where on-node computation is accelerated using general-purpose GPUs.[15]

As part of the ECP-funded work for new algorithms and workflow improvements, Yoon notes that the team is writing code that can utilize GPUs and CPUs more effectively. “The impact of these new algorithms,” he adds, “is that scientists will be able to observe details of enzyme interactions that are averaged away in crystallography. It also helps to future proof the software so that it can potentially run on even faster systems in the post-exascale era.”

Exploit Fast Memory Systems and High I/O Concurrency

Data throughput is a central theme that ties everything together in the ExaFEL workflow. ECP funding has helped the team add new capabilities, such as fast MPI data transfers to GPUs, and exploit the parallel processing capabilities of Legion. Legion is a data-centric parallel programming system for writing portable high-performance programs that target distributed heterogeneous architectures.[16] Elliott Slaughter, staff scientist in the Computer Science Research Department at SLAC, notes that Legion provides capabilities to manage scheduling, load balancing, and data-centric computation. Legion is portable, performs well, and automates both the discovery of parallelism in the code and asynchronous data movement to and from GPU devices. Slaughter has made contributions to the Legion programming system, including research with collaborators on data partitioning, which is a central challenge in parallel processing and an explicit challenge when processing the LCLS and forthcoming LCLS-II data. More specifically, Slaughter and collaborators focused on creating a dependent partitioning framework that allows an application to concisely describe relationships between partitions.[17]

“Having a system like Legion that understands your data, including how it is partitioned and where it is laid out, is a huge advantage,” Slaughter notes. “Legion makes data partitioning a first-class citizen, which means the programming system can reason about the data and perform important tasks such as keeping ensembles aggregated while preventing the workflow from getting overwhelmed. Furthermore, Legion gives programmers the ability to exploit node-local memory and storage to reduce or eliminate costly data movement. These and other features make Legion a critical component in managing data in ExaFEL’s high-throughput data-processing workflow.”
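The idea of deriving one partition from another can be illustrated without any Legion code. The following plain-Python sketch does not use the Legion API; the snapshot and peak records, the field names, and both helper functions are hypothetical. It splits snapshots across nodes, then derives the matching split of diffraction peaks from that first partition so that each peak lands on the node holding its snapshot.

```python
from collections import defaultdict


def partition_snapshots(snapshot_ids, num_nodes):
    """Primary partition: assign snapshots to nodes round-robin."""
    partition = defaultdict(list)
    for position, snapshot_id in enumerate(snapshot_ids):
        partition[position % num_nodes].append(snapshot_id)
    return dict(partition)


def derive_peak_partition(peaks, snapshot_partition):
    """Derived partition: place each peak with the snapshot it refers to."""
    owner = {
        snapshot_id: node
        for node, ids in snapshot_partition.items()
        for snapshot_id in ids
    }
    partition = defaultdict(list)
    for peak in peaks:
        partition[owner[peak["snapshot"]]].append(peak["peak_id"])
    return dict(partition)


# Hypothetical toy data: four snapshots and some peaks found in them.
snapshots = [100, 101, 102, 103]
peaks = [
    {"peak_id": "a", "snapshot": 100},
    {"peak_id": "b", "snapshot": 101},
    {"peak_id": "c", "snapshot": 100},
    {"peak_id": "d", "snapshot": 103},
]

by_node = partition_snapshots(snapshots, num_nodes=2)
peaks_by_node = derive_peak_partition(peaks, by_node)
print(by_node)
print(peaks_by_node)
```

Because the second partition is computed from the first, changing how snapshots are distributed automatically changes where the peaks go. This is loosely the kind of relationship between partitions that a dependent partitioning framework lets an application state concisely, rather than maintaining both splits by hand.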

Summary

The ExaFEL team is rethinking all aspects of the LCLS and LCLS-II computational workflow—from the advancement and use of the data-reasoning capabilities in the Legion programming system, to the creation of new algorithms that can utilize massively parallel computing devices such as GPUs. Changes to the ExaFEL workflow to date have enabled better science by helping experimentalists see what is happening while they are running their experiments on LCLS.

Recognizing that exascale computing capabilities will be required for future workflows, the team is laying the groundwork now so experimentalists can leverage these capabilities when the machines arrive. Upgraded laser capabilities mean that the team will have to leverage exascale supercomputers to process the expected two- to four-orders-of-magnitude increase in data volume generated by the upgraded LCLS-II. Rethinking the ExaFEL workflow in terms of exascale capabilities has also opened the door to new algorithm development that the team believes will increase the resolution of the images gathered and the types of systems that can be studied, be they chemical or biological processes or some new material.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the US Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.

Use of the Linac Coherent Light Source (LCLS), SLAC National Accelerator Laboratory, is supported by the US Department of Energy, Office of Science, Office of Basic Energy Sciences under Contract No. DE-AC02-76SF00515.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national laboratories and commercial organizations.

[1] https://www.exascaleproject.org/research-project/exafel/

[2] https://exascale.lbl.gov/2020/02/11/exafel-data-analytics-at-the-exascale-for-free-electron-lasers/

[3] https://lcls.slac.stanford.edu/overview

[4] https://www6.slac.stanford.edu/news/2019-04-10-10-ways-slacs-x-ray-laser-has-transformed-science.aspx

[5] https://www-public.slac.stanford.edu/lcls/WhatIsLCLS_1.aspx

[6] An illustration of this analogy from an older paper can be seen at https://www.youtube.com/watch?v=9Y2DF-X5LSA.

[7] https://lcls.slac.stanford.edu/lcls-ii

[8] https://news.fnal.gov/2021/03/fermilab-delivers-final-superconducting-particle-accelerator-component-for-worlds-most-powerful-laser/

[9] https://www6.slac.stanford.edu/news/2019-04-10-10-ways-slacs-x-ray-laser-has-transformed-science.aspx

[10] https://www.exascaleproject.org/research-project/exafel/

[11] https://portal.slac.stanford.edu/sites/lcls_public/Documents/LCLS-IIScienceOpportunities_final.pdf

[12] https://lcls.slac.stanford.edu/lcls-ii-he/design-and-performance

[13] https://lcls.slac.stanford.edu/parameters

[14] https://arxiv.org/abs/2109.05339

[15] https://www.osti.gov/biblio/1834376